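On the mini PC I set up the other day, I run ESXi 8.0 Free with the system installed on a spare M.2 SATA 256GB drive and an M.2 NVMe 512GB drive as the main datastore.

Looking through the M.2 storage I had on hand, I found two spare 2TB M.2 NVMe SSDs, so I decided to dedicate one of them to ESXi. I first put it in a USB NVMe enclosure, formatted it with VMFS, and moved the data over from the M.2 NVMe 512GB drive.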

Incidentally, to get ESXi to recognize the USB NVMe enclosure, I had to stop the USB Arbitrator service, which runs by default on ESXi 8.0 for USB passthrough.

Source: Configuring a vSphere ESXi host to use a local USB device for VMkernel coredumps

# /etc/init.d/usbarbitrator stop

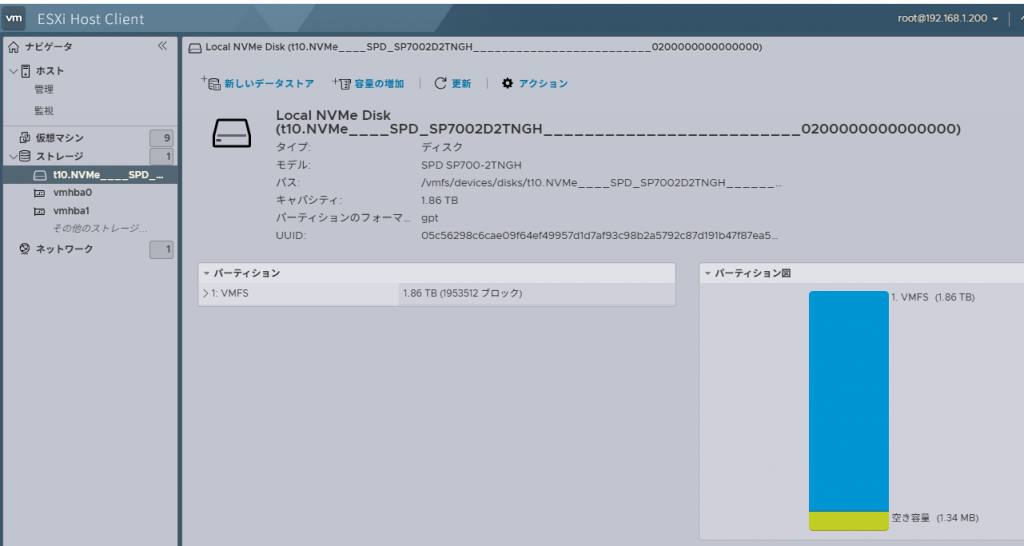

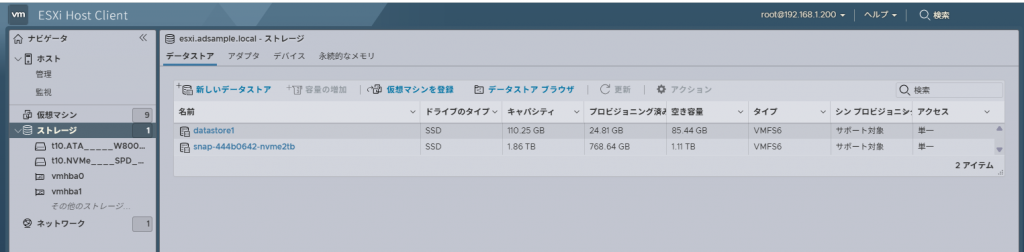

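I then installed the M.2 NVMe 2TB drive internally and checked it from the ESXi Host Client.

Under [Storage]-[Devices], the SPD SP7002D2TNGH is recognized correctly.

Clicking it shows that the VMFS partition on it is recognized as well.

However, it does not appear under [Storage]-[Datastores].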

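After a lot of digging to figure out what was going on, I managed to resolve it.

My guess is that the VMFS volume that had been mounted through the USB NVMe enclosure should have been explicitly unmounted first, and that skipping this step left things in a state that caused trouble in various places.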
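For reference, an explicit unmount before pulling the drive out of the USB enclosure could look roughly like the sketch below. The `run` helper and the `DRY_RUN` variable are names made up for this illustration, and `nvme2tb` is simply the datastore label used on this host. Whether unmounting first would really have avoided the problem is unverified; this only shows the step that the guess above refers to.

```shell
#!/bin/sh
# Sketch: cleanly unmount a VMFS datastore before physically moving its drive.
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 on a real host.
DRY_RUN="${DRY_RUN:-1}"

# run CMD...: hypothetical helper that either prints or executes a command.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

# unmount_datastore LABEL: unmount by volume label, then list filesystems to confirm.
unmount_datastore() {
    label="$1"
    if [ -z "$label" ]; then
        echo "usage: unmount_datastore LABEL" >&2
        return 1
    fi
    run esxcli storage filesystem unmount --volume-label="$label"
    run esxcli storage filesystem list
}
```

With the functions loaded, `unmount_datastore nvme2tb` prints the two commands it would run; `DRY_RUN=0 unmount_datastore nvme2tb` executes them. Any VM using the datastore needs to be powered off or moved first.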

First, check whether the drive is recognized as M.2 NVMe storage by running "esxcli nvme namespace list" and "esxcli nvme controller list".

[root@esxi:~] esxcli nvme namespace list

Name Controller Number Namespace ID Block Size Capacity in MB

--------------------------------------------------------------------- ----------------- ------------ ---------- --------------

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000 256 1 512 1953514

[root@esxi:~] esxcli nvme controller list

Name Controller Number Adapter Transport Type Is Online Controller Type Is VVOL Keep Alive Timeout IO Queue Number IO Queue Size

---------------------------------------------------------------------------------------- ----------------- ------- -------------- --------- --------------- ------- ------------------ --------------- -------------

nqn.2014-08.org.nvmexpress_1e4b_SPD_SP700-2TNGH_________________________0901SP7007D00399 256 vmhba1 PCIe true false 0 1 1024

[root@esxi:~]

Next, check whether the device exists under /vmfs/devices/disks/.

[root@esxi:~] ls /vmfs/devices/disks/

t10.ATA_____W800S_256GB_____________________________2202211088199_______

t10.ATA_____W800S_256GB_____________________________2202211088199_______:1

t10.ATA_____W800S_256GB_____________________________2202211088199_______:5

t10.ATA_____W800S_256GB_____________________________2202211088199_______:6

t10.ATA_____W800S_256GB_____________________________2202211088199_______:7

t10.ATA_____W800S_256GB_____________________________2202211088199_______:8

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000:1

vml.0100000000303230305f303030305f303030305f3030303000535044205350

vml.0100000000303230305f303030305f303030305f3030303000535044205350:1

vml.01000000003232303232313130383831393920202020202020573830305320

vml.01000000003232303232313130383831393920202020202020573830305320:1

vml.01000000003232303232313130383831393920202020202020573830305320:5

vml.01000000003232303232313130383831393920202020202020573830305320:6

vml.01000000003232303232313130383831393920202020202020573830305320:7

vml.01000000003232303232313130383831393920202020202020573830305320:8

vml.05c56298c6cae09f64ef49957d1d7af93c98b2a5792c87d191b47f87ea5b89f9e2

vml.05c56298c6cae09f64ef49957d1d7af93c98b2a5792c87d191b47f87ea5b89f9e2:1

[root@esxi:~]

The SPD drive is the one that is not showing up as a datastore, so look up its Devfs Path with "esxcli storage core device list".

[root@esxi:~] esxcli storage core device list

t10.ATA_____W800S_256GB_____________________________2202211088199_______

Display Name: Local ATA Disk (t10.ATA_____W800S_256GB_____________________________2202211088199_______)

Has Settable Display Name: true

Size: 244198

Device Type: Direct-Access

Multipath Plugin: HPP

Devfs Path: /vmfs/devices/disks/t10.ATA_____W800S_256GB_____________________________2202211088199_______

Vendor: ATA

Model: W800S 256GB

Revision: 3G5A

SCSI Level: 5

Is Pseudo: false

Status: on

Is RDM Capable: false

Is Local: true

Is Removable: false

Is SSD: true

Is VVOL PE: false

Is Offline: false

Is Perennially Reserved: false

Queue Full Sample Size: 0

Queue Full Threshold: 0

Thin Provisioning Status: yes

Attached Filters:

VAAI Status: unsupported

Other UIDs: vml.01000000003232303232313130383831393920202020202020573830305320

Is Shared Clusterwide: false

Is SAS: false

Is USB: false

Is Boot Device: true

Device Max Queue Depth: 31

No of outstanding IOs with competing worlds: 31

Drive Type: unknown

RAID Level: unknown

Number of Physical Drives: unknown

Protection Enabled: false

PI Activated: false

PI Type: 0

PI Protection Mask: NO PROTECTION

Supported Guard Types: NO GUARD SUPPORT

DIX Enabled: false

DIX Guard Type: NO GUARD SUPPORT

Emulated DIX/DIF Enabled: false

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000

Display Name: Local NVMe Disk (t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000)

Has Settable Display Name: true

Size: 1953514

Device Type: Direct-Access

Multipath Plugin: HPP

Devfs Path: /vmfs/devices/disks/t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000

Vendor: NVMe

Model: SPD SP700-2TNGH

Revision: SP02203A

SCSI Level: 0

Is Pseudo: false

Status: on

Is RDM Capable: false

Is Local: true

Is Removable: false

Is SSD: true

Is VVOL PE: false

Is Offline: false

Is Perennially Reserved: false

Queue Full Sample Size: 0

Queue Full Threshold: 0

Thin Provisioning Status: no

Attached Filters:

VAAI Status: unsupported

Other UIDs: vml.05c56298c6cae09f64ef49957d1d7af93c98b2a5792c87d191b47f87ea5b89f9e2

Is Shared Clusterwide: false

Is SAS: false

Is USB: false

Is Boot Device: false

Device Max Queue Depth: 1023

No of outstanding IOs with competing worlds: 32

Drive Type: physical

RAID Level: NA

Number of Physical Drives: 1

Protection Enabled: false

PI Activated: false

PI Type: 0

PI Protection Mask: NO PROTECTION

Supported Guard Types: NO GUARD SUPPORT

DIX Enabled: false

DIX Guard Type: NO GUARD SUPPORT

Emulated DIX/DIF Enabled: false

[root@esxi:~]
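The listing is long, and only three fields per device matter here: Devfs Path, Other UIDs, and Is Boot Device. As a rough sketch, the output can be piped through a small awk filter; `list_devfs_paths` is a name invented for this example, and it assumes the fields appear in the order shown in the output above.

```shell
#!/bin/sh
# list_devfs_paths: read `esxcli storage core device list` output on stdin and
# print "<Devfs Path> <Other UIDs> boot=<true|false>" for each device.
list_devfs_paths() {
    awk '
        { sub(/^[ \t]+/, "") }                      # accept indented or flat lines
        /^Devfs Path: /     { path = substr($0, 13) }
        /^Other UIDs: /     { uid  = substr($0, 13) }
        /^Is Boot Device: / { print path, uid, "boot=" substr($0, 17); path = uid = "" }
    '
}
```

On the host this would be used as `esxcli storage core device list | list_devfs_paths`. The non-boot entry is the 2TB drive, and appending `:1` to either its Devfs Path or its vml name gives the partition path that voma expects.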

The VMFS partition is partition 1, so I ran the following check against the device path with ":1" appended.

[root@esxi:~] voma -m vmfs -f check -N -d /vmfs/devices/disks/vml.05c56298c6cae09f64ef49957d1d7af93c98b2a5792c87d191b47f87ea5b89f9e2:1

Running VMFS Checker version 2.1 in check mode

Initializing LVM metadata, Basic Checks will be done

Checking for filesystem activity

Performing filesystem liveness check..|Scanning for VMFS-6 host activity (4096 bytes/HB, 1024 HBs).

Reservation Support is not present for NVME devices

Performing filesystem liveness check..|

########################################################################

# Warning !!! #

# #

# You are about to execute VOMA without device reservation. #

# Any access to this device from other hosts when VOMA is running #

# can cause severe data corruption #

# #

# This mode is supported only under VMware support supervision. #

########################################################################

Do you want to continue (Y/N)?

0) _Yes

1) _No

Select a number from 0-1: 0

Phase 1: Checking VMFS header and resource files

Detected VMFS-6 file system (labeled:'nvme2tb') with UUID:68e4cab1-0a865c28-49c0-04ab182311d3, Version 6:82

Phase 2: Checking VMFS heartbeat region

Phase 3: Checking all file descriptors.

Phase 4: Checking pathname and connectivity.

Phase 5: Checking resource reference counts.

Total Errors Found: 0

[root@esxi:~]

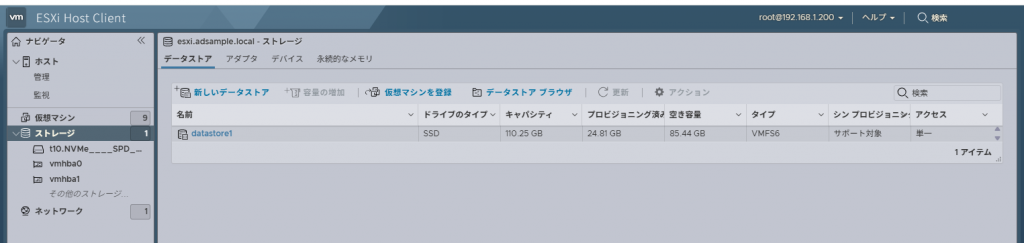

After running esxcli storage filesystem rescan, the VMFS volume should be recognized either as a filesystem or as a snapshot.

[root@esxi:~] esxcli storage filesystem rescan

[root@esxi:~] esxcli storage filesystem list

Mount Point Volume Name UUID Mounted Type Size Free

------------------------------------------------- ------------------------------------------ ----------------------------------- ------- ------ ------------ ------------

/vmfs/volumes/68cad69a-e82d8e40-5b65-5bb7fb6107f2 datastore1 68cad69a-e82d8e40-5b65-5bb7fb6107f2 true VMFS-6 118380036096 91743059968

/vmfs/volumes/68cad69a-d23fb18e-73e5-5bb7fb6107f2 OSDATA-68cad69a-d23fb18e-73e5-5bb7fb6107f2 68cad69a-d23fb18e-73e5-5bb7fb6107f2 true VMFSOS 128580583424 125363552256

/vmfs/volumes/fa8a25f7-ba40ebee-45ac-f419c9f388e0 BOOTBANK1 fa8a25f7-ba40ebee-45ac-f419c9f388e0 true vfat 4293591040 4022075392

/vmfs/volumes/f43b0450-7e4d6762-c6be-52e6552cc1f8 BOOTBANK2 f43b0450-7e4d6762-c6be-52e6552cc1f8 true vfat 4293591040 4021354496

[root@esxi:~] esxcli storage vmfs snapshot lis

Error: Unknown command or namespace storage vmfs snapshot lis

[root@esxi:~] esxcli storage vmfs snapshot list

68e4cab1-0a865c28-49c0-04ab182311d3

Volume Name: nvme2tb

VMFS UUID: 68e4cab1-0a865c28-49c0-04ab182311d3

Can mount: true

Reason for un-mountability:

Can resignature: true

Reason for non-resignaturability:

Unresolved Extent Count: 1

[root@esxi:~]
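The two fields to look at in the snapshot listing are Volume Name and Can resignature. A minimal sketch of pulling out the labels that can be resignatured is shown below; `resignaturable_volumes` is a hypothetical helper name, and the parsing assumes the field layout seen in the output above.

```shell
#!/bin/sh
# resignaturable_volumes: read `esxcli storage vmfs snapshot list` output on stdin
# and print the volume label of every entry that reports "Can resignature: true".
resignaturable_volumes() {
    awk '
        { sub(/^[ \t]+/, "") }                     # accept indented or flat lines
        /^Volume Name: /          { name = substr($0, 14) }
        /^Can resignature: true/  { print name }
    '
}
```

On the host, `esxcli storage vmfs snapshot list | resignaturable_volumes` would print `nvme2tb` for the listing above, which is the label to pass to `--volume-label`.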

This time it was recognized as a snapshot, so I resignatured it.

[root@esxi:~] esxcli storage vmfs snapshot resignature --volume-label=nvme2tb

[root@esxi:~]

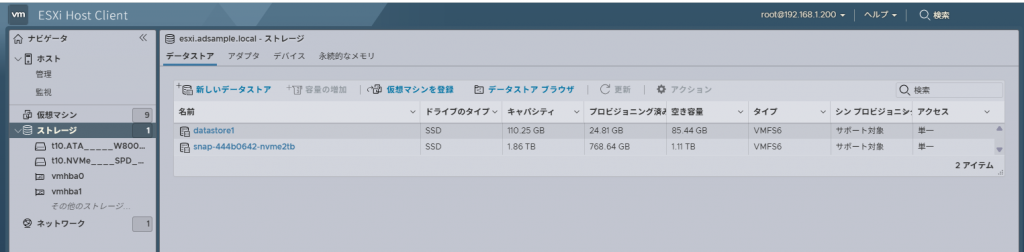

After resignaturing, it was recognized as an ordinary filesystem, although its name now starts with "snap".

[root@esxi:~] esxcli storage vmfs snapshot list

[root@esxi:~] esxcli storage filesystem list

Mount Point Volume Name UUID Mounted Type Size Free

------------------------------------------------- ------------------------------------------ ----------------------------------- ------- ------ ------------- -------------

/vmfs/volumes/68cad69a-e82d8e40-5b65-5bb7fb6107f2 datastore1 68cad69a-e82d8e40-5b65-5bb7fb6107f2 true VMFS-6 118380036096 91743059968

/vmfs/volumes/68e5b682-56352c06-7c60-04ab182311d3 snap-444b0642-nvme2tb 68e5b682-56352c06-7c60-04ab182311d3 true VMFS-6 2048162529280 1222844088320

/vmfs/volumes/68cad69a-d23fb18e-73e5-5bb7fb6107f2 OSDATA-68cad69a-d23fb18e-73e5-5bb7fb6107f2 68cad69a-d23fb18e-73e5-5bb7fb6107f2 true VMFSOS 128580583424 125363552256

/vmfs/volumes/fa8a25f7-ba40ebee-45ac-f419c9f388e0 BOOTBANK1 fa8a25f7-ba40ebee-45ac-f419c9f388e0 true vfat 4293591040 4022075392

/vmfs/volumes/f43b0450-7e4d6762-c6be-52e6552cc1f8 BOOTBANK2 f43b0450-7e4d6762-c6be-52e6552cc1f8 true vfat 4293591040 4021354496

[root@esxi:~]

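The volume was still recognized after a reboot and worked as an ordinary VMFS volume, so I renamed the datastore back to its original name and put it back into use.

Investigation log

Everything below is a set of notes on the information I consulted while investigating.

Trying some of the commands from the KB "Identifying disks when working with VMware ESXi/ESX".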

[root@esxi:~] esxcli storage core path list

sata.vmhba0-sata.0:1-t10.ATA_____W800S_256GB_____________________________2202211088199_______

UID: sata.vmhba0-sata.0:1-t10.ATA_____W800S_256GB_____________________________2202211088199_______

Runtime Name: vmhba0:C0:T1:L0

Device: t10.ATA_____W800S_256GB_____________________________2202211088199_______

Device Display Name: Local ATA Disk (t10.ATA_____W800S_256GB_____________________________2202211088199_______)

Adapter: vmhba0

Controller: Not Applicable

Channel: 0

Target: 1

LUN: 0

Plugin: HPP

State: active

Transport: sata

Adapter Identifier: sata.vmhba0

Target Identifier: sata.0:1

Adapter Transport Details: Unavailable or path is unclaimed

Target Transport Details: Unavailable or path is unclaimed

Maximum IO Size: 33554432

pcie.300-pcie.0:0-t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000

UID: pcie.300-pcie.0:0-t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000

Runtime Name: vmhba1:C0:T0:L0

Device: t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000

Device Display Name: Local NVMe Disk (t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000)

Adapter: vmhba1

Controller: nqn.2014-08.org.nvmexpress_1e4b_SPD_SP700-2TNGH_________________________0901SP7007D00399

Channel: 0

Target: 0

LUN: 0

Plugin: HPP

State: active

Transport: pcie

Adapter Identifier: pcie.300

Target Identifier: pcie.0:0

Adapter Transport Details: Unavailable or path is unclaimed

Target Transport Details: Unavailable or path is unclaimed

Maximum IO Size: 524288

[root@esxi:~]

[root@esxi:~] esxcfg-mpath -b

t10.ATA_____W800S_256GB_____________________________2202211088199_______ : Local ATA Disk (t10.ATA_____W800S_256GB_____________________________2202211088199_______)

vmhba0:C0:T1:L0 LUN:0 state:active Local HBA vmhba0 channel 0 target 1

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000 : Local NVMe Disk (t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000)

vmhba1:C0:T0:L0 LUN:0 state:active Local HBA vmhba1 channel 0 target 0

[root@esxi:~]

[root@esxi:~] esxcli storage core device list

t10.ATA_____W800S_256GB_____________________________2202211088199_______

Display Name: Local ATA Disk (t10.ATA_____W800S_256GB_____________________________2202211088199_______)

Has Settable Display Name: true

Size: 244198

Device Type: Direct-Access

Multipath Plugin: HPP

Devfs Path: /vmfs/devices/disks/t10.ATA_____W800S_256GB_____________________________2202211088199_______

Vendor: ATA

Model: W800S 256GB

Revision: 3G5A

SCSI Level: 5

Is Pseudo: false

Status: on

Is RDM Capable: false

Is Local: true

Is Removable: false

Is SSD: true

Is VVOL PE: false

Is Offline: false

Is Perennially Reserved: false

Queue Full Sample Size: 0

Queue Full Threshold: 0

Thin Provisioning Status: yes

Attached Filters:

VAAI Status: unsupported

Other UIDs: vml.01000000003232303232313130383831393920202020202020573830305320

Is Shared Clusterwide: false

Is SAS: false

Is USB: false

Is Boot Device: true

Device Max Queue Depth: 31

No of outstanding IOs with competing worlds: 31

Drive Type: unknown

RAID Level: unknown

Number of Physical Drives: unknown

Protection Enabled: false

PI Activated: false

PI Type: 0

PI Protection Mask: NO PROTECTION

Supported Guard Types: NO GUARD SUPPORT

DIX Enabled: false

DIX Guard Type: NO GUARD SUPPORT

Emulated DIX/DIF Enabled: false

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000

Display Name: Local NVMe Disk (t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000)

Has Settable Display Name: true

Size: 1953514

Device Type: Direct-Access

Multipath Plugin: HPP

Devfs Path: /vmfs/devices/disks/t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000

Vendor: NVMe

Model: SPD SP700-2TNGH

Revision: SP02203A

SCSI Level: 0

Is Pseudo: false

Status: on

Is RDM Capable: false

Is Local: true

Is Removable: false

Is SSD: true

Is VVOL PE: false

Is Offline: false

Is Perennially Reserved: false

Queue Full Sample Size: 0

Queue Full Threshold: 0

Thin Provisioning Status: no

Attached Filters:

VAAI Status: unsupported

Other UIDs: vml.05c56298c6cae09f64ef49957d1d7af93c98b2a5792c87d191b47f87ea5b89f9e2

Is Shared Clusterwide: false

Is SAS: false

Is USB: false

Is Boot Device: false

Device Max Queue Depth: 1023

No of outstanding IOs with competing worlds: 32

Drive Type: physical

RAID Level: NA

Number of Physical Drives: 1

Protection Enabled: false

PI Activated: false

PI Type: 0

PI Protection Mask: NO PROTECTION

Supported Guard Types: NO GUARD SUPPORT

DIX Enabled: false

DIX Guard Type: NO GUARD SUPPORT

Emulated DIX/DIF Enabled: false

[root@esxi:~]

[root@esxi:~] esxcfg-scsidevs -c

Device UID Device Type Console Device Size Multipath PluginDisplay Name

t10.ATA_____W800S_256GB_____________________________2202211088199_______ Direct-Access /vmfs/devices/disks/t10.ATA_____W800S_256GB_____________________________2202211088199_______ 244198MB HPP Local ATA Disk (t10.ATA_____W800S_256GB_____________________________2202211088199_______)

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000 Direct-Access /vmfs/devices/disks/t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000 1953514MB HPP Local NVMe Disk (t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000)

[root@esxi:~]

In the VMFS-related output, however, the 2TB device does not appear.

[root@esxi:~] esxcli storage vmfs extent list

Volume Name VMFS UUID Extent Number Device Name Partition

------------------------------------------ ----------------------------------- ------------- ------------------------------------------------------------------------ ---------

datastore1 68cad69a-e82d8e40-5b65-5bb7fb6107f2 0 t10.ATA_____W800S_256GB_____________________________2202211088199_______ 8

OSDATA-68cad69a-d23fb18e-73e5-5bb7fb6107f2 68cad69a-d23fb18e-73e5-5bb7fb6107f2 0 t10.ATA_____W800S_256GB_____________________________2202211088199_______ 7

[root@esxi:~]

[root@esxi:~] esxcfg-scsidevs -m

t10.ATA_____W800S_256GB_____________________________2202211088199_______:8 /vmfs/devices/disks/t10.ATA_____W800S_256GB_____________________________2202211088199_______:8 68cad69a-e82d8e40-5b65-5bb7fb6107f2 0 datastore1

t10.ATA_____W800S_256GB_____________________________2202211088199_______:7 /vmfs/devices/disks/t10.ATA_____W800S_256GB_____________________________2202211088199_______:7 68cad69a-d23fb18e-73e5-5bb7fb6107f2 0 OSDATA-68cad69a-d23fb18e-73e5-5bb7fb6107f2

[root@esxi:~]

[root@esxi:~] esxcli storage filesystem list

Mount Point Volume Name UUID Mounted Type Size Free

------------------------------------------------- ------------------------------------------ ----------------------------------- ------- ------ ------------ ------------

/vmfs/volumes/68cad69a-e82d8e40-5b65-5bb7fb6107f2 datastore1 68cad69a-e82d8e40-5b65-5bb7fb6107f2 true VMFS-6 118380036096 91743059968

/vmfs/volumes/68cad69a-d23fb18e-73e5-5bb7fb6107f2 OSDATA-68cad69a-d23fb18e-73e5-5bb7fb6107f2 68cad69a-d23fb18e-73e5-5bb7fb6107f2 true VMFSOS 128580583424 125363552256

/vmfs/volumes/fa8a25f7-ba40ebee-45ac-f419c9f388e0 BOOTBANK1 fa8a25f7-ba40ebee-45ac-f419c9f388e0 true vfat 4293591040 4022075392

/vmfs/volumes/f43b0450-7e4d6762-c6be-52e6552cc1f8 BOOTBANK2 f43b0450-7e4d6762-c6be-52e6552cc1f8 true vfat 4293591040 4021354496

[root@esxi:~]

Looking under /vmfs/devices/disks.

[root@esxi:~] ls -alh /vmfs/devices/disks

total 4500908025

drwxr-xr-x 2 root root 512 Oct 8 00:11 .

drwxr-xr-x 16 root root 512 Oct 8 00:11 ..

-rw------- 1 root root 238.5G Oct 8 00:11 t10.ATA_____W800S_256GB_____________________________2202211088199_______

-rw------- 1 root root 100.0M Oct 8 00:11 t10.ATA_____W800S_256GB_____________________________2202211088199_______:1

-rw------- 1 root root 4.0G Oct 8 00:11 t10.ATA_____W800S_256GB_____________________________2202211088199_______:5

-rw------- 1 root root 4.0G Oct 8 00:11 t10.ATA_____W800S_256GB_____________________________2202211088199_______:6

-rw------- 1 root root 119.9G Oct 8 00:11 t10.ATA_____W800S_256GB_____________________________2202211088199_______:7

-rw------- 1 root root 110.5G Oct 8 00:11 t10.ATA_____W800S_256GB_____________________________2202211088199_______:8

-rw------- 1 root root 1.9T Oct 8 00:11 t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000

-rw------- 1 root root 1.9T Oct 8 00:11 t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000:1

lrwxrwxrwx 1 root root 69 Oct 8 00:11 vml.0100000000303230305f303030305f303030305f3030303000535044205350 -> t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000

lrwxrwxrwx 1 root root 71 Oct 8 00:11 vml.0100000000303230305f303030305f303030305f3030303000535044205350:1 -> t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000:1

lrwxrwxrwx 1 root root 72 Oct 8 00:11 vml.01000000003232303232313130383831393920202020202020573830305320 -> t10.ATA_____W800S_256GB_____________________________2202211088199_______

lrwxrwxrwx 1 root root 74 Oct 8 00:11 vml.01000000003232303232313130383831393920202020202020573830305320:1 -> t10.ATA_____W800S_256GB_____________________________2202211088199_______:1

lrwxrwxrwx 1 root root 74 Oct 8 00:11 vml.01000000003232303232313130383831393920202020202020573830305320:5 -> t10.ATA_____W800S_256GB_____________________________2202211088199_______:5

lrwxrwxrwx 1 root root 74 Oct 8 00:11 vml.01000000003232303232313130383831393920202020202020573830305320:6 -> t10.ATA_____W800S_256GB_____________________________2202211088199_______:6

lrwxrwxrwx 1 root root 74 Oct 8 00:11 vml.01000000003232303232313130383831393920202020202020573830305320:7 -> t10.ATA_____W800S_256GB_____________________________2202211088199_______:7

lrwxrwxrwx 1 root root 74 Oct 8 00:11 vml.01000000003232303232313130383831393920202020202020573830305320:8 -> t10.ATA_____W800S_256GB_____________________________2202211088199_______:8

lrwxrwxrwx 1 root root 69 Oct 8 00:11 vml.05c56298c6cae09f64ef49957d1d7af93c98b2a5792c87d191b47f87ea5b89f9e2 -> t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000

lrwxrwxrwx 1 root root 71 Oct 8 00:11 vml.05c56298c6cae09f64ef49957d1d7af93c98b2a5792c87d191b47f87ea5b89f9e2:1 -> t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000:1

[root@esxi:~]

From the KB "Detach a LUN device from ESXi hosts".

[root@esxi:~] esxcli storage core device world list

Device World ID Open Count World Name

------------------------------------------------------------------------ -------- ---------- ----------

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 524300 1 idle0

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 524399 1 OCFlush

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 524403 1 bcflushd

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 524728 1 Vol3JournalExtendMgrWorld

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 524813 1 J6AsyncReplayManager

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 525311 1 hostd

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 525543 1 healthd

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000 525311 1 hostd

[root@esxi:~] esxcli storage core device world list -d vml.05c56298c6cae09f64ef49957d1d7af93c98b2a5792c87d191b47f87ea5b89f9e2

Device World ID Open Count World Name

--------------------------------------------------------------------- -------- ---------- ----------

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000 525311 1 hostd

[root@esxi:~]

[root@esxi:~] esxcli storage core adapter list

HBA Name Driver Link State UID Capabilities Description

-------- --------- ---------- ----------- ------------ -----------

vmhba0 vmw_ahci link-n/a sata.vmhba0 (0000:00:17.0) Intel Corporation Alder Lake-N SATA AHCI Controller

vmhba1 nvme_pcie link-n/a pcie.300 (0000:03:00.0) MAXIO Technology (Hangzhou) Ltd. NVMe SSD Controller MAP1602 (DRAM-less)

[root@esxi:~]

[root@esxi:~] esxcli storage core device partition list

Device Partition Start Sector End Sector Type Size

------------------------------------------------------------------------ --------- ------------ ---------- ---- -------------

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 0 0 500118191 0 256060514304

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 1 64 204863 0 104857600

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 5 208896 8595455 6 4293918720

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 6 8597504 16984063 6 4293918720

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 7 16986112 268435455 f8 128742064128

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 8 268437504 500118158 fb 118620495360

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000 0 0 4000797359 0 2048408248320

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000 1 2048 4000794624 fb 2048405799424

[root@esxi:~] esxcli storage core device partition showguid

Device Partition Layout GUID

------------------------------------------------------------------------ --------- ------ ----

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 0 GPT 00000000000000000000000000000000

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 1 GPT c12a7328f81f11d2ba4b00a0c93ec93b

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 5 GPT ebd0a0a2b9e5443387c068b6b72699c7

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 6 GPT ebd0a0a2b9e5443387c068b6b72699c7

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 7 GPT 4eb2ea3978554790a79efae495e21f8d

t10.ATA_____W800S_256GB_____________________________2202211088199_______ 8 GPT aa31e02a400f11db9590000c2911d1b8

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000 0 GPT 00000000000000000000000000000000

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000 1 GPT aa31e02a400f11db9590000c2911d1b8

[root@esxi:~]
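As a sanity check on the partition table, the Size column can be reproduced from the start and end sectors. The sketch below assumes 512-byte sectors (the Block Size reported by esxcli nvme namespace list) and treats the end sector as inclusive; `partition_bytes` is a name made up for this example.

```shell
#!/bin/sh
# partition_bytes START END: size in bytes of a partition whose END sector is
# inclusive, assuming 512-byte sectors.
partition_bytes() {
    echo $(( ($2 - $1 + 1) * 512 ))
}
```

For partition 1 on the 2TB drive, `partition_bytes 2048 4000794624` gives 2048405799424, which matches the Size column, so the partition entry itself looks intact.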

Next, I looked into the parameters of the ESXi vmkernel modules.

First, the list of modules that look related to nvme.

[root@esxi:~] esxcli system module list|grep nvme

vmknvme true true

vmknvme_vmkapi_compat true true

nvme_pcie true true

[root@esxi:~]

Check the parameters of each module.

[root@esxi:~] esxcli system module parameters list --module=nvme_pcie

Name Type Value Description

--------------------------- ---- ----- -----------

nvmePCIEBlkSizeAwarePollAct int NVMe PCIe block size aware poll activate. Valid if poll activated. Default activated.

nvmePCIEDebugMask int NVMe PCIe driver debug mask

nvmePCIEDma4KSwitch int NVMe PCIe 4k-alignment DMA

nvmePCIEFakeAdminQSize uint NVMe PCIe fake ADMIN queue size. 0's based

nvmePCIELogLevel int NVMe PCIe driver log level

nvmePCIEMsiEnbaled int NVMe PCIe MSI interrupt enable

nvmePCIEPollAct int NVMe PCIe hybrid poll activate, MSIX interrupt must be enabled. Default activated.

nvmePCIEPollInterval uint NVMe PCIe hybrid poll interval between each poll in microseconds. Valid if poll activated. Default 50us.

nvmePCIEPollOIOThr uint NVMe PCIe hybrid poll OIO threshold of automatic switch from interrupt to poll. Valid if poll activated. Default 30 OIO commands per IO queue.

[root@esxi:~] esxcli system module parameters list --module=vmknvme

Name Type Value Description

------------------------------------- ---- ----- -----------

vmknvme_adapter_num_cmpl_queues uint Number of PSA completion queues for NVMe-oF adapter, min: 1, max: 16, default: 4

vmknvme_bind_intr uint If enabled, the interrupt cookies are binded to completion worlds. This parameter is only applied when using driver completion worlds.

vmknvme_compl_world_type uint completion world type, PSA: 0, VMKNVME: 1

vmknvme_ctlr_recover_initial_attempts uint Number of initial controller recover attempts, MIN: 2, MAX: 30

vmknvme_ctlr_recover_method uint controller recover method after initial recover attempts, RETRY: 0, DELETE: 1

vmknvme_cw_rate uint Number of completion worlds per IO queue (NVMe/PCIe only). Number is a power of 2. Applies when number of queues less than 4.

vmknvme_enable_noiob uint If enabled, driver will split the commands based on NOIOB.

vmknvme_hostnqn_format uint HostNQN format, UUID: 0, HostName: 1

vmknvme_io_queue_num uint vmknvme IO queue number for NVMe/PCIe adapter: pow of 2 in [1, 16]

vmknvme_io_queue_size uint IO queue size: [8, 1024]

vmknvme_iosplit_workaround uint If enabled, qdepth in PSA layer is half size of vmknvme settings.

vmknvme_log_level uint log level: [0, 20]

vmknvme_max_prp_entries_num uint User defined maximum number of PRP entries per controller:default value is 0

vmknvme_stats uint Nvme statistics per controller (NVMe/PCIe only now). Logical OR of flags for collecting. 0x0 for disable, 0x1 for basic data (IO pattern), 0x2 for histogram without IO block size, 0x4 for histogram with IO block size. Default 0x2.

vmknvme_total_io_queue_size uint Aggregated IO queue size of a controller, MIN: 64, MAX: 4096

vmknvme_use_default_domain_name uint If set to 1, the default domain name "com.vmware", not the system domain name will always be used to generate host NQN. Not used: 0, used: 1, default: 0

[root@esxi:~] esxcli system module parameters list --module=vmknvme_vmkapi_compat

[root@esxi:~]

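Nothing here appears to be related to how the device is handled as a datastore.

Looking through esxcli, I found the esxcli nvme command group.

The section on discovering and connecting to namespaces from the ESXi host in the HPE Alletra 9000: VMware ESXi Implementation Guide has examples of esxcli nvme commands for NVMe over FC, so I tried them.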

[root@esxi:~] esxcli nvme adapter list

Adapter Adapter Qualified Name Transport Type Driver Associated Devices

------- ----------------------------------------------------------------------------------- -------------- --------- ------------------

vmhba1 aqn:nvme_pcie:nqn.2014-08.org.nvmexpress1e4b1e4b0901SP7007D00399____SPD_SP700-2TNGH PCIe nvme_pcie

[root@esxi:~]

The namespace already exists.

[root@esxi:~] esxcli nvme namespace list

Name Controller Number Namespace ID Block Size Capacity in MB

--------------------------------------------------------------------- ----------------- ------------ ---------- --------------

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000 256 1 512 1953514

[root@esxi:~] esxcli nvme controller list

Name Controller Number Adapter Transport Type Is Online Controller Type Is VVOL Keep Alive Timeout IO Queue Number IO Queue Size

---------------------------------------------------------------------------------------- ----------------- ------- -------------- --------- --------------- ------- ------------------ --------------- -------------

nqn.2014-08.org.nvmexpress_1e4b_SPD_SP700-2TNGH_________________________0901SP7007D00399 256 vmhba1 PCIe true false 0 1 1024

[root@esxi:~]

The Dell article "PowerEdge: NVMe LED management on Dell servers and VMware ESXi" includes a step, as a preliminary to LED management, that displays what settings a device supports.

[root@esxi:~] esxcli nvme device list

HBA Name Status Signature

-------- ------ ---------

vmhba1 Online nvmeMgmt-nvmhba0

[root@esxi:~] esxcli nvme device get -A vmhba1

Controller Identify Info:

PCIVID: 0x1e4b

PCISSVID: 0x1e4b

Serial Number: 0901SP7007D00399

Model Number: SPD SP700-2TNGH

Firmware Revision: SP02203A

Recommended Arbitration Burst: 0

IEEE OUI Identifier: 000000

Controller Associated with an SR-IOV Virtual Function: false

Controller Associated with a PCI Function: true

NVM Subsystem May Contain Two or More Controllers: false

NVM Subsystem Contains Only One Controller: true

NVM Subsystem May Contain Two or More PCIe Ports: false

NVM Subsystem Contains Only One PCIe Port: true

Max Data Transfer Size: 7

Controller ID: 0

Version: 1.4

RTD3 Resume Latency: 500000 us

RTD3 Entry Latency: 2000000 us

Optional Firmware Activation Event Support: true

Optional Namespace Attribute Changed Event Support: false

Host Identifier Support: false

Namespace Management and Attachment Support: false

Firmware Activate and Download Support: true

Format NVM Support: true

Security Send and Receive Support: true

Abort Command Limit: 2

Async Event Request Limit: 3

Firmware Activate Without Reset Support: true

Firmware Slot Number: 3

The First Slot Is Read-only: false

Telemetry Log Page Support: false

Command Effects Log Page Support: true

SMART/Health Information Log Page per Namespace Support: false

Error Log Page Entries: 63

Number of Power States Support: 4

Format of Admin Vendor Specific Commands Is Same: true

Format of Admin Vendor Specific Commands Is Vendor Specific: false

Autonomous Power State Transitions Support: true

Warning Composite Temperature Threshold: 363

Critical Composite Temperature Threshold: 368

Max Time for Firmware Activation: 200 * 100ms

Host Memory Buffer Preferred Size: 8192 * 4KB

Host Memory Buffer Min Size: 8192 * 4KB

Total NVM Capacity: 0x1dceea56000

Unallocated NVM Capacity: 0x0

Access Size: 0 * 512B

Total Size: 0 * 128KB

Authentication Method: 0

Number of RPMB Units: 0

Keep Alive Support: 0

Max Submission Queue Entry Size: 64 Bytes

Required Submission Queue Entry Size: 64 Bytes

Max Completion Queue Entry Size: 16 Bytes

Required Completion Queue Entry Size: 16 Bytes

Max Outstanding Commands: 0

Number of Namespaces: 1

Reservation Support: false

Save/Select Field in Set/Get Feature Support: true

Write Zeroes Command Support: true

Dataset Management Command Support: true

Write Uncorrectable Command Support: true

Compare Command Support: true

Fused Operation Support: false

Cryptographic Erase as Part of Secure Erase Support: false

Cryptographic Erase and User Data Erase to All Namespaces: false

Cryptographic Erase and User Data Erase to One Particular Namespace: true

Format Operation to All Namespaces: false

Format Opertaion to One Particular Namespace: true

Volatile Write Cache Is Present: true

Atomic Write Unit Normal: 0 Logical Blocks

Atomic Write Unit Power Fail: 0 Logical Blocks

Format of All NVM Vendor Specific Commands Is Same: false

Format of All NVM Vendor Specific Commands Is Vendor Specific: true

Atomic Compare and Write Unit: 0

SGL Address Specify Offset Support: false

MPTR Contain SGL Descriptor Support: false

SGL Length Able to Larger than Data Amount: false

SGL Length Shall Be Equal to Data Amount: true

Byte Aligned Contiguous Physical Buffer of Metadata Support: false

SGL Bit Bucket Descriptor Support: false

SGL Keyed SGL Data Block Descriptor Support: false

SGL for NVM Command Set Support: false

NVM Subsystem NVMe Qualified Name:

NVM Subsystem NVMe Qualified Name (hex format):

[root@esxi:~]

Source: Offline migration procedure from SCSI to NVMe VMware VMFS datastores

[root@esxi:~] esxcli storage vmfs lockmode list

Volume Name UUID Type Locking Mode ATS Compatible ATS Upgrade Modes ATS Incompatibility Reason

------------------------------------------ ----------------------------------- -------- ------------ -------------- ----------------- --------------------------

datastore1 68cad69a-e82d8e40-5b65-5bb7fb6107f2 VMFS-6 ATS+SCSI false None Device does not support ATS

OSDATA-68cad69a-d23fb18e-73e5-5bb7fb6107f2 68cad69a-d23fb18e-73e5-5bb7fb6107f2 Non-VMFS ATS+SCSI false None Device does not support ATS

[root@esxi:~]

Check the file system with the voma command

[root@esxi:~] ls /vmfs/devices/disks/

t10.ATA_____W800S_256GB_____________________________2202211088199_______

t10.ATA_____W800S_256GB_____________________________2202211088199_______:1

t10.ATA_____W800S_256GB_____________________________2202211088199_______:5

t10.ATA_____W800S_256GB_____________________________2202211088199_______:6

t10.ATA_____W800S_256GB_____________________________2202211088199_______:7

t10.ATA_____W800S_256GB_____________________________2202211088199_______:8

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000

t10.NVMe____SPD_SP7002D2TNGH_________________________0200000000000000:1

vml.0100000000303230305f303030305f303030305f3030303000535044205350

vml.0100000000303230305f303030305f303030305f3030303000535044205350:1

vml.01000000003232303232313130383831393920202020202020573830305320

vml.01000000003232303232313130383831393920202020202020573830305320:1

vml.01000000003232303232313130383831393920202020202020573830305320:5

vml.01000000003232303232313130383831393920202020202020573830305320:6

vml.01000000003232303232313130383831393920202020202020573830305320:7

vml.01000000003232303232313130383831393920202020202020573830305320:8

vml.05c56298c6cae09f64ef49957d1d7af93c98b2a5792c87d191b47f87ea5b89f9e2

vml.05c56298c6cae09f64ef49957d1d7af93c98b2a5792c87d191b47f87ea5b89f9e2:1

[root@esxi:~] voma -m vmfs -f check -N -d /vmfs/devices/disks/vml.05c56298c6cae09f64ef49957d1d7af93c98b2a5792c87d191b47f87ea5b89f9e2:1

Running VMFS Checker version 2.1 in check mode

Initializing LVM metadata, Basic Checks will be done

Checking for filesystem activity

Performing filesystem liveness check..|Scanning for VMFS-6 host activity (4096 bytes/HB, 1024 HBs).

Reservation Support is not present for NVME devices

Performing filesystem liveness check..|

########################################################################

# Warning !!! #

# #

# You are about to execute VOMA without device reservation. #

# Any access to this device from other hosts when VOMA is running #

# can cause severe data corruption #

# #

# This mode is supported only under VMware support supervision. #

########################################################################

Do you want to continue (Y/N)?

0) _Yes

1) _No

Select a number from 0-1: 0

Phase 1: Checking VMFS header and resource files

Detected VMFS-6 file system (labeled:'nvme2tb') with UUID:68e4cab1-0a865c28-49c0-04ab182311d3, Version 6:82

Phase 2: Checking VMFS heartbeat region

Phase 3: Checking all file descriptors.

Phase 4: Checking pathname and connectivity.

Phase 5: Checking resource reference counts.

Total Errors Found: 0

[root@esxi:~]
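The only line that really matters in the voma output above is the final "Total Errors Found" summary. As a minimal sketch, assuming the voma output has been saved to a file (the file name voma-output.txt and the function name voma_error_count are hypothetical, not part of voma), the count can be pulled out like this:

```shell
#!/bin/sh
# Extract the error count from VOMA's "Total Errors Found: N" summary line.
# Reads saved VOMA output on stdin; prints N, or nothing if the line is absent.
voma_error_count() {
    sed -n 's/^Total Errors Found: *\([0-9][0-9]*\).*$/\1/p' | tail -n 1
}

# Hypothetical usage with a saved copy of the session output:
#   voma_error_count < voma-output.txt
```

A result of 0 only says the VMFS metadata is consistent. It does not say anything about whether the volume is mounted as a datastore.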

So the file system still has not been added?

[root@esxi:~] esxcli storage filesystem rescan

[root@esxi:~] esxcli storage filesystem list

Mount Point Volume Name UUID Mounted Type Size Free

------------------------------------------------- ------------------------------------------ ----------------------------------- ------- ------ ------------ ------------

/vmfs/volumes/68cad69a-e82d8e40-5b65-5bb7fb6107f2 datastore1 68cad69a-e82d8e40-5b65-5bb7fb6107f2 true VMFS-6 118380036096 91743059968

/vmfs/volumes/68cad69a-d23fb18e-73e5-5bb7fb6107f2 OSDATA-68cad69a-d23fb18e-73e5-5bb7fb6107f2 68cad69a-d23fb18e-73e5-5bb7fb6107f2 true VMFSOS 128580583424 125363552256

/vmfs/volumes/fa8a25f7-ba40ebee-45ac-f419c9f388e0 BOOTBANK1 fa8a25f7-ba40ebee-45ac-f419c9f388e0 true vfat 4293591040 4022075392

/vmfs/volumes/f43b0450-7e4d6762-c6be-52e6552cc1f8 BOOTBANK2 f43b0450-7e4d6762-c6be-52e6552cc1f8 true vfat 4293591040 4021354496

[root@esxi:~]
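Scanning the list above by eye works, but the check can also be scripted. The following is a rough sketch that assumes volume names contain no spaces, so that the Volume Name is the second whitespace-separated column. The function names fs_has_label and bytes_to_gib are my own, and the second one is only there because the Size and Free columns are raw byte counts.

```shell
#!/bin/sh
# Succeeds if a row of `esxcli storage filesystem list` output (read from stdin)
# has LABEL in its Volume Name column. Assumes labels contain no whitespace.
fs_has_label() {
    awk -v label="$1" '$2 == label { found = 1 } END { exit (found ? 0 : 1) }'
}

# Convert a byte count (as shown in the Size/Free columns) to GiB, two decimals.
bytes_to_gib() {
    awk -v b="$1" 'BEGIN { printf "%.2f\n", b / (1024 * 1024 * 1024) }'
}

# Hypothetical usage on the ESXi host:
#   esxcli storage filesystem list | fs_has_label nvme2tb || echo "nvme2tb is not mounted"
#   bytes_to_gib 118380036096
```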

Is there a snapshot?

[root@esxi:~] esxcli storage vmfs snapshot list

68e4cab1-0a865c28-49c0-04ab182311d3

Volume Name: nvme2tb

VMFS UUID: 68e4cab1-0a865c28-49c0-04ab182311d3

Can mount: true

Reason for un-mountability:

Can resignature: true

Reason for non-resignaturability:

Unresolved Extent Count: 1

[root@esxi:~]
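The snapshot list output above shows that ESXi is treating the nvme2tb volume as an unresolved VMFS copy, and the "Can mount" and "Can resignature" fields say which ways out are available. As a small sketch (the function name snapshot_field is hypothetical), a single field can be read from that output like this:

```shell
#!/bin/sh
# Print the value of one "Key: value" field from
# `esxcli storage vmfs snapshot list` output read on stdin.
# Leading indentation is ignored; the key must match exactly.
snapshot_field() {
    awk -v key="$1" '
        {
            line = $0
            sub(/^[ \t]+/, "", line)          # drop leading indentation
            prefix = key ":"
            if (index(line, prefix) == 1) {   # line starts with "Key:"
                val = substr(line, length(prefix) + 1)
                sub(/^[ \t]+/, "", val)       # drop space after the colon
                print val
            }
        }'
}

# Hypothetical usage on the ESXi host:
#   esxcli storage vmfs snapshot list | snapshot_field "Can resignature"
# Alternative I did not try here: mounting the copy while keeping its signature,
#   esxcli storage vmfs snapshot mount --volume-label=nvme2tb
```

When both fields are true, as they are here, either approach should be possible. Resignaturing writes a new UUID and gives the volume a snap- prefixed name, while the mount variant is meant to keep the existing label and UUID.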

I tried resignaturing it

[root@esxi:~] esxcli storage vmfs snapshot resignature --volume-label=nvme2tb

[root@esxi:~] esxcli storage vmfs snapshot list

[root@esxi:~] esxcli storage filesystem list

Mount Point Volume Name UUID Mounted Type Size Free

------------------------------------------------- ------------------------------------------ ----------------------------------- ------- ------ ------------- -------------

/vmfs/volumes/68cad69a-e82d8e40-5b65-5bb7fb6107f2 datastore1 68cad69a-e82d8e40-5b65-5bb7fb6107f2 true VMFS-6 118380036096 91743059968

/vmfs/volumes/68e5b682-56352c06-7c60-04ab182311d3 snap-444b0642-nvme2tb 68e5b682-56352c06-7c60-04ab182311d3 true VMFS-6 2048162529280 1222844088320

/vmfs/volumes/68cad69a-d23fb18e-73e5-5bb7fb6107f2 OSDATA-68cad69a-d23fb18e-73e5-5bb7fb6107f2 68cad69a-d23fb18e-73e5-5bb7fb6107f2 true VMFSOS 128580583424 125363552256

/vmfs/volumes/fa8a25f7-ba40ebee-45ac-f419c9f388e0 BOOTBANK1 fa8a25f7-ba40ebee-45ac-f419c9f388e0 true vfat 4293591040 4022075392

/vmfs/volumes/f43b0450-7e4d6762-c6be-52e6552cc1f8 BOOTBANK2 f43b0450-7e4d6762-c6be-52e6552cc1f8 true vfat 4293591040 4021354496

[root@esxi:~]

Has the snapshot region now been recognized as a file system?

Is it just an ordinary datastore that happens to have "snap" in its name?

Check with voma once more

[root@esxi:~] voma -m vmfs -f check -N -d /vmfs/devices/disks/vml.05c56298c6cae09f64ef49957d1d7af93c98b2a5792c87d191b47f87ea5b89f9e2:1

Running VMFS Checker version 2.1 in check mode

Initializing LVM metadata, Basic Checks will be done

Checking for filesystem activity

Performing filesystem liveness check..|Scanning for VMFS-6 host activity (4096 bytes/HB, 1024 HBs).

Reservation Support is not present for NVME devices

Performing filesystem liveness check..|

########################################################################

# Warning !!! #

# #

# You are about to execute VOMA without device reservation. #

# Any access to this device from other hosts when VOMA is running #

# can cause severe data corruption #

# #

# This mode is supported only under VMware support supervision. #

########################################################################

Do you want to continue (Y/N)?

0) _Yes

1) _No

Select a number from 0-1: 0

Phase 1: Checking VMFS header and resource files

Detected VMFS-6 file system (labeled:'snap-444b0642-nvme2tb') with UUID:68e5b682-56352c06-7c60-04ab182311d3, Version 6:82

Phase 2: Checking VMFS heartbeat region

Phase 3: Checking all file descriptors.

Phase 4: Checking pathname and connectivity.

Phase 5: Checking resource reference counts.

Total Errors Found: 0

[root@esxi:~] esxcli storage vmfs snapshot list

[root@esxi:~] esxcli storage filesystem list

Mount Point Volume Name UUID Mounted Type Size Free

------------------------------------------------- ------------------------------------------ ----------------------------------- ------- ------ ------------- -------------

/vmfs/volumes/68cad69a-e82d8e40-5b65-5bb7fb6107f2 datastore1 68cad69a-e82d8e40-5b65-5bb7fb6107f2 true VMFS-6 118380036096 91743059968

/vmfs/volumes/68e5b682-56352c06-7c60-04ab182311d3 snap-444b0642-nvme2tb 68e5b682-56352c06-7c60-04ab182311d3 true VMFS-6 2048162529280 1222844088320

/vmfs/volumes/68cad69a-d23fb18e-73e5-5bb7fb6107f2 OSDATA-68cad69a-d23fb18e-73e5-5bb7fb6107f2 68cad69a-d23fb18e-73e5-5bb7fb6107f2 true VMFSOS 128580583424 125363552256

/vmfs/volumes/fa8a25f7-ba40ebee-45ac-f419c9f388e0 BOOTBANK1 fa8a25f7-ba40ebee-45ac-f419c9f388e0 true vfat 4293591040 4022075392

/vmfs/volumes/f43b0450-7e4d6762-c6be-52e6552cc1f8 BOOTBANK2 f43b0450-7e4d6762-c6be-52e6552cc1f8 true vfat 4293591040 4021354496

[root@esxi:~]

Nothing in particular has changed

Checking lockmode shows that the new volume now appears there as well

[root@esxi:~] esxcli storage vmfs lockmode list

Volume Name UUID Type Locking Mode ATS Compatible ATS Upgrade Modes ATS Incompatibility Reason

------------------------------------------ ----------------------------------- -------- ------------ -------------- ----------------- --------------------------

datastore1 68cad69a-e82d8e40-5b65-5bb7fb6107f2 VMFS-6 ATS+SCSI false None Device does not support ATS

snap-444b0642-nvme2tb 68e5b682-56352c06-7c60-04ab182311d3 VMFS-6 ATS+SCSI false None Device does not support ATS

OSDATA-68cad69a-d23fb18e-73e5-5bb7fb6107f2 68cad69a-d23fb18e-73e5-5bb7fb6107f2 Non-VMFS ATS+SCSI false None Device does not support ATS

[root@esxi:~]

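For the time being, I rebooted ESXi

Even after the reboot the volume was recognized in the same way, so I decided to rename the snap volume to an ordinary name and keep using it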
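The rename can be done from the Host Client, but for reference here is a rough command-line sketch. It assumes the resignatured name always has the form snap-<8 hex digits>-<original label>, as it did here, and that vim-cmd's hostsvc/datastore/rename subcommand is available on the host. The function name original_label is hypothetical.

```shell
#!/bin/sh
# Print the original label from a resignatured datastore name of the form
# snap-<8 hex digits>-<label>. Names that do not match are printed unchanged.
original_label() {
    printf '%s\n' "$1" | sed 's/^snap-[0-9a-f]\{8\}-//'
}

# Hypothetical usage on the ESXi host (assumes the vim-cmd rename subcommand):
#   old=snap-444b0642-nvme2tb
#   vim-cmd hostsvc/datastore/rename "$old" "$(original_label "$old")"
```

Only the label changes with a rename. The UUID under /vmfs/volumes stays at the new value written by the resignature (68e5b682-...), not the original one (68e4cab1-...).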