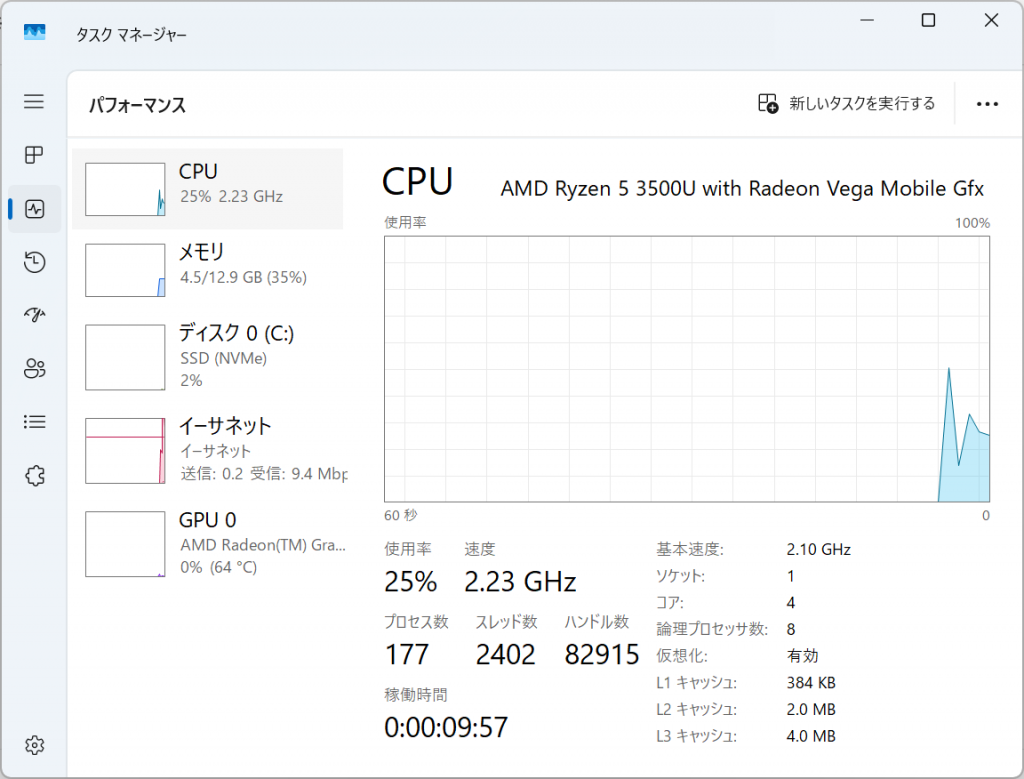

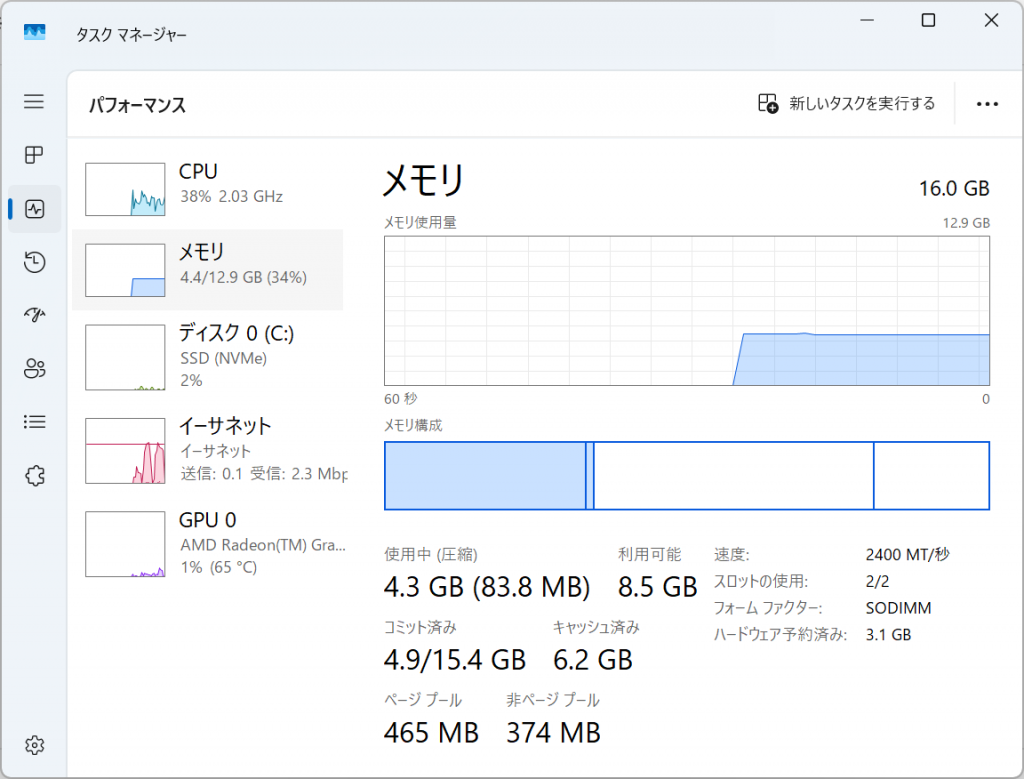

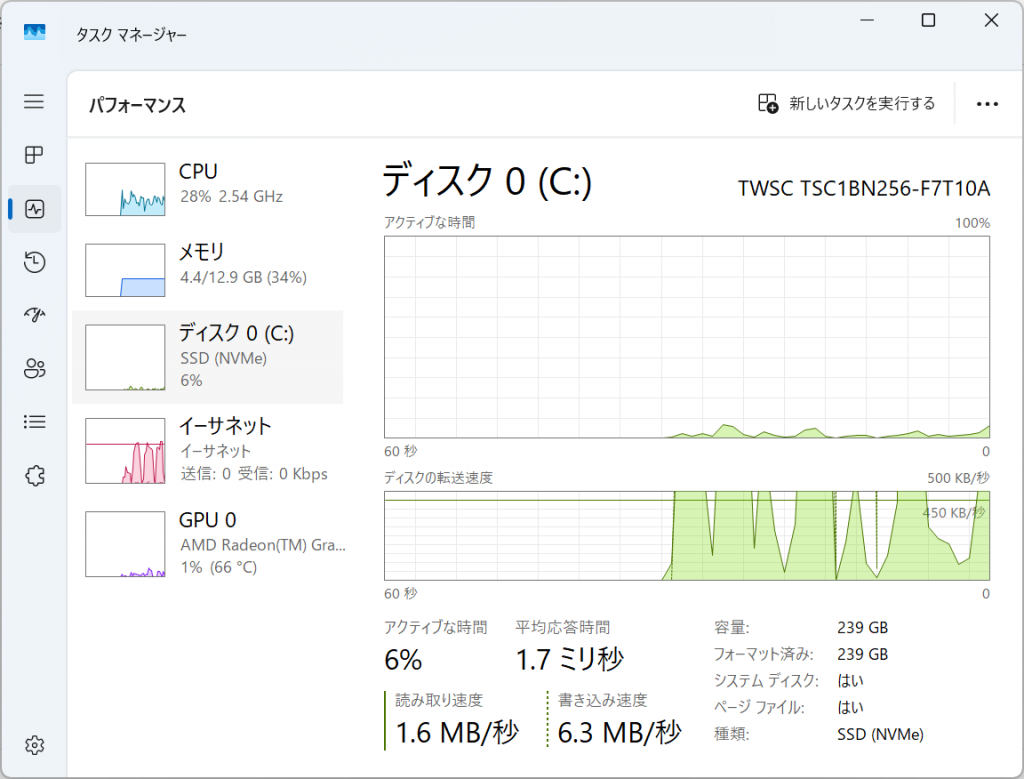

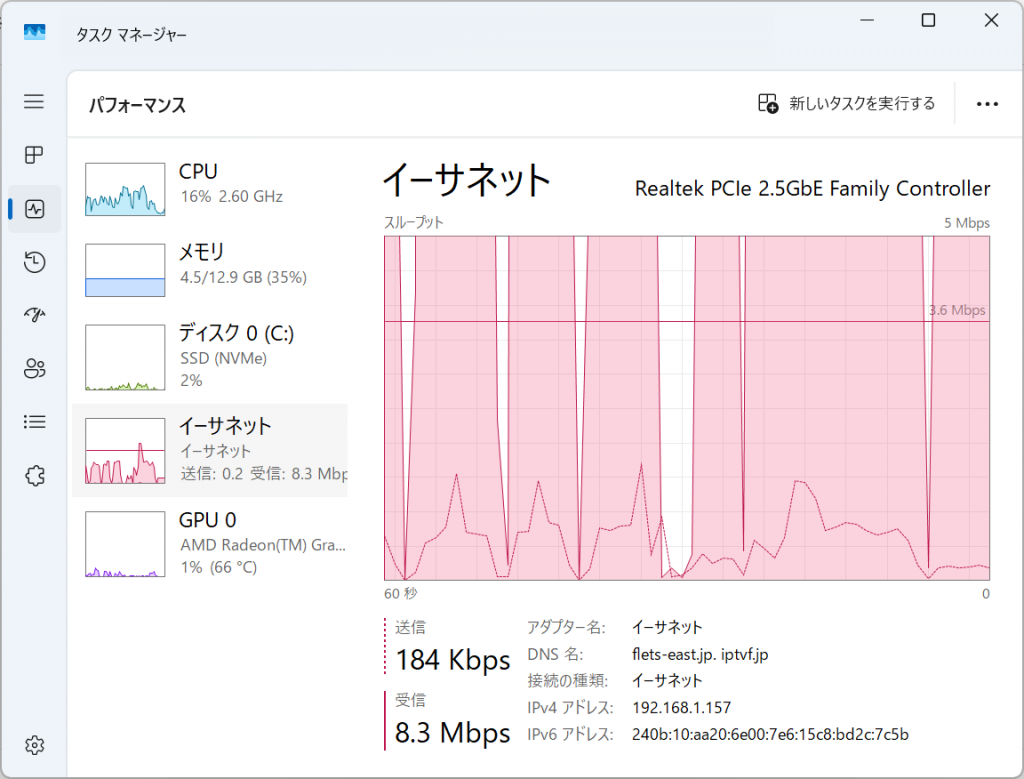

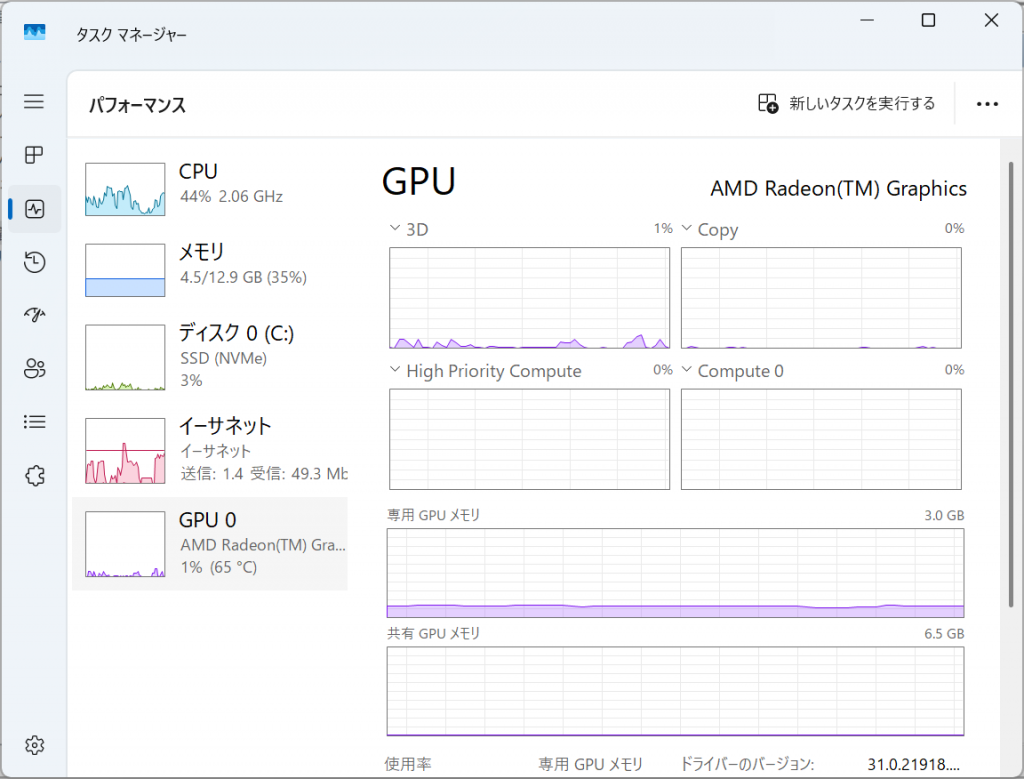

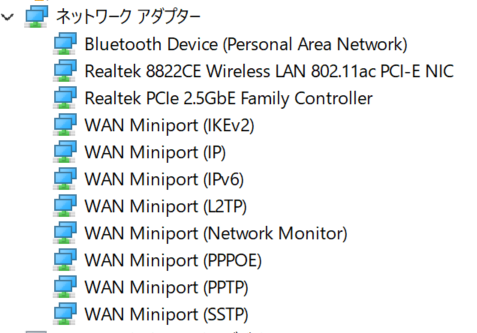

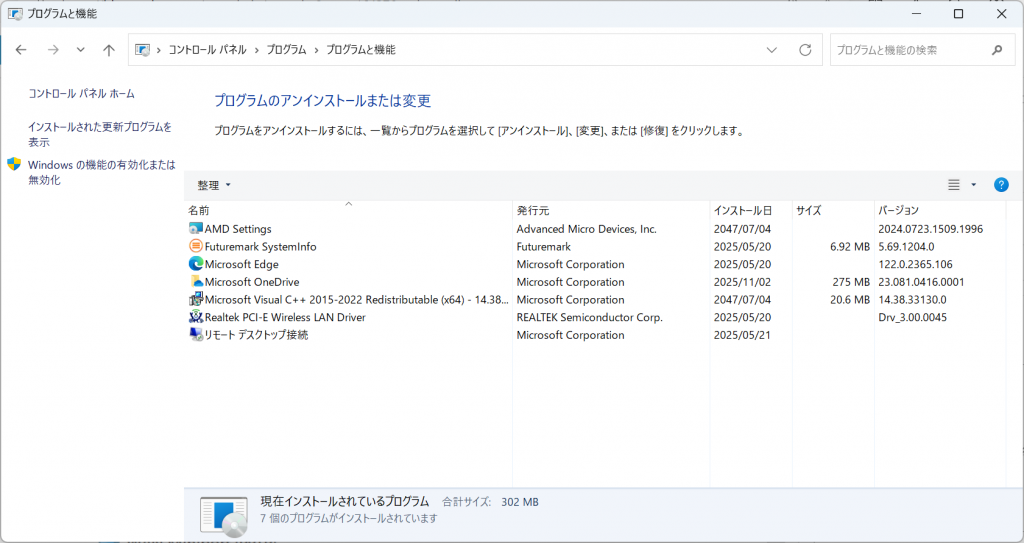

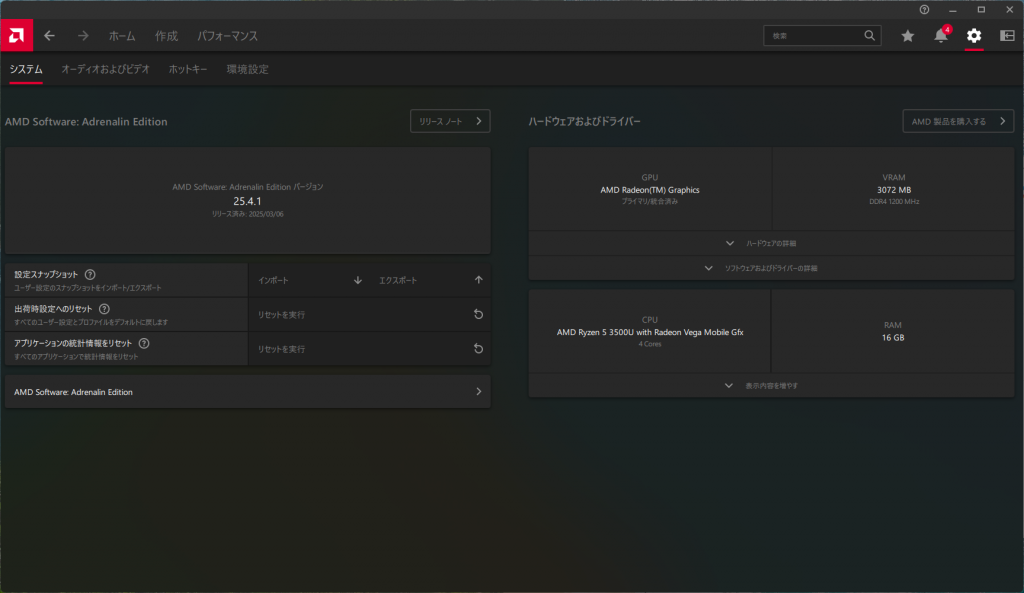

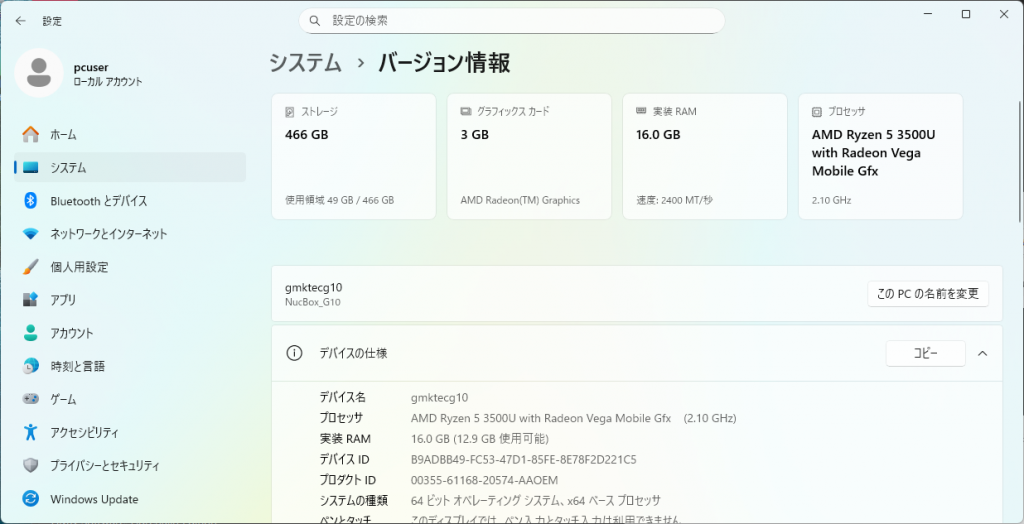

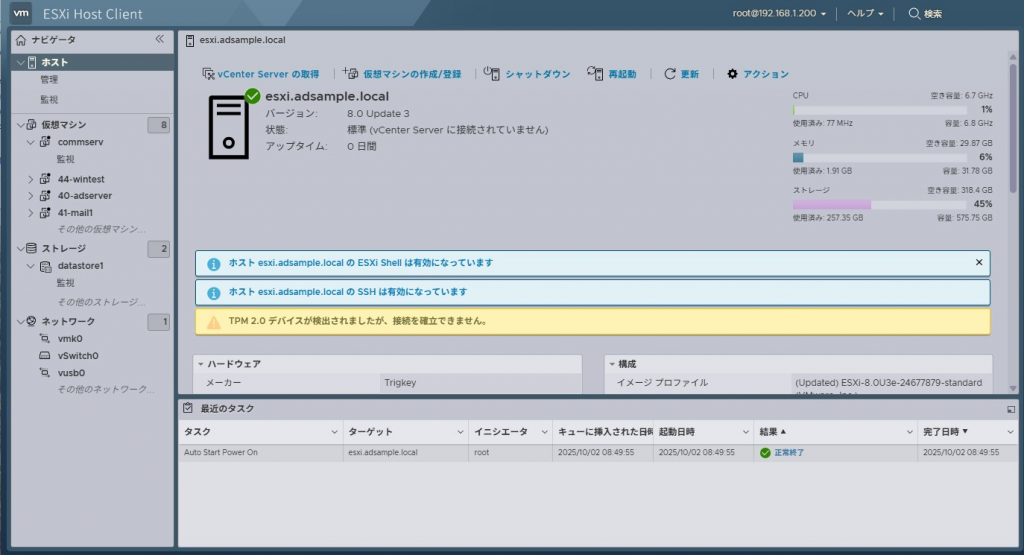

In a vSphere ESXi 8.0 Free test environment built on a GMKtec G10 mini with a Ryzen 5 3500U, I created a cluster of three Proxmox VE 9.1 virtual machines and ran an HA test. The test failed on one of the three nodes.

To give the conclusion first: the problem surfaced in how virtual machines behaved on Proxmox VE, but the actual cause was the way vSphere ESXi builds its virtual CPUs.

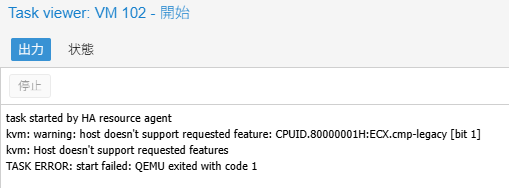

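Here is how it surfaced. With HA configured on Proxmox VE, stopping the server that was running a virtual machine should cause that VM to be restarted on one of the servers still running. Instead, the start failed with the following error.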

task started by HA resource agent

kvm: warning: host doesn't support requested feature: CPUID.80000001H:ECX.cmp-legacy [bit 1]

kvm: Host doesn't support requested features

TASK ERROR: start failed: QEMU exited with code 1
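When this kind of failure shows up in a task log, it can help to pull out exactly which CPUID feature QEMU is complaining about. The following is a minimal sketch that assumes the warning always has the same shape as the line above; the function name `missing_features` and the regular expression are my own illustration, not part of Proxmox VE or QEMU.

```python
import re

# Pattern modeled on the single warning line seen in the task log above.
# Assumption: other missing features are reported in the same format.
WARNING_RE = re.compile(
    r"host doesn't support requested feature: "
    r"CPUID\.(?P<leaf>[0-9A-Fa-f]+)H:(?P<reg>E[A-D]X)\."
    r"(?P<name>[\w.-]+) \[bit (?P<bit>\d+)\]"
)


def missing_features(log_text):
    """Return one dict per missing CPU feature reported in the log text."""
    found = []
    for m in WARNING_RE.finditer(log_text):
        found.append({
            "leaf": int(m.group("leaf"), 16),   # CPUID leaf, e.g. 0x80000001
            "register": m.group("reg"),         # EAX/EBX/ECX/EDX
            "name": m.group("name"),            # feature name as QEMU prints it
            "bit": int(m.group("bit")),         # bit position in the register
        })
    return found


# The task log from this post, pasted as-is.
SAMPLE_LOG = """\
task started by HA resource agent
kvm: warning: host doesn't support requested feature: CPUID.80000001H:ECX.cmp-legacy [bit 1]
kvm: Host doesn't support requested features
TASK ERROR: start failed: QEMU exited with code 1
"""

if __name__ == "__main__":
    for feat in missing_features(SAMPLE_LOG):
        print(f"{feat['name']}: leaf {feat['leaf']:#x}, "
              f"{feat['register']} bit {feat['bit']}")
```

Note that QEMU spells the feature `cmp-legacy` with a hyphen, while /proc/cpuinfo on Linux shows it as `cmp_legacy`.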

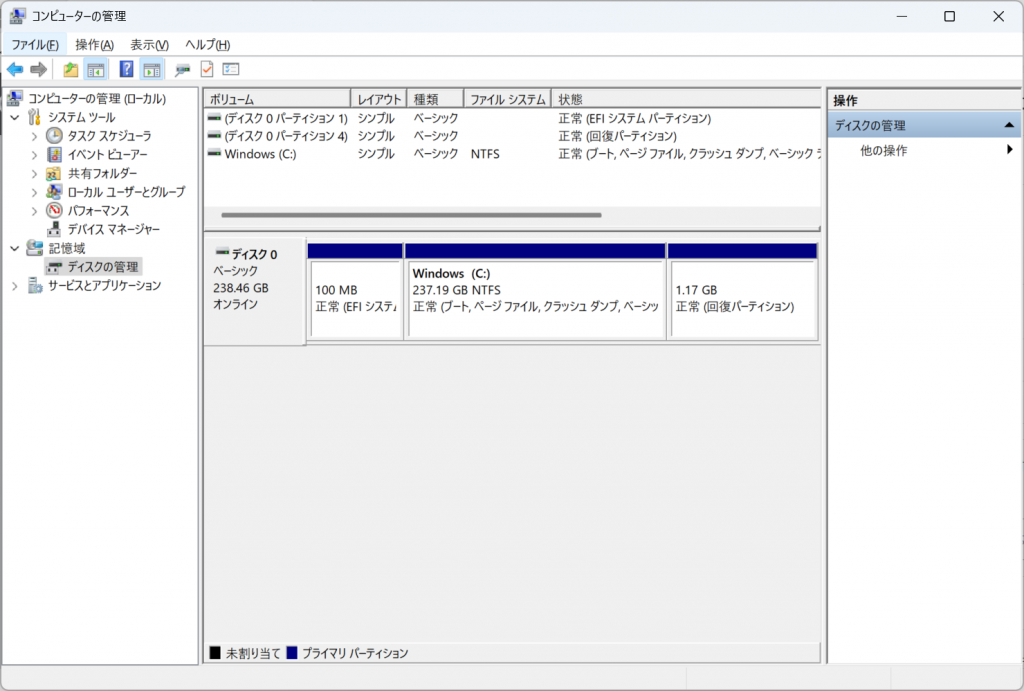

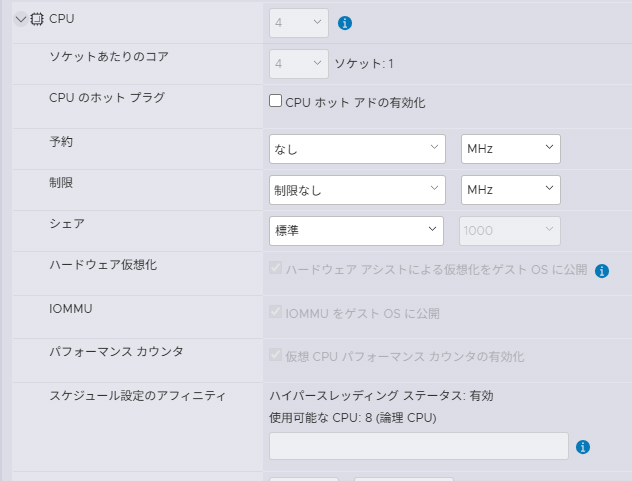

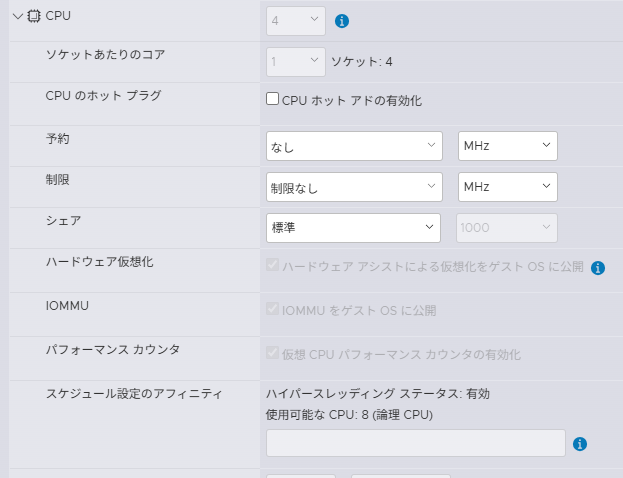

All three virtual machines were supposed to be set to 4 vCPUs... or so I thought, but when I rechecked the CPU settings, the socket counts differed.

proxmox1 and proxmox2: 1 socket, 4 cores

proxmox3: 4 sockets, 1 core each

Possibly because of this, /proc/cpuinfo differs between the nodes...

/proc/cpuinfo on proxmox1 and proxmox2

root@proxmox2:~# ssh proxmox1 cat /proc/cpuinfo

processor : 0

vendor_id : AuthenticAMD

cpu family : 23

model : 24

model name : AMD Ryzen 5 3500U with Radeon Vega Mobile Gfx

stepping : 1

microcode : 0x8108109

cpu MHz : 2100.000

cache size : 512 KB

physical id : 0

siblings : 4

core id : 0

cpu cores : 4

apicid : 0

initial apicid : 0

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid extd_apicid tsc_known_freq pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext perfctr_core ssbd ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 clzero arat npt svm_lock nrip_save vmcb_clean flushbyasid decodeassists overflow_recov succor

bugs : fxsave_leak sysret_ss_attrs null_seg spectre_v1 spectre_v2 spec_store_bypass retbleed smt_rsb srso div0 ibpb_no_ret

bogomips : 4200.00

TLB size : 2560 4K pages

clflush size : 64

cache_alignment : 64

address sizes : 45 bits physical, 48 bits virtual

power management:

processor : 1

vendor_id : AuthenticAMD

cpu family : 23

model : 24

model name : AMD Ryzen 5 3500U with Radeon Vega Mobile Gfx

stepping : 1

microcode : 0x8108109

cpu MHz : 2100.000

cache size : 512 KB

physical id : 0

siblings : 4

core id : 1

cpu cores : 4

apicid : 1

initial apicid : 1

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid extd_apicid tsc_known_freq pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext perfctr_core ssbd ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 clzero arat npt svm_lock nrip_save vmcb_clean flushbyasid decodeassists overflow_recov succor

bugs : fxsave_leak sysret_ss_attrs null_seg spectre_v1 spectre_v2 spec_store_bypass retbleed smt_rsb srso div0 ibpb_no_ret

bogomips : 4200.00

TLB size : 2560 4K pages

clflush size : 64

cache_alignment : 64

address sizes : 45 bits physical, 48 bits virtual

power management:

processor : 2

vendor_id : AuthenticAMD

cpu family : 23

model : 24

model name : AMD Ryzen 5 3500U with Radeon Vega Mobile Gfx

stepping : 1

microcode : 0x8108109

cpu MHz : 2100.000

cache size : 512 KB

physical id : 0

siblings : 4

core id : 2

cpu cores : 4

apicid : 2

initial apicid : 2

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid extd_apicid tsc_known_freq pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext perfctr_core ssbd ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 clzero arat npt svm_lock nrip_save vmcb_clean flushbyasid decodeassists overflow_recov succor

bugs : fxsave_leak sysret_ss_attrs null_seg spectre_v1 spectre_v2 spec_store_bypass retbleed smt_rsb srso div0 ibpb_no_ret

bogomips : 4200.00

TLB size : 2560 4K pages

clflush size : 64

cache_alignment : 64

address sizes : 45 bits physical, 48 bits virtual

power management:

processor : 3

vendor_id : AuthenticAMD

cpu family : 23

model : 24

model name : AMD Ryzen 5 3500U with Radeon Vega Mobile Gfx

stepping : 1

microcode : 0x8108109

cpu MHz : 2100.000

cache size : 512 KB

physical id : 0

siblings : 4

core id : 3

cpu cores : 4

apicid : 3

initial apicid : 3

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid extd_apicid tsc_known_freq pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext perfctr_core ssbd ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 clzero arat npt svm_lock nrip_save vmcb_clean flushbyasid decodeassists overflow_recov succor

bugs : fxsave_leak sysret_ss_attrs null_seg spectre_v1 spectre_v2 spec_store_bypass retbleed smt_rsb srso div0 ibpb_no_ret

bogomips : 4200.00

TLB size : 2560 4K pages

clflush size : 64

cache_alignment : 64

address sizes : 45 bits physical, 48 bits virtual

power management:

root@proxmox2:~#

/proc/cpuinfo on proxmox3

root@proxmox2:~# ssh proxmox3 cat /proc/cpuinfo

processor : 0

vendor_id : AuthenticAMD

cpu family : 23

model : 24

model name : AMD Ryzen 5 3500U with Radeon Vega Mobile Gfx

stepping : 1

microcode : 0x8108109

cpu MHz : 2100.000

cache size : 512 KB

physical id : 0

siblings : 1

core id : 0

cpu cores : 1

apicid : 0

initial apicid : 0

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid extd_apicid tsc_known_freq pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext perfctr_core ssbd ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 clzero arat npt svm_lock nrip_save vmcb_clean flushbyasid decodeassists overflow_recov succor

bugs : fxsave_leak sysret_ss_attrs null_seg spectre_v1 spectre_v2 spec_store_bypass retbleed smt_rsb srso div0 ibpb_no_ret spectre_v2_user

bogomips : 4200.00

TLB size : 2560 4K pages

clflush size : 64

cache_alignment : 64

address sizes : 45 bits physical, 48 bits virtual

power management:

processor : 1

vendor_id : AuthenticAMD

cpu family : 23

model : 24

model name : AMD Ryzen 5 3500U with Radeon Vega Mobile Gfx

stepping : 1

microcode : 0x8108109

cpu MHz : 2100.000

cache size : 512 KB

physical id : 2

siblings : 1

core id : 0

cpu cores : 1

apicid : 2

initial apicid : 2

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid extd_apicid tsc_known_freq pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext perfctr_core ssbd ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 clzero arat npt svm_lock nrip_save vmcb_clean flushbyasid decodeassists overflow_recov succor

bugs : fxsave_leak sysret_ss_attrs null_seg spectre_v1 spectre_v2 spec_store_bypass retbleed smt_rsb srso div0 ibpb_no_ret spectre_v2_user

bogomips : 4200.00

TLB size : 2560 4K pages

clflush size : 64

cache_alignment : 64

address sizes : 45 bits physical, 48 bits virtual

power management:

processor : 2

vendor_id : AuthenticAMD

cpu family : 23

model : 24

model name : AMD Ryzen 5 3500U with Radeon Vega Mobile Gfx

stepping : 1

microcode : 0x8108109

cpu MHz : 2100.000

cache size : 512 KB

physical id : 4

siblings : 1

core id : 0

cpu cores : 1

apicid : 4

initial apicid : 4

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid extd_apicid tsc_known_freq pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext perfctr_core ssbd ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 clzero arat npt svm_lock nrip_save vmcb_clean flushbyasid decodeassists overflow_recov succor

bugs : fxsave_leak sysret_ss_attrs null_seg spectre_v1 spectre_v2 spec_store_bypass retbleed smt_rsb srso div0 ibpb_no_ret spectre_v2_user

bogomips : 4200.00

TLB size : 2560 4K pages

clflush size : 64

cache_alignment : 64

address sizes : 45 bits physical, 48 bits virtual

power management:

processor : 3

vendor_id : AuthenticAMD

cpu family : 23

model : 24

model name : AMD Ryzen 5 3500U with Radeon Vega Mobile Gfx

stepping : 1

microcode : 0x8108109

cpu MHz : 2100.000

cache size : 512 KB

physical id : 6

siblings : 1

core id : 0

cpu cores : 1

apicid : 6

initial apicid : 6

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid extd_apicid tsc_known_freq pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext perfctr_core ssbd ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 clzero arat npt svm_lock nrip_save vmcb_clean flushbyasid decodeassists overflow_recov succor

bugs : fxsave_leak sysret_ss_attrs null_seg spectre_v1 spectre_v2 spec_store_bypass retbleed smt_rsb srso div0 ibpb_no_ret spectre_v2_user

bogomips : 4200.00

TLB size : 2560 4K pages

clflush size : 64

cache_alignment : 64

address sizes : 45 bits physical, 48 bits virtual

power management:

root@proxmox2:~#

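The flags on proxmox3 are missing "ht" and "cmp_legacy".

In addition, the bugs line on proxmox3 contains "spectre_v2_user".

Since proxmox3 has no multiple cores/threads inside a single CPU, the hyper-threading capability does not exist there, which appears to be why these flags were dropped.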
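Spotting two missing words in a flags line that long by eye is tedious, so a set difference is easier. This is a minimal sketch; the helper names `cpu_field` and `compare_field` are hypothetical, and the sample strings are heavily abbreviated stand-ins for the real output above, not the full flag lists.

```python
def cpu_field(cpuinfo_text, field):
    """Return the tokens of the first `field` line (e.g. "flags") as a set."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == field:
            return set(value.split())
    return set()


def compare_field(text_a, text_b, field="flags"):
    """Return (tokens only in A, tokens only in B) for the given field."""
    a = cpu_field(text_a, field)
    b = cpu_field(text_b, field)
    return sorted(a - b), sorted(b - a)


# Abbreviated samples: only a handful of flags from each node are kept.
NODE_A = (
    "flags : fpu sse2 ht syscall lahf_lm cmp_legacy svm\n"
    "bugs : spectre_v1 spectre_v2\n"
)
NODE_B = (
    "flags : fpu sse2 syscall lahf_lm svm\n"
    "bugs : spectre_v1 spectre_v2 spectre_v2_user\n"
)

if __name__ == "__main__":
    for field in ("flags", "bugs"):
        only_a, only_b = compare_field(NODE_A, NODE_B, field)
        print(f"{field}: only in A = {only_a}, only in B = {only_b}")
```

In practice the two texts would be the saved output of `ssh proxmox1 cat /proc/cpuinfo` and `ssh proxmox3 cat /proc/cpuinfo`. Since every processor entry on a node carries the same flags here, comparing only the first entry is enough for this case.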

So I shut down the proxmox3 virtual machine, changed its CPU settings to 1 socket with 4 cores to match the others, and confirmed that "ht" and "cmp_legacy" had been added to flags.

root@proxmox2:~# ssh proxmox3 cat /proc/cpuinfo

processor : 0

vendor_id : AuthenticAMD

cpu family : 23

model : 24

model name : AMD Ryzen 5 3500U with Radeon Vega Mobile Gfx

stepping : 1

microcode : 0x8108109

cpu MHz : 2100.000

cache size : 512 KB

physical id : 0

siblings : 4

core id : 0

cpu cores : 4

apicid : 0

initial apicid : 0

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid extd_apicid tsc_known_freq pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext perfctr_core ssbd ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 clzero arat npt svm_lock nrip_save vmcb_clean flushbyasid decodeassists overflow_recov succor

bugs : fxsave_leak sysret_ss_attrs null_seg spectre_v1 spectre_v2 spec_store_bypass retbleed smt_rsb srso div0 ibpb_no_ret spectre_v2_user

bogomips : 4200.00

TLB size : 2560 4K pages

clflush size : 64

cache_alignment : 64

address sizes : 45 bits physical, 48 bits virtual

power management:

processor : 1

vendor_id : AuthenticAMD

cpu family : 23

model : 24

model name : AMD Ryzen 5 3500U with Radeon Vega Mobile Gfx

stepping : 1

microcode : 0x8108109

cpu MHz : 2100.000

cache size : 512 KB

physical id : 0

siblings : 4

core id : 1

cpu cores : 4

apicid : 1

initial apicid : 1

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid extd_apicid tsc_known_freq pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext perfctr_core ssbd ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 clzero arat npt svm_lock nrip_save vmcb_clean flushbyasid decodeassists overflow_recov succor

bugs : fxsave_leak sysret_ss_attrs null_seg spectre_v1 spectre_v2 spec_store_bypass retbleed smt_rsb srso div0 ibpb_no_ret spectre_v2_user

bogomips : 4200.00

TLB size : 2560 4K pages

clflush size : 64

cache_alignment : 64

address sizes : 45 bits physical, 48 bits virtual

power management:

processor : 2

vendor_id : AuthenticAMD

cpu family : 23

model : 24

model name : AMD Ryzen 5 3500U with Radeon Vega Mobile Gfx

stepping : 1

microcode : 0x8108109

cpu MHz : 2100.000

cache size : 512 KB

physical id : 0

siblings : 4

core id : 2

cpu cores : 4

apicid : 2

initial apicid : 2

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid extd_apicid tsc_known_freq pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext perfctr_core ssbd ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 clzero arat npt svm_lock nrip_save vmcb_clean flushbyasid decodeassists overflow_recov succor

bugs : fxsave_leak sysret_ss_attrs null_seg spectre_v1 spectre_v2 spec_store_bypass retbleed smt_rsb srso div0 ibpb_no_ret spectre_v2_user

bogomips : 4200.00

TLB size : 2560 4K pages

clflush size : 64

cache_alignment : 64

address sizes : 45 bits physical, 48 bits virtual

power management:

processor : 3

vendor_id : AuthenticAMD

cpu family : 23

model : 24

model name : AMD Ryzen 5 3500U with Radeon Vega Mobile Gfx

stepping : 1

microcode : 0x8108109

cpu MHz : 2100.000

cache size : 512 KB

physical id : 0

siblings : 4

core id : 3

cpu cores : 4

apicid : 3

initial apicid : 3

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext fxsr_opt pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid extd_apicid tsc_known_freq pni pclmulqdq ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy svm extapic cr8_legacy abm sse4a misalignsse 3dnowprefetch osvw topoext perfctr_core ssbd ibpb vmmcall fsgsbase bmi1 avx2 smep bmi2 rdseed adx smap clflushopt sha_ni xsaveopt xsavec xgetbv1 clzero arat npt svm_lock nrip_save vmcb_clean flushbyasid decodeassists overflow_recov succor

bugs : fxsave_leak sysret_ss_attrs null_seg spectre_v1 spectre_v2 spec_store_bypass retbleed smt_rsb srso div0 ibpb_no_ret spectre_v2_user

bogomips : 4200.00

TLB size : 2560 4K pages

clflush size : 64

cache_alignment : 64

address sizes : 45 bits physical, 48 bits virtual

power management:

root@proxmox2:~#

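So, when configuring "4 vCPUs" on vSphere ESXi 8.0, the CPU features presented to the guest differ between the "1 CPU, 4 cores" setting and the "4 CPUs, 1 core" setting, and some behavior changes as a result.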
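To catch this kind of mismatch before running an HA test, the topology each node sees can be summarized from /proc/cpuinfo. The sketch below is only an illustration: `topology` and `sample` are hypothetical helpers, and the sample data keeps just the `processor`, `physical id`, and `cpu cores` fields with the values from the outputs in this post.

```python
def topology(cpuinfo_text):
    """Summarize vCPU count, socket count, and cores per socket."""
    processors = 0
    physical_ids = set()
    cores_per_socket = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # blank separator lines between processor entries
        key, value = key.strip(), value.strip()
        if key == "processor":
            processors += 1
        elif key == "physical id":
            physical_ids.add(value)
        elif key == "cpu cores":
            cores_per_socket.add(int(value))
    return {
        "vcpus": processors,
        "sockets": len(physical_ids),
        "cores_per_socket": sorted(cores_per_socket),
    }


def sample(entries):
    """Build trimmed cpuinfo text from (processor, physical id, cpu cores)."""
    return "\n".join(
        f"processor : {p}\nphysical id : {s}\ncpu cores : {c}\n"
        for p, s, c in entries
    )


# Values taken from the outputs above: proxmox1/2 versus the original proxmox3.
ONE_SOCKET = sample([(0, 0, 4), (1, 0, 4), (2, 0, 4), (3, 0, 4)])
FOUR_SOCKETS = sample([(0, 0, 1), (1, 2, 1), (2, 4, 1), (3, 6, 1)])

if __name__ == "__main__":
    print("1 socket x 4 cores:", topology(ONE_SOCKET))
    print("4 sockets x 1 core:", topology(FOUR_SOCKETS))
```

Both layouts report 4 vCPUs, so comparing the vCPU count alone would not have revealed the difference; the socket count and cores per socket are what need to match across nodes.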