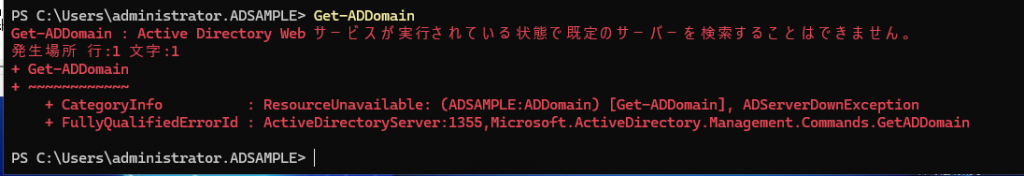

In an Active Directory environment built with Samba, I ran the PowerShell Get-ADDomain command from a Windows Server, and it failed with an error.

PS C:\Users\administrator.ADSAMPLE> Get-ADDomain

Get-ADDomain : Unable to find a default server with Active Directory Web Services running.

At line:1 char:1

+ Get-ADDomain

+ ~~~~~~~~~~~~

+ CategoryInfo : ResourceUnavailable: (ADSAMPLE:ADDomain) [Get-ADDomain], ADServerDownException

+ FullyQualifiedErrorId : ActiveDirectoryServer:1355,Microsoft.ActiveDirectory.Management.Commands.GetADDomain

PS C:\Users\administrator.ADSAMPLE>

I thought specifying the server name might help, so I tried "Get-ADDomain -Server <server name>", but that also failed.

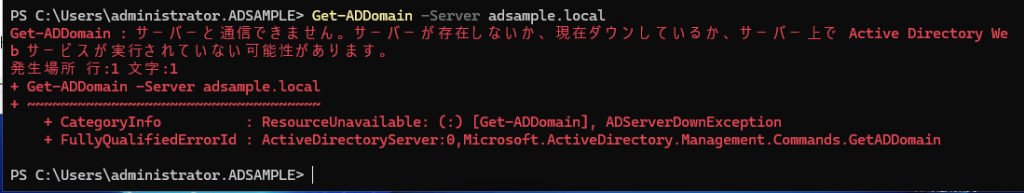

PS C:\Users\administrator.ADSAMPLE> Get-ADDomain -Server adsample.local

Get-ADDomain : Unable to contact the server. This may be because this server does not exist, it is currently down, or it does not have the Active Directory Web Services running.

At line:1 char:1

+ Get-ADDomain -Server adsample.local

+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : ResourceUnavailable: (:) [Get-ADDomain], ADServerDownException

+ FullyQualifiedErrorId : ActiveDirectoryServer:0,Microsoft.ActiveDirectory.Management.Commands.GetADDomain

PS C:\Users\administrator.ADSAMPLE>

This time, the error names a likely cause: the server "does not have the Active Directory Web Services running."

Looking into Active Directory Web Services (ADWS), it appears that Samba does not provide it.
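One quick way to check the error's claim is to see whether anything is listening on the ADWS port. On Windows domain controllers, ADWS listens on TCP 9389, and the ActiveDirectory module cmdlets connect to it there. Below is a minimal Python sketch, assuming the DC is reachable by name from wherever you run it; the function name adws_port_open is hypothetical, made up for illustration. A successful connection only shows that something is listening on 9389, not that it is a working ADWS endpoint.

```python
import socket

# ADWS (Active Directory Web Services) listens on TCP 9389 on Windows
# domain controllers; the ActiveDirectory PowerShell module connects there.
ADWS_PORT = 9389


def adws_port_open(host: str, port: int = ADWS_PORT, timeout: float = 3.0) -> bool:
    """Hypothetical helper: True if a TCP connection to host:port succeeds.

    Only tests TCP reachability; it does not speak the ADWS protocol.
    """
    try:
        # create_connection resolves the name and tries each returned address.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

For example, adws_port_open("adsample.local") would be expected to return False against the Samba DC in this article, since Samba does not implement ADWS, while a Windows Server DC with ADWS running should return True.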

The Samba wiki page "ADWS / AD Powershell compatibility", created on April 13, 2021, states: "Samba does not support many of the AD PowerShell commands that manipulate AD objects, ..."

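According to that page, a project called "samba-adws" was started around 2016 to make these PowerShell commands work with Samba, and it is described as still under development.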

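The master branch was last updated in December 2018, but the "garming-main" branch shows some activity until around September 2024. That work seems to have been done mainly at https://github.com/GSam/samba-adws, and it appears to be usable with the patched Samba published at https://github.com/GSam/samba.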

Either way, neither repository appears to have been updated in over a year.

As a result, it seems that the PowerShell Active Directory cmdlets, which depend on ADWS, cannot be used in a Samba environment.