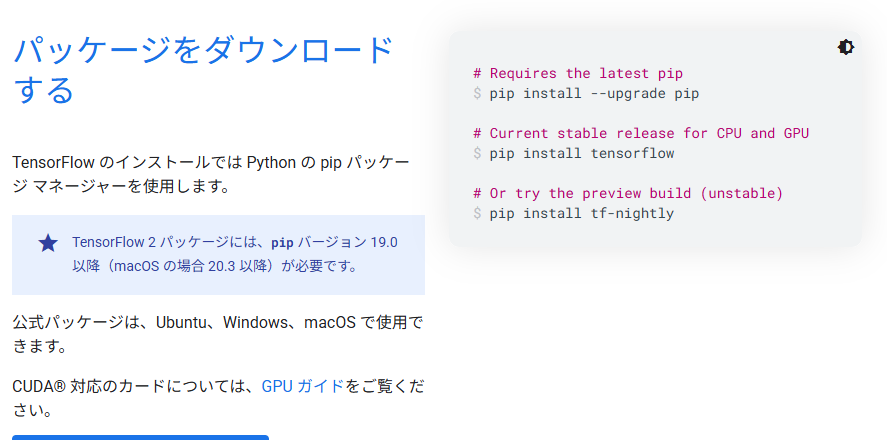

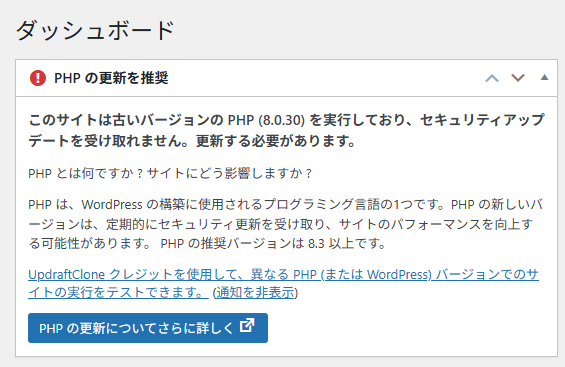

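I run WordPress on AlmaLinux 9, and the dashboard started showing the "PHP Update Recommended" warning shown here.

(The Updraft plugin is installed, so the notice also contains some unrelated text.)

The "Learn more about updating PHP" link in the warning leads to the page "Get a faster, more secure website: update your PHP today".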

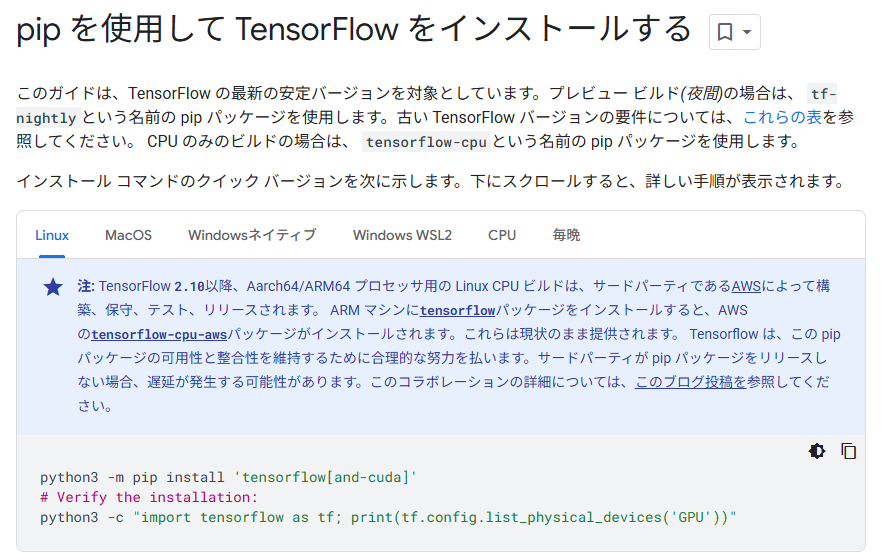

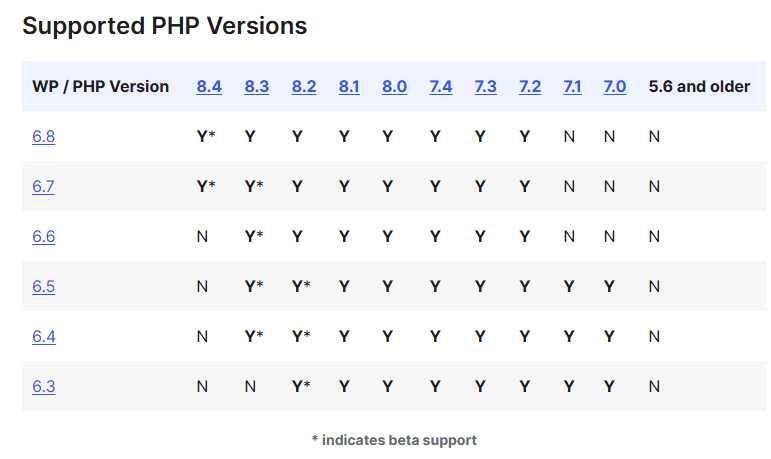

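Addendum, 2025/08/14: according to "PHP Compatibility and WordPress Versions", PHP versions other than 8.3 also appear to be fine for the time being.

I checked which RHEL / AlmaLinux / Rocky Linux / Oracle Linux releases provide PHP 8.3 or later. In the default configuration, that starts with RHEL 10.

Red Hat Enterprise Linux 10, Installing and using dynamic programming languages, "Chapter 4. Using the PHP scripting language"

On RHEL 9, PHP 8.3 was added in RHEL 9.6, together with MySQL 8.4.

Red Hat Enterprise Linux 9, 9.6 Release Notes, "3.12. Dynamic programming languages, web and database servers"

RHEL 8 will not receive any further minor releases, so PHP 8.3 will never be provided officially and a third-party repository would be needed. Judging from the compatibility notes mentioned above, however, PHP 8.2 should be fine for a while.

In the RHEL 7 era, Oracle Linux's "PHP Packages for Oracle Linux" distributed newer PHP versions that RHEL itself did not ship. There is no sign of that so far this time, so I suppose RHEL 8 systems stay on PHP 8.2?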

Installing PHP 8.3 on the RHEL 9 family

On RHEL 9 / AlmaLinux 9, PHP 8.3 can be installed starting with 9.6, so first finish updating the system to at least that version.
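As a rough way to check the 9.6 prerequisite from a script, the release number can be read from /etc/os-release and compared. This is only a sketch: the helper name `version_at_least` is made up for illustration, and it assumes GNU `sort -V` is available and that the machine is a RHEL-family system whose VERSION_ID looks like "9.6".

```shell
#!/bin/sh
# Return success when version $1 is greater than or equal to version $2.
# Uses GNU "sort -V" (version sort), so 9.10 correctly ranks above 9.6.
version_at_least() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Read VERSION_ID in a subshell so os-release variables do not leak
# into the current shell. Assumption: the file exists and sets VERSION_ID.
if [ -r /etc/os-release ]; then
    release=$(. /etc/os-release && echo "${VERSION_ID:-0}")
    if version_at_least "$release" 9.6; then
        echo "release $release: new enough for the php:8.3 stream"
    else
        echo "release $release: update first (sudo dnf upgrade)"
    fi
fi
```

The comparison is done with version sort rather than a numeric test because a plain float comparison would treat 9.10 as smaller than 9.6.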

After that, run "dnf module list php" to confirm that PHP 8.3 can be selected as a module stream.

$ dnf module list php

メタデータの期限切れの最終確認: 0:00:10 前の 2025年07月16日 09時55分14秒 に実施しました。

AlmaLinux 9 - AppStream

Name Stream Profiles Summary

php 8.1 common [d], devel, minimal PHP scripting language

php 8.2 common [d], devel, minimal PHP scripting language

php 8.3 common [d], devel, minimal PHP scripting language

ヒント: [d]efault, [e]nabled, [x]disabled, [i]nstalled

$ php -v

PHP 8.0.30 (cli) (built: May 13 2025 19:33:03) ( NTS gcc x86_64 )

Copyright (c) The PHP Group

Zend Engine v4.0.30, Copyright (c) Zend Technologies

with Zend OPcache v8.0.30, Copyright (c), by Zend Technologies

$

For a fresh installation, specify the version explicitly with "dnf install @php:8.3".

$ sudo dnf install @php:8.3

If PHP is already installed, switch versions with "dnf module switch-to php:8.3" (see "11.5. Switching to a later stream").

$ sudo dnf module switch-to php:8.3
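The choice between the two commands above could be scripted roughly as follows. This is a sketch that only prints the command and never runs it. The function name `php_stream_command` is hypothetical, and treating "php-common is installed" as the sign that PHP is already present is my assumption.

```shell
#!/bin/sh
# Print the dnf command that fits the situation. Nothing is executed.
#   $1 = target stream (for example 8.3)
#   $2 = "yes" if PHP is already installed, anything else otherwise
php_stream_command() {
    if [ "$2" = "yes" ]; then
        echo "sudo dnf module switch-to php:$1"
    else
        echo "sudo dnf install @php:$1"
    fi
}

# Assumption: an installed php-common package means PHP is already present.
if rpm -q php-common >/dev/null 2>&1; then
    installed=yes
else
    installed=no
fi
php_stream_command 8.3 "$installed"
```

Printing instead of executing keeps the final step manual, which seems safer for a change that replaces the PHP runtime of a live site.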

After the installation finishes, check the version.

$ dnf module list php

メタデータの期限切れの最終確認: 0:08:21 前の 2025年07月16日 09時55分14秒 に実施しました。

AlmaLinux 9 - AppStream

Name Stream Profiles Summary

php 8.1 common [d], devel, minimal PHP scripting language

php 8.2 common [d], devel, minimal PHP scripting language

php 8.3 [e] common [d], devel, minimal PHP scripting language

ヒント: [d]efault, [e]nabled, [x]disabled, [i]nstalled

$

The php 8.3 row now carries an [e] mark, which confirms that the stream has switched to enabled.

Checking with php -v also confirms that the version is now 8.3.19.

$ php -v

PHP 8.3.19 (cli) (built: Mar 12 2025 13:10:27) (NTS gcc x86_64)

Copyright (c) The PHP Group

Zend Engine v4.3.19, Copyright (c) Zend Technologies

with Zend OPcache v8.3.19, Copyright (c), by Zend Technologies

$
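The check for the [e] mark can be automated by filtering the module list. The sketch below assumes the column layout shown in the listings above (module name, stream, optional markers, then profiles). The function name `enabled_php_stream` is made up for this example.

```shell
#!/bin/sh
# Read "dnf module list php" output on stdin and print the stream whose
# marker column contains [e] (enabled). Prints nothing if none is enabled.
# Assumption: fields are "php <stream> [markers] <profiles...>" as above.
enabled_php_stream() {
    awk '$1 == "php" && $3 ~ /\[e\]/ { print $2 }'
}

# Example use on a real system (not run here):
#   dnf module list php | enabled_php_stream
```

On the system in this article the function should print 8.3 after the switch and nothing before it, since no stream was enabled at that point.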

Troubleshooting

Incidentally, in my environment "dnf module switch-to php:8.3" did not go through cleanly.

$ sudo dnf module switch-to php:8.3

メタデータの期限切れの最終確認: 2:38:13 前の 2025年07月16日 07時18分53秒 に実施しました。

エラー:

問題: インストール済パッケージの問題 php-pecl-imagick-3.7.0-1.el9.x86_64

- package php-pecl-imagick-3.7.0-1.el9.x86_64 from @System requires php(api) = 20200930-64, but none of the providers can be installed

- package php-pecl-imagick-3.7.0-1.el9.x86_64 from @System requires php(zend-abi) = 20200930-64, but none of the providers can be installed

- package php-pecl-imagick-3.7.0-1.el9.x86_64 from epel requires php(api) = 20200930-64, but none of the providers can be installed

- package php-pecl-imagick-3.7.0-1.el9.x86_64 from epel requires php(zend-abi) = 20200930-64, but none of the providers can be installed

- php-common-8.0.30-3.el9_6.x86_64 from @System does not belong to a distupgrade repository

- package php-common-8.0.30-3.el9_6.x86_64 from appstream is filtered out by modular filtering

(インストール不可のパッケージをスキップするには、'--skip-broken' を追加してみてください または、'--nobest' を追加して、最適候補のパッケージのみを使用しないでください)

$

The cause was the php-pecl-imagick package, which had been installed from the EPEL repository.

$ dnf info php-pecl-imagick

メタデータの期限切れの最終確認: 0:02:32 前の 2025年07月16日 09時55分14秒 に実施しました。

インストール済みパッケージ

名前 : php-pecl-imagick

バージョン : 3.7.0

リリース : 1.el9

Arch : x86_64

サイズ : 554 k

ソース : php-pecl-imagick-3.7.0-1.el9.src.rpm

リポジトリー : @System

repo から : epel

概要 : Provides a wrapper to the ImageMagick library

URL : https://pecl.php.net/package/imagick

ライセンス : PHP

説明 : Imagick is a native php extension to create and modify images using the

: ImageMagick API.

$
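When the error lists many lines, the blocking packages can be pulled out mechanically. The sketch below extracts the package names from the "requires php(...)" lines of an error like the one above. The function name `blocking_packages` is hypothetical, and the pattern only assumes the exact line format seen in this transcript.

```shell
#!/bin/sh
# Read dnf's dependency error text on stdin and print, once each, the
# packages that require a specific php(api) or php(zend-abi) build.
# Assumption: lines look like
#   "- package NAME from REPO requires php(...) = ..., but none of ..."
blocking_packages() {
    sed -n 's/^ *- package \([^ ]*\) from .* requires php(.*/\1/p' | sort -u
}

# Example use on a real system (not run here):
#   sudo dnf module switch-to php:8.3 2>&1 | blocking_packages
```

For the error shown above this reduces eight lines of output to the single name php-pecl-imagick-3.7.0-1.el9.x86_64, which can then be inspected with dnf info as done here.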

When I uninstalled it, I was surprised by how many related packages were removed along with it.
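To get a feel for the size of such a removal before answering "y", the transaction table can be summarized per repository. The following is a sketch, not something I ran at the time. The function name `count_by_repo` is made up, and it assumes each package row fits on one line with the repository (prefixed by @) in the fourth column, as in the table that follows.

```shell
#!/bin/sh
# Read a dnf transaction table on stdin and count package rows per
# source repository (fourth column, e.g. @appstream).
# Assumption: rows are not wrapped onto a second line by a narrow terminal.
count_by_repo() {
    awk '$4 ~ /^@/ { n[$4]++ } END { for (r in n) print r, n[r] }' | sort
}

# Example use on a real system (not run here). --assumeno makes dnf print
# the transaction and then answer "no" to the confirmation prompt:
#   sudo dnf remove --assumeno php-pecl-imagick | count_by_repo
```

If only the one package should go and its now-unused dependencies should stay, dnf also has a --noautoremove option for the remove command. Whether keeping those dependencies is desirable depends on what else the server is used for.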

$ sudo dnf remove php-pecl-imagick

依存関係が解決しました。

===========================================================================================================

パッケージ Arch バージョン リポジトリー サイズ

===========================================================================================================

削除中:

php-pecl-imagick x86_64 3.7.0-1.el9 @epel 554 k

未使用の依存関係の削除:

ImageMagick-libs x86_64 6.9.13.25-1.el9 @epel 8.3 M

LibRaw x86_64 0.21.1-1.el9 @appstream 2.4 M

ModemManager-glib x86_64 1.20.2-1.el9 @baseos 1.5 M

abattis-cantarell-fonts noarch 0.301-4.el9 @appstream 705 k

adobe-mappings-cmap noarch 20171205-12.el9 @appstream 13 M

adobe-mappings-cmap-deprecated noarch 20171205-12.el9 @appstream 583 k

adobe-mappings-pdf noarch 20180407-10.el9 @appstream 4.2 M

adobe-source-code-pro-fonts noarch 2.030.1.050-12.el9.1 @baseos 1.8 M

adwaita-cursor-theme noarch 40.1.1-3.el9 @appstream 12 M

adwaita-icon-theme noarch 40.1.1-3.el9 @appstream 11 M

at-spi2-atk x86_64 2.38.0-4.el9 @appstream 272 k

at-spi2-core x86_64 2.40.3-1.el9 @appstream 516 k

atk x86_64 2.36.0-5.el9 @appstream 1.2 M

avahi-glib x86_64 0.8-22.el9_6 @appstream 19 k

avahi-libs x86_64 0.8-22.el9_6 @baseos 173 k

bluez-libs x86_64 5.72-4.el9 @baseos 214 k

bubblewrap x86_64 0.4.1-8.el9_5 @baseos 101 k

cairo x86_64 1.17.4-7.el9 @appstream 1.6 M

cairo-gobject x86_64 1.17.4-7.el9 @appstream 43 k

colord-libs x86_64 1.4.5-6.el9_6 @appstream 830 k

composefs-libs x86_64 1.0.8-1.el9 @appstream 143 k

cups-libs x86_64 1:2.3.3op2-33.el9 @baseos 670 k

dconf x86_64 0.40.0-6.el9 @appstream 305 k

dejavu-sans-fonts noarch 2.37-18.el9 @baseos 5.7 M

exempi x86_64 2.6.0-0.2.20211007gite23c213.el9 @appstream 1.3 M

exiv2 x86_64 0.27.5-2.el9 @appstream 4.4 M

exiv2-libs x86_64 0.27.5-2.el9 @appstream 2.7 M

fdk-aac-free x86_64 2.0.0-8.el9 @appstream 588 k

flac-libs x86_64 1.3.3-10.el9_2.1 @appstream 549 k

flatpak x86_64 1.12.9-4.el9_6 @appstream 7.7 M

flatpak-selinux noarch 1.12.9-4.el9_6 @appstream 13 k

flatpak-session-helper x86_64 1.12.9-4.el9_6 @appstream 210 k

fontconfig x86_64 2.14.0-2.el9_1 @appstream 818 k

fonts-filesystem noarch 1:2.0.5-7.el9.1 @baseos 0

fribidi x86_64 1.0.10-6.el9.2 @appstream 339 k

fuse x86_64 2.9.9-17.el9 @baseos 214 k

fuse-common x86_64 3.10.2-9.el9 @baseos 38

gd x86_64 2.3.2-3.el9 @appstream 412 k

gdk-pixbuf2 x86_64 2.42.6-4.el9_4 @appstream 2.5 M

gdk-pixbuf2-modules x86_64 2.42.6-4.el9_4 @appstream 265 k

geoclue2 x86_64 2.6.0-8.el9_6.1 @appstream 359 k

giflib x86_64 5.2.1-9.el9 @appstream 106 k

glib-networking x86_64 2.68.3-3.el9 @baseos 640 k

google-droid-sans-fonts noarch 20200215-11.el9.2 @appstream 6.3 M

graphene x86_64 1.10.6-2.el9 @appstream 167 k

graphviz x86_64 2.44.0-26.el9 @appstream 12 M

gsettings-desktop-schemas x86_64 40.0-6.el9 @baseos 4.5 M

gsm x86_64 1.0.19-6.el9 @appstream 64 k

gstreamer1 x86_64 1.22.12-3.el9 @appstream 4.8 M

gstreamer1-plugins-base x86_64 1.22.12-4.el9 @appstream 7.3 M

gtk-update-icon-cache x86_64 3.24.31-5.el9 @appstream 66 k

gtk2 x86_64 2.24.33-8.el9 @appstream 13 M

gtk3 x86_64 3.24.31-5.el9 @appstream 20 M

hicolor-icon-theme noarch 0.17-13.el9 @appstream 72 k

imath x86_64 3.1.2-1.el9 @appstream 363 k

iso-codes noarch 4.6.0-3.el9 @appstream 19 M

jasper-libs x86_64 2.0.28-3.el9 @appstream 326 k

jbig2dec-libs x86_64 0.19-7.el9 @appstream 164 k

jbigkit-libs x86_64 2.1-23.el9 @appstream 114 k

langpacks-core-font-en noarch 3.0-16.el9 @appstream 351

lcms2 x86_64 2.12-3.el9 @appstream 399 k

libICE x86_64 1.0.10-8.el9 @appstream 171 k

libSM x86_64 1.2.3-10.el9 @appstream 93 k

libX11 x86_64 1.7.0-11.el9 @appstream 1.3 M

libX11-common noarch 1.7.0-11.el9 @appstream 1.3 M

libX11-xcb x86_64 1.7.0-11.el9 @appstream 15 k

libXau x86_64 1.0.9-8.el9 @appstream 63 k

libXaw x86_64 1.0.13-19.el9 @appstream 498 k

libXcomposite x86_64 0.4.5-7.el9 @appstream 41 k

libXcursor x86_64 1.2.0-7.el9 @appstream 50 k

libXdamage x86_64 1.1.5-7.el9 @appstream 36 k

libXext x86_64 1.3.4-8.el9 @appstream 93 k

libXfixes x86_64 5.0.3-16.el9 @appstream 35 k

libXft x86_64 2.3.3-8.el9 @appstream 133 k

libXi x86_64 1.7.10-8.el9 @appstream 73 k

libXinerama x86_64 1.1.4-10.el9 @appstream 19 k

libXmu x86_64 1.1.3-8.el9 @appstream 184 k

libXpm x86_64 3.5.13-10.el9 @appstream 126 k

libXrandr x86_64 1.5.2-8.el9 @appstream 52 k

libXrender x86_64 0.9.10-16.el9 @appstream 50 k

libXt x86_64 1.2.0-6.el9 @appstream 443 k

libXtst x86_64 1.2.3-16.el9 @appstream 38 k

libXv x86_64 1.0.11-16.el9 @appstream 26 k

libXxf86vm x86_64 1.1.4-18.el9 @appstream 26 k

libappstream-glib x86_64 0.7.18-5.el9_4 @appstream 1.4 M

libasyncns x86_64 0.8-22.el9 @appstream 59 k

libatomic x86_64 11.5.0-5.el9_5.alma.1 @baseos 29 k

libcanberra x86_64 0.30-27.el9 @appstream 281 k

libcanberra-gtk2 x86_64 0.30-27.el9 @appstream 54 k

libcanberra-gtk3 x86_64 0.30-27.el9 @appstream 74 k

libdrm x86_64 2.4.123-2.el9 @appstream 407 k

libepoxy x86_64 1.5.5-4.el9 @appstream 1.2 M

libexif x86_64 0.6.22-6.el9 @appstream 2.3 M

libfontenc x86_64 1.1.3-17.el9 @appstream 63 k

libgexiv2 x86_64 0.12.3-1.el9 @appstream 225 k

libglvnd x86_64 1:1.3.4-1.el9 @appstream 778 k

libglvnd-egl x86_64 1:1.3.4-1.el9 @appstream 69 k

libglvnd-glx x86_64 1:1.3.4-1.el9 @appstream 678 k

libgs x86_64 9.54.0-19.el9_6 @appstream 19 M

libgsf x86_64 1.14.47-5.el9 @appstream 941 k

libgxps x86_64 0.3.2-3.el9 @appstream 193 k

libijs x86_64 0.35-15.el9 @appstream 66 k

libiptcdata x86_64 1.0.5-10.el9 @appstream 172 k

libjpeg-turbo x86_64 2.0.90-7.el9 @appstream 633 k

libldac x86_64 2.0.2.3-10.el9 @appstream 79 k

liblqr-1 x86_64 0.4.2-19.el9 @epel 97 k

libnotify x86_64 0.7.9-8.el9 @appstream 99 k

libogg x86_64 2:1.3.4-6.el9 @appstream 49 k

libosinfo x86_64 1.10.0-1.el9 @appstream 1.2 M

libpaper x86_64 1.1.28-4.el9 @appstream 95 k

libpciaccess x86_64 0.16-7.el9 @baseos 48 k

libproxy x86_64 0.4.15-35.el9 @baseos 163 k

libproxy-webkitgtk4 x86_64 0.4.15-35.el9 @appstream 32 k

libraqm x86_64 0.8.0-1.el9 @epel 29 k

librsvg2 x86_64 2.50.7-3.el9 @appstream 10 M

libsbc x86_64 1.4-9.el9 @appstream 81 k

libsndfile x86_64 1.0.31-9.el9 @appstream 521 k

libsoup x86_64 2.72.0-10.el9_6.2 @appstream 1.2 M

libstemmer x86_64 0-18.585svn.el9 @appstream 344 k

libtheora x86_64 1:1.1.1-31.el9 @appstream 463 k

libtiff x86_64 4.4.0-13.el9 @appstream 573 k

libtracker-sparql x86_64 3.1.2-3.el9_1 @appstream 1.0 M

libvorbis x86_64 1:1.3.7-5.el9 @appstream 903 k

libwayland-client x86_64 1.21.0-1.el9 @appstream 70 k

libwayland-cursor x86_64 1.21.0-1.el9 @appstream 37 k

libwayland-egl x86_64 1.21.0-1.el9 @appstream 16 k

libwayland-server x86_64 1.21.0-1.el9 @appstream 86 k

libwebp x86_64 1.2.0-8.el9_3 @appstream 769 k

libwmf-lite x86_64 0.2.12-10.el9 @appstream 164 k

libxcb x86_64 1.13.1-9.el9 @appstream 1.1 M

libxkbcommon x86_64 1.0.3-4.el9 @appstream 317 k

libxshmfence x86_64 1.3-10.el9 @appstream 16 k

low-memory-monitor x86_64 2.1-4.el9 @appstream 70 k

mesa-dri-drivers x86_64 24.2.8-2.el9_6.alma.1 @appstream 37 M

mesa-filesystem x86_64 24.2.8-2.el9_6.alma.1 @appstream 3.6 k

mesa-libEGL x86_64 24.2.8-2.el9_6.alma.1 @appstream 394 k

mesa-libGL x86_64 24.2.8-2.el9_6.alma.1 @appstream 508 k

mesa-libgbm x86_64 24.2.8-2.el9_6.alma.1 @appstream 64 k

mesa-libglapi x86_64 24.2.8-2.el9_6.alma.1 @appstream 217 k

mkfontscale x86_64 1.2.1-3.el9 @appstream 54 k

openexr-libs x86_64 3.1.1-3.el9 @appstream 4.7 M

openjpeg2 x86_64 2.4.0-8.el9 @appstream 376 k

opus x86_64 1.3.1-10.el9 @appstream 355 k

orc x86_64 0.4.31-8.el9 @appstream 601 k

osinfo-db noarch 20250124-2.el9_6.alma.2 @appstream 3.9 M

osinfo-db-tools x86_64 1.10.0-1.el9 @appstream 177 k

ostree-libs x86_64 2025.1-1.el9 @appstream 1.1 M

p11-kit-server x86_64 0.25.3-3.el9_5 @appstream 1.3 M

pango x86_64 1.48.7-3.el9 @appstream 878 k

pipewire x86_64 1.0.1-1.el9 @appstream 351 k

pipewire-alsa x86_64 1.0.1-1.el9 @appstream 173 k

pipewire-jack-audio-connection-kit x86_64 1.0.1-1.el9 @appstream 30

pipewire-jack-audio-connection-kit-libs x86_64 1.0.1-1.el9 @appstream 547 k

pipewire-libs x86_64 1.0.1-1.el9 @appstream 7.6 M

pipewire-pulseaudio x86_64 1.0.1-1.el9 @appstream 427 k

pixman x86_64 0.40.0-6.el9_3 @appstream 694 k

poppler x86_64 21.01.0-21.el9 @appstream 3.6 M

poppler-data noarch 0.4.9-9.el9 @appstream 11 M

poppler-glib x86_64 21.01.0-21.el9 @appstream 477 k

pulseaudio-libs x86_64 15.0-3.el9 @appstream 3.2 M

rtkit x86_64 0.11-29.el9 @appstream 146 k

sound-theme-freedesktop noarch 0.8-17.el9 @appstream 460 k

totem-pl-parser x86_64 3.26.6-2.el9 @appstream 330 k

tracker x86_64 3.1.2-3.el9_1 @appstream 2.0 M

tracker-miners x86_64 3.1.2-4.el9_3 @appstream 4.0 M

upower x86_64 0.99.13-2.el9 @appstream 547 k

urw-base35-bookman-fonts noarch 20200910-6.el9 @appstream 1.4 M

urw-base35-c059-fonts noarch 20200910-6.el9 @appstream 1.4 M

urw-base35-d050000l-fonts noarch 20200910-6.el9 @appstream 85 k

urw-base35-fonts noarch 20200910-6.el9 @appstream 5.3 k

urw-base35-fonts-common noarch 20200910-6.el9 @appstream 37 k

urw-base35-gothic-fonts noarch 20200910-6.el9 @appstream 1.2 M

urw-base35-nimbus-mono-ps-fonts noarch 20200910-6.el9 @appstream 1.0 M

urw-base35-nimbus-roman-fonts noarch 20200910-6.el9 @appstream 1.4 M

urw-base35-nimbus-sans-fonts noarch 20200910-6.el9 @appstream 2.4 M

urw-base35-p052-fonts noarch 20200910-6.el9 @appstream 1.5 M

urw-base35-standard-symbols-ps-fonts noarch 20200910-6.el9 @appstream 44 k

urw-base35-z003-fonts noarch 20200910-6.el9 @appstream 391 k

webkit2gtk3-jsc x86_64 2.48.3-1.el9_6 @appstream 28 M

webrtc-audio-processing x86_64 0.3.1-8.el9 @appstream 734 k

wireplumber x86_64 0.4.14-1.el9 @appstream 301 k

wireplumber-libs x86_64 0.4.14-1.el9 @appstream 1.2 M

xdg-dbus-proxy x86_64 0.1.3-1.el9 @appstream 85 k

xdg-desktop-portal x86_64 1.12.6-1.el9 @appstream 1.8 M

xdg-desktop-portal-gtk x86_64 1.12.0-3.el9 @appstream 478 k

xkeyboard-config noarch 2.33-2.el9 @appstream 5.8 M

xml-common noarch 0.6.3-58.el9 @appstream 78 k

xorg-x11-fonts-ISO8859-1-100dpi noarch 7.5-33.el9 @appstream 1.0 M

トランザクションの概要

===========================================================================================================

削除 189 パッケージ

解放された容量: 369 M

これでよろしいですか? [y/N]: y

トランザクションを確認しています

トランザクションの確認に成功しました。

トランザクションをテストしています

トランザクションのテストに成功しました。

トランザクションを実行しています

準備中 : 1/1

削除中 : flatpak-1.12.9-4.el9_6.x86_64 1/189

削除中 : libappstream-glib-0.7.18-5.el9_4.x86_64 2/189

削除中 : php-pecl-imagick-3.7.0-1.el9.x86_64 3/189

削除中 : ImageMagick-libs-6.9.13.25-1.el9.x86_64 4/189

削除中 : graphviz-2.44.0-26.el9.x86_64 5/189

削除中 : gtk2-2.24.33-8.el9.x86_64 6/189

削除中 : libgs-9.54.0-19.el9_6.x86_64 7/189

削除中 : libcanberra-gtk2-0.30-27.el9.x86_64 8/189

削除中 : urw-base35-fonts-20200910-6.el9.noarch 9/189

scriptletの実行中: xdg-desktop-portal-gtk-1.12.0-3.el9.x86_64 10/189

削除中 : xdg-desktop-portal-gtk-1.12.0-3.el9.x86_64 10/189

削除中 : gtk3-3.24.31-5.el9.x86_64 11/189

削除中 : libcanberra-gtk3-0.30-27.el9.x86_64 12/189

削除中 : librsvg2-2.50.7-3.el9.x86_64 13/189

削除中 : cairo-gobject-1.17.4-7.el9.x86_64 14/189

削除中 : gd-2.3.2-3.el9.x86_64 15/189

scriptletの実行中: libcanberra-0.30-27.el9.x86_64 16/189

削除中 : libcanberra-0.30-27.el9.x86_64 16/189

scriptletの実行中: xdg-desktop-portal-1.12.6-1.el9.x86_64 17/189

削除中 : xdg-desktop-portal-1.12.6-1.el9.x86_64 17/189

削除中 : wireplumber-libs-0.4.14-1.el9.x86_64 18/189

scriptletの実行中: wireplumber-0.4.14-1.el9.x86_64 19/189

Removed "/etc/systemd/user/pipewire-session-manager.service".

Removed "/etc/systemd/user/pipewire.service.wants/wireplumber.service".

削除中 : wireplumber-0.4.14-1.el9.x86_64 19/189

削除中 : pipewire-alsa-1.0.1-1.el9.x86_64 20/189

削除中 : pipewire-jack-audio-connection-kit-libs-1.0.1-1.el9.x86_64 21/189

削除中 : pipewire-jack-audio-connection-kit-1.0.1-1.el9.x86_64 22/189

削除中 : pipewire-libs-1.0.1-1.el9.x86_64 23/189

削除中 : pipewire-pulseaudio-1.0.1-1.el9.x86_64 24/189

削除中 : pipewire-1.0.1-1.el9.x86_64 25/189

scriptletの実行中: geoclue2-2.6.0-8.el9_6.1.x86_64 26/189

削除中 : geoclue2-2.6.0-8.el9_6.1.x86_64 26/189

scriptletの実行中: geoclue2-2.6.0-8.el9_6.1.x86_64 26/189

削除中 : libXaw-1.0.13-19.el9.x86_64 27/189

削除中 : LibRaw-0.21.1-1.el9.x86_64 28/189

削除中 : urw-base35-bookman-fonts-20200910-6.el9.noarch 29/189

scriptletの実行中: urw-base35-bookman-fonts-20200910-6.el9.noarch 29/189

削除中 : urw-base35-c059-fonts-20200910-6.el9.noarch 30/189

scriptletの実行中: urw-base35-c059-fonts-20200910-6.el9.noarch 30/189

削除中 : urw-base35-d050000l-fonts-20200910-6.el9.noarch 31/189

scriptletの実行中: urw-base35-d050000l-fonts-20200910-6.el9.noarch 31/189

削除中 : urw-base35-gothic-fonts-20200910-6.el9.noarch 32/189

scriptletの実行中: urw-base35-gothic-fonts-20200910-6.el9.noarch 32/189

削除中 : urw-base35-nimbus-mono-ps-fonts-20200910-6.el9.noarch 33/189

scriptletの実行中: urw-base35-nimbus-mono-ps-fonts-20200910-6.el9.noarch 33/189

削除中 : urw-base35-nimbus-roman-fonts-20200910-6.el9.noarch 34/189

scriptletの実行中: urw-base35-nimbus-roman-fonts-20200910-6.el9.noarch 34/189

削除中 : urw-base35-nimbus-sans-fonts-20200910-6.el9.noarch 35/189

scriptletの実行中: urw-base35-nimbus-sans-fonts-20200910-6.el9.noarch 35/189

削除中 : urw-base35-p052-fonts-20200910-6.el9.noarch 36/189

scriptletの実行中: urw-base35-p052-fonts-20200910-6.el9.noarch 36/189

削除中 : urw-base35-standard-symbols-ps-fonts-20200910-6.el9.noarch 37/189

scriptletの実行中: urw-base35-standard-symbols-ps-fonts-20200910-6.el9.noarch 37/189

削除中 : urw-base35-z003-fonts-20200910-6.el9.noarch 38/189

scriptletの実行中: urw-base35-z003-fonts-20200910-6.el9.noarch 38/189

削除中 : xorg-x11-fonts-ISO8859-1-100dpi-7.5-33.el9.noarch 39/189

scriptletの実行中: xorg-x11-fonts-ISO8859-1-100dpi-7.5-33.el9.noarch 39/189

削除中 : pulseaudio-libs-15.0-3.el9.x86_64 40/189

削除中 : libsndfile-1.0.31-9.el9.x86_64 41/189

削除中 : gdk-pixbuf2-modules-2.42.6-4.el9_4.x86_64 42/189

削除中 : libXcursor-1.2.0-7.el9.x86_64 43/189

削除中 : libXrandr-1.5.2-8.el9.x86_64 44/189

削除中 : at-spi2-atk-2.38.0-4.el9.x86_64 45/189

削除中 : at-spi2-core-2.40.3-1.el9.x86_64 46/189

削除中 : libXtst-1.2.3-16.el9.x86_64 47/189

削除中 : libXmu-1.1.3-8.el9.x86_64 48/189

削除中 : libXt-1.2.0-6.el9.x86_64 49/189

削除中 : libXinerama-1.1.4-10.el9.x86_64 50/189

scriptletの実行中: tracker-3.1.2-3.el9_1.x86_64 51/189

削除中 : tracker-3.1.2-3.el9_1.x86_64 51/189

scriptletの実行中: tracker-3.1.2-3.el9_1.x86_64 51/189

scriptletの実行中: tracker-miners-3.1.2-4.el9_3.x86_64 52/189

削除中 : tracker-miners-3.1.2-4.el9_3.x86_64 52/189

scriptletの実行中: tracker-miners-3.1.2-4.el9_3.x86_64 52/189

削除中 : libtracker-sparql-3.1.2-3.el9_1.x86_64 53/189

削除中 : gstreamer1-plugins-base-1.22.12-4.el9.x86_64 54/189

削除中 : pango-1.48.7-3.el9.x86_64 55/189

削除中 : libgxps-0.3.2-3.el9.x86_64 56/189

削除中 : libglvnd-glx-1:1.3.4-1.el9.x86_64 57/189

削除中 : mesa-libGL-24.2.8-2.el9_6.alma.1.x86_64 58/189

削除中 : libglvnd-egl-1:1.3.4-1.el9.x86_64 59/189

削除中 : mesa-libEGL-24.2.8-2.el9_6.alma.1.x86_64 60/189

削除中 : mesa-libglapi-24.2.8-2.el9_6.alma.1.x86_64 61/189

削除中 : mesa-dri-drivers-24.2.8-2.el9_6.alma.1.x86_64 62/189

削除中 : mesa-libgbm-24.2.8-2.el9_6.alma.1.x86_64 63/189

削除中 : libXft-2.3.3-8.el9.x86_64 64/189

削除中 : libXxf86vm-1.1.4-18.el9.x86_64 65/189

削除中 : libXi-1.7.10-8.el9.x86_64 66/189

削除中 : libXv-1.0.11-16.el9.x86_64 67/189

削除中 : libosinfo-1.10.0-1.el9.x86_64 68/189

削除中 : poppler-glib-21.01.0-21.el9.x86_64 69/189

削除中 : cairo-1.17.4-7.el9.x86_64 70/189

削除中 : poppler-21.01.0-21.el9.x86_64 71/189

削除中 : fontconfig-2.14.0-2.el9_1.x86_64 72/189

scriptletの実行中: fontconfig-2.14.0-2.el9_1.x86_64 72/189

削除中 : libtiff-4.4.0-13.el9.x86_64 73/189

削除中 : libXext-1.3.4-8.el9.x86_64 74/189

削除中 : libXrender-0.9.10-16.el9.x86_64 75/189

削除中 : jasper-libs-2.0.28-3.el9.x86_64 76/189

削除中 : avahi-glib-0.8-22.el9_6.x86_64 77/189

削除中 : libXdamage-1.1.5-7.el9.x86_64 78/189

削除中 : libXfixes-5.0.3-16.el9.x86_64 79/189

削除中 : cups-libs-1:2.3.3op2-33.el9.x86_64 80/189

削除中 : exiv2-0.27.5-2.el9.x86_64 81/189

削除中 : langpacks-core-font-en-3.0-16.el9.noarch 82/189

削除中 : dejavu-sans-fonts-2.37-18.el9.noarch 83/189

削除中 : iso-codes-4.6.0-3.el9.noarch 84/189

削除中 : urw-base35-fonts-common-20200910-6.el9.noarch 85/189

削除中 : adwaita-icon-theme-40.1.1-3.el9.noarch 86/189

削除中 : adobe-mappings-cmap-deprecated-20171205-12.el9.noarch 87/189

削除中 : google-droid-sans-fonts-20200215-11.el9.2.noarch 88/189

削除中 : osinfo-db-tools-1.10.0-1.el9.x86_64 89/189

削除中 : libsoup-2.72.0-10.el9_6.2.x86_64 90/189

削除中 : glib-networking-2.68.3-3.el9.x86_64 91/189

削除中 : libproxy-webkitgtk4-0.4.15-35.el9.x86_64 92/189

削除中 : gsettings-desktop-schemas-40.0-6.el9.x86_64 93/189

削除中 : webkit2gtk3-jsc-2.48.3-1.el9_6.x86_64 94/189

削除中 : adobe-source-code-pro-fonts-2.030.1.050-12.el9.1.noarch 95/189

削除中 : abattis-cantarell-fonts-0.301-4.el9.noarch 96/189

削除中 : libdrm-2.4.123-2.el9.x86_64 97/189

削除中 : libtheora-1:1.1.1-31.el9.x86_64 98/189

削除中 : libvorbis-1:1.3.7-5.el9.x86_64 99/189

削除中 : libwayland-cursor-1.21.0-1.el9.x86_64 100/189

削除中 : libgexiv2-0.12.3-1.el9.x86_64 101/189

削除中 : libgsf-1.14.47-5.el9.x86_64 102/189

削除中 : libSM-1.2.3-10.el9.x86_64 103/189

削除中 : flac-libs-1.3.3-10.el9_2.1.x86_64 104/189

削除中 : mkfontscale-1.2.1-3.el9.x86_64 105/189

削除中 : libXpm-3.5.13-10.el9.x86_64 106/189

削除中 : libnotify-0.7.9-8.el9.x86_64 107/189

削除中 : fuse-2.9.9-17.el9.x86_64 108/189

削除中 : gtk-update-icon-cache-3.24.31-5.el9.x86_64 109/189

削除中 : gdk-pixbuf2-2.42.6-4.el9_4.x86_64 110/189

削除中 : libXcomposite-0.4.5-7.el9.x86_64 111/189

削除中 : libX11-1.7.0-11.el9.x86_64 112/189

削除中 : libxcb-1.13.1-9.el9.x86_64 113/189

削除中 : colord-libs-1.4.5-6.el9_6.x86_64 114/189

削除中 : libxkbcommon-1.0.3-4.el9.x86_64 115/189

削除中 : openexr-libs-3.1.1-3.el9.x86_64 116/189

削除中 : libraqm-0.8.0-1.el9.x86_64 117/189

削除中 : ostree-libs-2025.1-1.el9.x86_64 118/189

削除中 : xkeyboard-config-2.33-2.el9.noarch 119/189

削除中 : libX11-common-1.7.0-11.el9.noarch 120/189

削除中 : fuse-common-3.10.2-9.el9.x86_64 121/189

削除中 : fonts-filesystem-1:2.0.5-7.el9.1.noarch 122/189

削除中 : adobe-mappings-cmap-20171205-12.el9.noarch 123/189

削除中 : adwaita-cursor-theme-40.1.1-3.el9.noarch 124/189

削除中 : xml-common-0.6.3-58.el9.noarch 125/189

警告: /etc/xml/catalog saved as /etc/xml/catalog.rpmsave

削除中 : poppler-data-0.4.9-9.el9.noarch 126/189

削除中 : osinfo-db-20250124-2.el9_6.alma.2.noarch 127/189

削除中 : mesa-filesystem-24.2.8-2.el9_6.alma.1.x86_64 128/189

削除中 : sound-theme-freedesktop-0.8-17.el9.noarch 129/189

scriptletの実行中: sound-theme-freedesktop-0.8-17.el9.noarch 129/189

削除中 : hicolor-icon-theme-0.17-13.el9.noarch 130/189

削除中 : adobe-mappings-pdf-20180407-10.el9.noarch 131/189

削除中 : flatpak-selinux-1.12.9-4.el9_6.noarch 132/189

scriptletの実行中: flatpak-selinux-1.12.9-4.el9_6.noarch 132/189

削除中 : composefs-libs-1.0.8-1.el9.x86_64 133/189

削除中 : fribidi-1.0.10-6.el9.2.x86_64 134/189

削除中 : imath-3.1.2-1.el9.x86_64 135/189

削除中 : lcms2-2.12-3.el9.x86_64 136/189

削除中 : libXau-1.0.9-8.el9.x86_64 137/189

削除中 : libjpeg-turbo-2.0.90-7.el9.x86_64 138/189

削除中 : libfontenc-1.1.3-17.el9.x86_64 139/189

削除中 : libogg-2:1.3.4-6.el9.x86_64 140/189

削除中 : libICE-1.0.10-8.el9.x86_64 141/189

削除中 : exiv2-libs-0.27.5-2.el9.x86_64 142/189

削除中 : libwayland-client-1.21.0-1.el9.x86_64 143/189

削除中 : libpciaccess-0.16-7.el9.x86_64 144/189

削除中 : libatomic-11.5.0-5.el9_5.alma.1.x86_64 145/189

削除中 : libproxy-0.4.15-35.el9.x86_64 146/189

削除中 : avahi-libs-0.8-22.el9_6.x86_64 147/189

削除中 : jbigkit-libs-2.1-23.el9.x86_64 148/189

削除中 : libwebp-1.2.0-8.el9_3.x86_64 149/189

削除中 : openjpeg2-2.4.0-8.el9.x86_64 150/189

削除中 : pixman-0.40.0-6.el9_3.x86_64 151/189

削除中 : libwayland-server-1.21.0-1.el9.x86_64 152/189

削除中 : libX11-xcb-1.7.0-11.el9.x86_64 153/189

削除中 : libxshmfence-1.3-10.el9.x86_64 154/189

削除中 : libglvnd-1:1.3.4-1.el9.x86_64 155/189

削除中 : graphene-1.10.6-2.el9.x86_64 156/189

削除中 : gstreamer1-1.22.12-3.el9.x86_64 157/189

削除中 : opus-1.3.1-10.el9.x86_64 158/189

削除中 : orc-0.4.31-8.el9.x86_64 159/189

削除中 : libwayland-egl-1.21.0-1.el9.x86_64 160/189

削除中 : libstemmer-0-18.585svn.el9.x86_64 161/189

削除中 : exempi-2.6.0-0.2.20211007gite23c213.el9.x86_64 162/189

削除中 : libexif-0.6.22-6.el9.x86_64 163/189

削除中 : giflib-5.2.1-9.el9.x86_64 164/189

削除中 : libiptcdata-1.0.5-10.el9.x86_64 165/189

削除中 : totem-pl-parser-3.26.6-2.el9.x86_64 166/189

scriptletの実行中: upower-0.99.13-2.el9.x86_64 167/189

Removed "/etc/systemd/system/graphical.target.wants/upower.service".

削除中 : upower-0.99.13-2.el9.x86_64 167/189

scriptletの実行中: upower-0.99.13-2.el9.x86_64 167/189

削除中 : atk-2.36.0-5.el9.x86_64 168/189

削除中 : gsm-1.0.19-6.el9.x86_64 169/189

削除中 : libasyncns-0.8-22.el9.x86_64 170/189

削除中 : ModemManager-glib-1.20.2-1.el9.x86_64 171/189

scriptletの実行中: rtkit-0.11-29.el9.x86_64 172/189

Removed "/etc/systemd/system/graphical.target.wants/rtkit-daemon.service".

削除中 : rtkit-0.11-29.el9.x86_64 172/189

scriptletの実行中: rtkit-0.11-29.el9.x86_64 172/189

削除中 : bluez-libs-5.72-4.el9.x86_64 173/189

削除中 : fdk-aac-free-2.0.0-8.el9.x86_64 174/189

削除中 : libldac-2.0.2.3-10.el9.x86_64 175/189

削除中 : libsbc-1.4-9.el9.x86_64 176/189

削除中 : webrtc-audio-processing-0.3.1-8.el9.x86_64 177/189

scriptletの実行中: low-memory-monitor-2.1-4.el9.x86_64 178/189

Removed "/etc/systemd/system/basic.target.wants/low-memory-monitor.service".

削除中 : low-memory-monitor-2.1-4.el9.x86_64 178/189

scriptletの実行中: low-memory-monitor-2.1-4.el9.x86_64 178/189

削除中 : libepoxy-1.5.5-4.el9.x86_64 179/189

scriptletの実行中: dconf-0.40.0-6.el9.x86_64 180/189

The unit files have no installation config (WantedBy=, RequiredBy=, Also=,

Alias= settings in the [Install] section, and DefaultInstance= for template

units). This means they are not meant to be enabled or disabled using systemctl.

Possible reasons for having this kind of units are:

~ A unit may be statically enabled by being symlinked from another unit's

.wants/ or .requires/ directory.

~ A unit's purpose may be to act as a helper for some other unit which has

a requirement dependency on it.

~ A unit may be started when needed via activation (socket, path, timer,

D-Bus, udev, scripted systemctl call, ...).

~ In case of template units, the unit is meant to be enabled with some

instance name specified.

削除中 : dconf-0.40.0-6.el9.x86_64 180/189

scriptletの実行中: dconf-0.40.0-6.el9.x86_64 180/189

削除中 : libijs-0.35-15.el9.x86_64 181/189

削除中 : jbig2dec-libs-0.19-7.el9.x86_64 182/189

削除中 : libpaper-1.1.28-4.el9.x86_64 183/189

削除中 : liblqr-1-0.4.2-19.el9.x86_64 184/189

削除中 : libwmf-lite-0.2.12-10.el9.x86_64 185/189

削除中 : xdg-dbus-proxy-0.1.3-1.el9.x86_64 186/189

削除中 : bubblewrap-0.4.1-8.el9_5.x86_64 187/189

削除中 : flatpak-session-helper-1.12.9-4.el9_6.x86_64 188/189

削除中 : p11-kit-server-0.25.3-3.el9_5.x86_64 189/189

scriptletの実行中: p11-kit-server-0.25.3-3.el9_5.x86_64 189/189

検証中 : ImageMagick-libs-6.9.13.25-1.el9.x86_64 1/189

検証中 : LibRaw-0.21.1-1.el9.x86_64 2/189

検証中 : ModemManager-glib-1.20.2-1.el9.x86_64 3/189

検証中 : abattis-cantarell-fonts-0.301-4.el9.noarch 4/189

検証中 : adobe-mappings-cmap-20171205-12.el9.noarch 5/189

検証中 : adobe-mappings-cmap-deprecated-20171205-12.el9.noarch 6/189

検証中 : adobe-mappings-pdf-20180407-10.el9.noarch 7/189

検証中 : adobe-source-code-pro-fonts-2.030.1.050-12.el9.1.noarch 8/189

検証中 : adwaita-cursor-theme-40.1.1-3.el9.noarch 9/189

検証中 : adwaita-icon-theme-40.1.1-3.el9.noarch 10/189

検証中 : at-spi2-atk-2.38.0-4.el9.x86_64 11/189

検証中 : at-spi2-core-2.40.3-1.el9.x86_64 12/189

検証中 : atk-2.36.0-5.el9.x86_64 13/189

検証中 : avahi-glib-0.8-22.el9_6.x86_64 14/189

検証中 : avahi-libs-0.8-22.el9_6.x86_64 15/189

検証中 : bluez-libs-5.72-4.el9.x86_64 16/189

検証中 : bubblewrap-0.4.1-8.el9_5.x86_64 17/189

検証中 : cairo-1.17.4-7.el9.x86_64 18/189

検証中 : cairo-gobject-1.17.4-7.el9.x86_64 19/189

検証中 : colord-libs-1.4.5-6.el9_6.x86_64 20/189

検証中 : composefs-libs-1.0.8-1.el9.x86_64 21/189

検証中 : cups-libs-1:2.3.3op2-33.el9.x86_64 22/189

検証中 : dconf-0.40.0-6.el9.x86_64 23/189

検証中 : dejavu-sans-fonts-2.37-18.el9.noarch 24/189

検証中 : exempi-2.6.0-0.2.20211007gite23c213.el9.x86_64 25/189

検証中 : exiv2-0.27.5-2.el9.x86_64 26/189

検証中 : exiv2-libs-0.27.5-2.el9.x86_64 27/189

検証中 : fdk-aac-free-2.0.0-8.el9.x86_64 28/189

検証中 : flac-libs-1.3.3-10.el9_2.1.x86_64 29/189

検証中 : flatpak-1.12.9-4.el9_6.x86_64 30/189

検証中 : flatpak-selinux-1.12.9-4.el9_6.noarch 31/189

検証中 : flatpak-session-helper-1.12.9-4.el9_6.x86_64 32/189

検証中 : fontconfig-2.14.0-2.el9_1.x86_64 33/189

検証中 : fonts-filesystem-1:2.0.5-7.el9.1.noarch 34/189

検証中 : fribidi-1.0.10-6.el9.2.x86_64 35/189

検証中 : fuse-2.9.9-17.el9.x86_64 36/189

検証中 : fuse-common-3.10.2-9.el9.x86_64 37/189

検証中 : gd-2.3.2-3.el9.x86_64 38/189

検証中 : gdk-pixbuf2-2.42.6-4.el9_4.x86_64 39/189

検証中 : gdk-pixbuf2-modules-2.42.6-4.el9_4.x86_64 40/189

検証中 : geoclue2-2.6.0-8.el9_6.1.x86_64 41/189

検証中 : giflib-5.2.1-9.el9.x86_64 42/189

検証中 : glib-networking-2.68.3-3.el9.x86_64 43/189

検証中 : google-droid-sans-fonts-20200215-11.el9.2.noarch 44/189

検証中 : graphene-1.10.6-2.el9.x86_64 45/189

検証中 : graphviz-2.44.0-26.el9.x86_64 46/189

検証中 : gsettings-desktop-schemas-40.0-6.el9.x86_64 47/189

検証中 : gsm-1.0.19-6.el9.x86_64 48/189

検証中 : gstreamer1-1.22.12-3.el9.x86_64 49/189

検証中 : gstreamer1-plugins-base-1.22.12-4.el9.x86_64 50/189

検証中 : gtk-update-icon-cache-3.24.31-5.el9.x86_64 51/189

検証中 : gtk2-2.24.33-8.el9.x86_64 52/189

検証中 : gtk3-3.24.31-5.el9.x86_64 53/189

検証中 : hicolor-icon-theme-0.17-13.el9.noarch 54/189

検証中 : imath-3.1.2-1.el9.x86_64 55/189

検証中 : iso-codes-4.6.0-3.el9.noarch 56/189

検証中 : jasper-libs-2.0.28-3.el9.x86_64 57/189

検証中 : jbig2dec-libs-0.19-7.el9.x86_64 58/189

検証中 : jbigkit-libs-2.1-23.el9.x86_64 59/189

検証中 : langpacks-core-font-en-3.0-16.el9.noarch 60/189

検証中 : lcms2-2.12-3.el9.x86_64 61/189

検証中 : libICE-1.0.10-8.el9.x86_64 62/189

検証中 : libSM-1.2.3-10.el9.x86_64 63/189

検証中 : libX11-1.7.0-11.el9.x86_64 64/189

検証中 : libX11-common-1.7.0-11.el9.noarch 65/189

検証中 : libX11-xcb-1.7.0-11.el9.x86_64 66/189

検証中 : libXau-1.0.9-8.el9.x86_64 67/189

検証中 : libXaw-1.0.13-19.el9.x86_64 68/189

検証中 : libXcomposite-0.4.5-7.el9.x86_64 69/189

検証中 : libXcursor-1.2.0-7.el9.x86_64 70/189

検証中 : libXdamage-1.1.5-7.el9.x86_64 71/189

検証中 : libXext-1.3.4-8.el9.x86_64 72/189

検証中 : libXfixes-5.0.3-16.el9.x86_64 73/189

検証中 : libXft-2.3.3-8.el9.x86_64 74/189

検証中 : libXi-1.7.10-8.el9.x86_64 75/189

検証中 : libXinerama-1.1.4-10.el9.x86_64 76/189

検証中 : libXmu-1.1.3-8.el9.x86_64 77/189

検証中 : libXpm-3.5.13-10.el9.x86_64 78/189

検証中 : libXrandr-1.5.2-8.el9.x86_64 79/189

検証中 : libXrender-0.9.10-16.el9.x86_64 80/189

検証中 : libXt-1.2.0-6.el9.x86_64 81/189

検証中 : libXtst-1.2.3-16.el9.x86_64 82/189

検証中 : libXv-1.0.11-16.el9.x86_64 83/189

検証中 : libXxf86vm-1.1.4-18.el9.x86_64 84/189

検証中 : libappstream-glib-0.7.18-5.el9_4.x86_64 85/189

検証中 : libasyncns-0.8-22.el9.x86_64 86/189

検証中 : libatomic-11.5.0-5.el9_5.alma.1.x86_64 87/189

検証中 : libcanberra-0.30-27.el9.x86_64 88/189

検証中 : libcanberra-gtk2-0.30-27.el9.x86_64 89/189

検証中 : libcanberra-gtk3-0.30-27.el9.x86_64 90/189

検証中 : libdrm-2.4.123-2.el9.x86_64 91/189

検証中 : libepoxy-1.5.5-4.el9.x86_64 92/189

検証中 : libexif-0.6.22-6.el9.x86_64 93/189

検証中 : libfontenc-1.1.3-17.el9.x86_64 94/189

検証中 : libgexiv2-0.12.3-1.el9.x86_64 95/189

検証中 : libglvnd-1:1.3.4-1.el9.x86_64 96/189

検証中 : libglvnd-egl-1:1.3.4-1.el9.x86_64 97/189

検証中 : libglvnd-glx-1:1.3.4-1.el9.x86_64 98/189

検証中 : libgs-9.54.0-19.el9_6.x86_64 99/189

検証中 : libgsf-1.14.47-5.el9.x86_64 100/189

検証中 : libgxps-0.3.2-3.el9.x86_64 101/189

検証中 : libijs-0.35-15.el9.x86_64 102/189

検証中 : libiptcdata-1.0.5-10.el9.x86_64 103/189

検証中 : libjpeg-turbo-2.0.90-7.el9.x86_64 104/189

検証中 : libldac-2.0.2.3-10.el9.x86_64 105/189

検証中 : liblqr-1-0.4.2-19.el9.x86_64 106/189

検証中 : libnotify-0.7.9-8.el9.x86_64 107/189

検証中 : libogg-2:1.3.4-6.el9.x86_64 108/189

検証中 : libosinfo-1.10.0-1.el9.x86_64 109/189

検証中 : libpaper-1.1.28-4.el9.x86_64 110/189

検証中 : libpciaccess-0.16-7.el9.x86_64 111/189

検証中 : libproxy-0.4.15-35.el9.x86_64 112/189

検証中 : libproxy-webkitgtk4-0.4.15-35.el9.x86_64 113/189

検証中 : libraqm-0.8.0-1.el9.x86_64 114/189

検証中 : librsvg2-2.50.7-3.el9.x86_64 115/189

検証中 : libsbc-1.4-9.el9.x86_64 116/189

検証中 : libsndfile-1.0.31-9.el9.x86_64 117/189

検証中 : libsoup-2.72.0-10.el9_6.2.x86_64 118/189

検証中 : libstemmer-0-18.585svn.el9.x86_64 119/189

検証中 : libtheora-1:1.1.1-31.el9.x86_64 120/189

検証中 : libtiff-4.4.0-13.el9.x86_64 121/189

検証中 : libtracker-sparql-3.1.2-3.el9_1.x86_64 122/189

検証中 : libvorbis-1:1.3.7-5.el9.x86_64 123/189

検証中 : libwayland-client-1.21.0-1.el9.x86_64 124/189

検証中 : libwayland-cursor-1.21.0-1.el9.x86_64 125/189

検証中 : libwayland-egl-1.21.0-1.el9.x86_64 126/189

検証中 : libwayland-server-1.21.0-1.el9.x86_64 127/189

検証中 : libwebp-1.2.0-8.el9_3.x86_64 128/189

検証中 : libwmf-lite-0.2.12-10.el9.x86_64 129/189

検証中 : libxcb-1.13.1-9.el9.x86_64 130/189

検証中 : libxkbcommon-1.0.3-4.el9.x86_64 131/189

検証中 : libxshmfence-1.3-10.el9.x86_64 132/189

検証中 : low-memory-monitor-2.1-4.el9.x86_64 133/189

検証中 : mesa-dri-drivers-24.2.8-2.el9_6.alma.1.x86_64 134/189

検証中 : mesa-filesystem-24.2.8-2.el9_6.alma.1.x86_64 135/189

検証中 : mesa-libEGL-24.2.8-2.el9_6.alma.1.x86_64 136/189

検証中 : mesa-libGL-24.2.8-2.el9_6.alma.1.x86_64 137/189

検証中 : mesa-libgbm-24.2.8-2.el9_6.alma.1.x86_64 138/189

検証中 : mesa-libglapi-24.2.8-2.el9_6.alma.1.x86_64 139/189

検証中 : mkfontscale-1.2.1-3.el9.x86_64 140/189

検証中 : openexr-libs-3.1.1-3.el9.x86_64 141/189

検証中 : openjpeg2-2.4.0-8.el9.x86_64 142/189

検証中 : opus-1.3.1-10.el9.x86_64 143/189

検証中 : orc-0.4.31-8.el9.x86_64 144/189

検証中 : osinfo-db-20250124-2.el9_6.alma.2.noarch 145/189

検証中 : osinfo-db-tools-1.10.0-1.el9.x86_64 146/189

検証中 : ostree-libs-2025.1-1.el9.x86_64 147/189

検証中 : p11-kit-server-0.25.3-3.el9_5.x86_64 148/189

検証中 : pango-1.48.7-3.el9.x86_64 149/189

検証中 : php-pecl-imagick-3.7.0-1.el9.x86_64 150/189

検証中 : pipewire-1.0.1-1.el9.x86_64 151/189

検証中 : pipewire-alsa-1.0.1-1.el9.x86_64 152/189

検証中 : pipewire-jack-audio-connection-kit-1.0.1-1.el9.x86_64 153/189

検証中 : pipewire-jack-audio-connection-kit-libs-1.0.1-1.el9.x86_64 154/189

検証中 : pipewire-libs-1.0.1-1.el9.x86_64 155/189

検証中 : pipewire-pulseaudio-1.0.1-1.el9.x86_64 156/189

検証中 : pixman-0.40.0-6.el9_3.x86_64 157/189

検証中 : poppler-21.01.0-21.el9.x86_64 158/189

検証中 : poppler-data-0.4.9-9.el9.noarch 159/189

検証中 : poppler-glib-21.01.0-21.el9.x86_64 160/189

検証中 : pulseaudio-libs-15.0-3.el9.x86_64 161/189

検証中 : rtkit-0.11-29.el9.x86_64 162/189

検証中 : sound-theme-freedesktop-0.8-17.el9.noarch 163/189

検証中 : totem-pl-parser-3.26.6-2.el9.x86_64 164/189

検証中 : tracker-3.1.2-3.el9_1.x86_64 165/189

検証中 : tracker-miners-3.1.2-4.el9_3.x86_64 166/189

検証中 : upower-0.99.13-2.el9.x86_64 167/189

検証中 : urw-base35-bookman-fonts-20200910-6.el9.noarch 168/189

検証中 : urw-base35-c059-fonts-20200910-6.el9.noarch 169/189

検証中 : urw-base35-d050000l-fonts-20200910-6.el9.noarch 170/189

検証中 : urw-base35-fonts-20200910-6.el9.noarch 171/189

検証中 : urw-base35-fonts-common-20200910-6.el9.noarch 172/189

検証中 : urw-base35-gothic-fonts-20200910-6.el9.noarch 173/189

検証中 : urw-base35-nimbus-mono-ps-fonts-20200910-6.el9.noarch 174/189

検証中 : urw-base35-nimbus-roman-fonts-20200910-6.el9.noarch 175/189

検証中 : urw-base35-nimbus-sans-fonts-20200910-6.el9.noarch 176/189

検証中 : urw-base35-p052-fonts-20200910-6.el9.noarch 177/189

検証中 : urw-base35-standard-symbols-ps-fonts-20200910-6.el9.noarch 178/189

検証中 : urw-base35-z003-fonts-20200910-6.el9.noarch 179/189

検証中 : webkit2gtk3-jsc-2.48.3-1.el9_6.x86_64 180/189

検証中 : webrtc-audio-processing-0.3.1-8.el9.x86_64 181/189

検証中 : wireplumber-0.4.14-1.el9.x86_64 182/189

検証中 : wireplumber-libs-0.4.14-1.el9.x86_64 183/189

検証中 : xdg-dbus-proxy-0.1.3-1.el9.x86_64 184/189

検証中 : xdg-desktop-portal-1.12.6-1.el9.x86_64 185/189

検証中 : xdg-desktop-portal-gtk-1.12.0-3.el9.x86_64 186/189

検証中 : xkeyboard-config-2.33-2.el9.noarch 187/189

検証中 : xml-common-0.6.3-58.el9.noarch 188/189

検証中 : xorg-x11-fonts-ISO8859-1-100dpi-7.5-33.el9.noarch 189/189

削除しました:

ImageMagick-libs-6.9.13.25-1.el9.x86_64

LibRaw-0.21.1-1.el9.x86_64

ModemManager-glib-1.20.2-1.el9.x86_64

abattis-cantarell-fonts-0.301-4.el9.noarch

adobe-mappings-cmap-20171205-12.el9.noarch

adobe-mappings-cmap-deprecated-20171205-12.el9.noarch

adobe-mappings-pdf-20180407-10.el9.noarch

adobe-source-code-pro-fonts-2.030.1.050-12.el9.1.noarch

adwaita-cursor-theme-40.1.1-3.el9.noarch

adwaita-icon-theme-40.1.1-3.el9.noarch

at-spi2-atk-2.38.0-4.el9.x86_64

at-spi2-core-2.40.3-1.el9.x86_64

atk-2.36.0-5.el9.x86_64

avahi-glib-0.8-22.el9_6.x86_64

avahi-libs-0.8-22.el9_6.x86_64

bluez-libs-5.72-4.el9.x86_64

bubblewrap-0.4.1-8.el9_5.x86_64

cairo-1.17.4-7.el9.x86_64

cairo-gobject-1.17.4-7.el9.x86_64

colord-libs-1.4.5-6.el9_6.x86_64

composefs-libs-1.0.8-1.el9.x86_64

cups-libs-1:2.3.3op2-33.el9.x86_64

dconf-0.40.0-6.el9.x86_64

dejavu-sans-fonts-2.37-18.el9.noarch

exempi-2.6.0-0.2.20211007gite23c213.el9.x86_64

exiv2-0.27.5-2.el9.x86_64

exiv2-libs-0.27.5-2.el9.x86_64

fdk-aac-free-2.0.0-8.el9.x86_64

flac-libs-1.3.3-10.el9_2.1.x86_64

flatpak-1.12.9-4.el9_6.x86_64

flatpak-selinux-1.12.9-4.el9_6.noarch

flatpak-session-helper-1.12.9-4.el9_6.x86_64

fontconfig-2.14.0-2.el9_1.x86_64

fonts-filesystem-1:2.0.5-7.el9.1.noarch

fribidi-1.0.10-6.el9.2.x86_64

fuse-2.9.9-17.el9.x86_64

fuse-common-3.10.2-9.el9.x86_64

gd-2.3.2-3.el9.x86_64

gdk-pixbuf2-2.42.6-4.el9_4.x86_64

gdk-pixbuf2-modules-2.42.6-4.el9_4.x86_64

geoclue2-2.6.0-8.el9_6.1.x86_64

giflib-5.2.1-9.el9.x86_64

glib-networking-2.68.3-3.el9.x86_64

google-droid-sans-fonts-20200215-11.el9.2.noarch

graphene-1.10.6-2.el9.x86_64

graphviz-2.44.0-26.el9.x86_64

gsettings-desktop-schemas-40.0-6.el9.x86_64

gsm-1.0.19-6.el9.x86_64

gstreamer1-1.22.12-3.el9.x86_64

gstreamer1-plugins-base-1.22.12-4.el9.x86_64

gtk-update-icon-cache-3.24.31-5.el9.x86_64

gtk2-2.24.33-8.el9.x86_64

gtk3-3.24.31-5.el9.x86_64

hicolor-icon-theme-0.17-13.el9.noarch

imath-3.1.2-1.el9.x86_64

iso-codes-4.6.0-3.el9.noarch

jasper-libs-2.0.28-3.el9.x86_64

jbig2dec-libs-0.19-7.el9.x86_64

jbigkit-libs-2.1-23.el9.x86_64

langpacks-core-font-en-3.0-16.el9.noarch

lcms2-2.12-3.el9.x86_64

libICE-1.0.10-8.el9.x86_64

libSM-1.2.3-10.el9.x86_64

libX11-1.7.0-11.el9.x86_64

libX11-common-1.7.0-11.el9.noarch

libX11-xcb-1.7.0-11.el9.x86_64

libXau-1.0.9-8.el9.x86_64

libXaw-1.0.13-19.el9.x86_64

libXcomposite-0.4.5-7.el9.x86_64

libXcursor-1.2.0-7.el9.x86_64

libXdamage-1.1.5-7.el9.x86_64

libXext-1.3.4-8.el9.x86_64

libXfixes-5.0.3-16.el9.x86_64

libXft-2.3.3-8.el9.x86_64

libXi-1.7.10-8.el9.x86_64

libXinerama-1.1.4-10.el9.x86_64

libXmu-1.1.3-8.el9.x86_64

libXpm-3.5.13-10.el9.x86_64

libXrandr-1.5.2-8.el9.x86_64

libXrender-0.9.10-16.el9.x86_64

libXt-1.2.0-6.el9.x86_64

libXtst-1.2.3-16.el9.x86_64

libXv-1.0.11-16.el9.x86_64

libXxf86vm-1.1.4-18.el9.x86_64

libappstream-glib-0.7.18-5.el9_4.x86_64

libasyncns-0.8-22.el9.x86_64

libatomic-11.5.0-5.el9_5.alma.1.x86_64

libcanberra-0.30-27.el9.x86_64

libcanberra-gtk2-0.30-27.el9.x86_64

libcanberra-gtk3-0.30-27.el9.x86_64

libdrm-2.4.123-2.el9.x86_64

libepoxy-1.5.5-4.el9.x86_64

libexif-0.6.22-6.el9.x86_64

libfontenc-1.1.3-17.el9.x86_64

libgexiv2-0.12.3-1.el9.x86_64

libglvnd-1:1.3.4-1.el9.x86_64

libglvnd-egl-1:1.3.4-1.el9.x86_64

libglvnd-glx-1:1.3.4-1.el9.x86_64

libgs-9.54.0-19.el9_6.x86_64

libgsf-1.14.47-5.el9.x86_64

libgxps-0.3.2-3.el9.x86_64

libijs-0.35-15.el9.x86_64

libiptcdata-1.0.5-10.el9.x86_64

libjpeg-turbo-2.0.90-7.el9.x86_64

libldac-2.0.2.3-10.el9.x86_64

liblqr-1-0.4.2-19.el9.x86_64

libnotify-0.7.9-8.el9.x86_64

libogg-2:1.3.4-6.el9.x86_64

libosinfo-1.10.0-1.el9.x86_64

libpaper-1.1.28-4.el9.x86_64

libpciaccess-0.16-7.el9.x86_64

libproxy-0.4.15-35.el9.x86_64

libproxy-webkitgtk4-0.4.15-35.el9.x86_64

libraqm-0.8.0-1.el9.x86_64

librsvg2-2.50.7-3.el9.x86_64

libsbc-1.4-9.el9.x86_64

libsndfile-1.0.31-9.el9.x86_64

libsoup-2.72.0-10.el9_6.2.x86_64

libstemmer-0-18.585svn.el9.x86_64

libtheora-1:1.1.1-31.el9.x86_64

libtiff-4.4.0-13.el9.x86_64

libtracker-sparql-3.1.2-3.el9_1.x86_64

libvorbis-1:1.3.7-5.el9.x86_64

libwayland-client-1.21.0-1.el9.x86_64

libwayland-cursor-1.21.0-1.el9.x86_64

libwayland-egl-1.21.0-1.el9.x86_64

libwayland-server-1.21.0-1.el9.x86_64

libwebp-1.2.0-8.el9_3.x86_64

libwmf-lite-0.2.12-10.el9.x86_64

libxcb-1.13.1-9.el9.x86_64

libxkbcommon-1.0.3-4.el9.x86_64

libxshmfence-1.3-10.el9.x86_64

low-memory-monitor-2.1-4.el9.x86_64

mesa-dri-drivers-24.2.8-2.el9_6.alma.1.x86_64

mesa-filesystem-24.2.8-2.el9_6.alma.1.x86_64

mesa-libEGL-24.2.8-2.el9_6.alma.1.x86_64

mesa-libGL-24.2.8-2.el9_6.alma.1.x86_64

mesa-libgbm-24.2.8-2.el9_6.alma.1.x86_64

mesa-libglapi-24.2.8-2.el9_6.alma.1.x86_64

mkfontscale-1.2.1-3.el9.x86_64

openexr-libs-3.1.1-3.el9.x86_64

openjpeg2-2.4.0-8.el9.x86_64

opus-1.3.1-10.el9.x86_64

orc-0.4.31-8.el9.x86_64

osinfo-db-20250124-2.el9_6.alma.2.noarch

osinfo-db-tools-1.10.0-1.el9.x86_64

ostree-libs-2025.1-1.el9.x86_64

p11-kit-server-0.25.3-3.el9_5.x86_64

pango-1.48.7-3.el9.x86_64

php-pecl-imagick-3.7.0-1.el9.x86_64

pipewire-1.0.1-1.el9.x86_64

pipewire-alsa-1.0.1-1.el9.x86_64

pipewire-jack-audio-connection-kit-1.0.1-1.el9.x86_64

pipewire-jack-audio-connection-kit-libs-1.0.1-1.el9.x86_64

pipewire-libs-1.0.1-1.el9.x86_64

pipewire-pulseaudio-1.0.1-1.el9.x86_64

pixman-0.40.0-6.el9_3.x86_64

poppler-21.01.0-21.el9.x86_64

poppler-data-0.4.9-9.el9.noarch

poppler-glib-21.01.0-21.el9.x86_64

pulseaudio-libs-15.0-3.el9.x86_64

rtkit-0.11-29.el9.x86_64

sound-theme-freedesktop-0.8-17.el9.noarch

totem-pl-parser-3.26.6-2.el9.x86_64

tracker-3.1.2-3.el9_1.x86_64

tracker-miners-3.1.2-4.el9_3.x86_64

upower-0.99.13-2.el9.x86_64

urw-base35-bookman-fonts-20200910-6.el9.noarch

urw-base35-c059-fonts-20200910-6.el9.noarch

urw-base35-d050000l-fonts-20200910-6.el9.noarch

urw-base35-fonts-20200910-6.el9.noarch

urw-base35-fonts-common-20200910-6.el9.noarch

urw-base35-gothic-fonts-20200910-6.el9.noarch

urw-base35-nimbus-mono-ps-fonts-20200910-6.el9.noarch

urw-base35-nimbus-roman-fonts-20200910-6.el9.noarch

urw-base35-nimbus-sans-fonts-20200910-6.el9.noarch

urw-base35-p052-fonts-20200910-6.el9.noarch

urw-base35-standard-symbols-ps-fonts-20200910-6.el9.noarch

urw-base35-z003-fonts-20200910-6.el9.noarch

webkit2gtk3-jsc-2.48.3-1.el9_6.x86_64

webrtc-audio-processing-0.3.1-8.el9.x86_64

wireplumber-0.4.14-1.el9.x86_64

wireplumber-libs-0.4.14-1.el9.x86_64

xdg-dbus-proxy-0.1.3-1.el9.x86_64

xdg-desktop-portal-1.12.6-1.el9.x86_64

xdg-desktop-portal-gtk-1.12.0-3.el9.x86_64

xkeyboard-config-2.33-2.el9.noarch

xml-common-0.6.3-58.el9.noarch

xorg-x11-fonts-ISO8859-1-100dpi-7.5-33.el9.noarch

完了しました!

$

After that, I ran dnf module switch-to php:8.3 again, and this time the switch succeeded.

$ sudo dnf module switch-to php:8.3

メタデータの期限切れの最終確認: 2:41:59 前の 2025年07月16日 07時18分53秒 に実施しました。

依存関係が解決しました。

===========================================================================================================

パッケージ Arch バージョン リポジトリー サイズ

===========================================================================================================

アップグレード:

php x86_64 8.3.19-1.module_el9.6.0+166+f262c21c appstream 7.8 k

php-cli x86_64 8.3.19-1.module_el9.6.0+166+f262c21c appstream 3.7 M

php-common x86_64 8.3.19-1.module_el9.6.0+166+f262c21c appstream 706 k

php-fpm x86_64 8.3.19-1.module_el9.6.0+166+f262c21c appstream 1.9 M

php-intl x86_64 8.3.19-1.module_el9.6.0+166+f262c21c appstream 167 k

php-mbstring x86_64 8.3.19-1.module_el9.6.0+166+f262c21c appstream 524 k

php-mysqlnd x86_64 8.3.19-1.module_el9.6.0+166+f262c21c appstream 144 k

php-opcache x86_64 8.3.19-1.module_el9.6.0+166+f262c21c appstream 353 k

php-pdo x86_64 8.3.19-1.module_el9.6.0+166+f262c21c appstream 86 k

php-pecl-zip x86_64 1.22.3-1.module_el9.6.0+151+5f31e576 appstream 57 k

php-xml x86_64 8.3.19-1.module_el9.6.0+166+f262c21c appstream 150 k

依存関係のインストール:

capstone x86_64 4.0.2-10.el9 appstream 766 k

モジュールストリームの有効化中:

php 8.3

トランザクションの概要

===========================================================================================================

インストール 1 パッケージ

アップグレード 11 パッケージ

ダウンロードサイズの合計: 8.5 M

これでよろしいですか? [y/N]: y

パッケージのダウンロード:

(1/12): php-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64.rpm 86 kB/s | 7.8 kB 00:00

(2/12): capstone-4.0.2-10.el9.x86_64.rpm 3.1 MB/s | 766 kB 00:00

(3/12): php-common-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64.rpm 2.8 MB/s | 706 kB 00:00

(4/12): php-intl-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64.rpm 2.0 MB/s | 167 kB 00:00

(5/12): php-mbstring-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64.rpm 2.5 MB/s | 524 kB 00:00

(6/12): php-mysqlnd-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64.rpm 2.2 MB/s | 144 kB 00:00

(7/12): php-fpm-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64.rpm 3.8 MB/s | 1.9 MB 00:00

(8/12): php-opcache-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64.rpm 2.7 MB/s | 353 kB 00:00

(9/12): php-pdo-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64.rpm 1.1 MB/s | 86 kB 00:00

(10/12): php-pecl-zip-1.22.3-1.module_el9.6.0+151+5f31e576.x86_64.rpm 1.5 MB/s | 57 kB 00:00

(11/12): php-xml-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64.rpm 1.5 MB/s | 150 kB 00:00

(12/12): php-cli-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64.rpm 4.0 MB/s | 3.7 MB 00:00

-----------------------------------------------------------------------------------------------------------

合計 5.7 MB/s | 8.5 MB 00:01

トランザクションを確認しています

トランザクションの確認に成功しました。

トランザクションをテストしています

トランザクションのテストに成功しました。

トランザクションを実行しています

準備中 : 1/1

アップグレード中 : php-common-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 1/23

アップグレード中 : php-pdo-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 2/23

アップグレード中 : php-cli-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 3/23

アップグレード中 : php-fpm-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 4/23

scriptletの実行中: php-fpm-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 4/23

アップグレード中 : php-mbstring-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 5/23

アップグレード中 : php-xml-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 6/23

インストール中 : capstone-4.0.2-10.el9.x86_64 7/23

アップグレード中 : php-opcache-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 8/23

アップグレード中 : php-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 9/23

アップグレード中 : php-mysqlnd-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 10/23

アップグレード中 : php-intl-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 11/23

アップグレード中 : php-pecl-zip-1.22.3-1.module_el9.6.0+151+5f31e576.x86_64 12/23

整理 : php-8.0.30-3.el9_6.x86_64 13/23

整理 : php-pecl-zip-1.19.2-6.el9.x86_64 14/23

整理 : php-cli-8.0.30-3.el9_6.x86_64 15/23

scriptletの実行中: php-fpm-8.0.30-3.el9_6.x86_64 16/23

整理 : php-fpm-8.0.30-3.el9_6.x86_64 16/23

整理 : php-mbstring-8.0.30-3.el9_6.x86_64 17/23

整理 : php-opcache-8.0.30-3.el9_6.x86_64 18/23

整理 : php-xml-8.0.30-3.el9_6.x86_64 19/23

整理 : php-mysqlnd-8.0.30-3.el9_6.x86_64 20/23

整理 : php-pdo-8.0.30-3.el9_6.x86_64 21/23

整理 : php-intl-8.0.30-3.el9_6.x86_64 22/23

整理 : php-common-8.0.30-3.el9_6.x86_64 23/23

scriptletの実行中: php-common-8.0.30-3.el9_6.x86_64 23/23

検証中 : capstone-4.0.2-10.el9.x86_64 1/23

検証中 : php-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 2/23

検証中 : php-8.0.30-3.el9_6.x86_64 3/23

検証中 : php-cli-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 4/23

検証中 : php-cli-8.0.30-3.el9_6.x86_64 5/23

検証中 : php-common-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 6/23

検証中 : php-common-8.0.30-3.el9_6.x86_64 7/23

検証中 : php-fpm-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 8/23

検証中 : php-fpm-8.0.30-3.el9_6.x86_64 9/23

検証中 : php-intl-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 10/23

検証中 : php-intl-8.0.30-3.el9_6.x86_64 11/23

検証中 : php-mbstring-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 12/23

検証中 : php-mbstring-8.0.30-3.el9_6.x86_64 13/23

検証中 : php-mysqlnd-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 14/23

検証中 : php-mysqlnd-8.0.30-3.el9_6.x86_64 15/23

検証中 : php-opcache-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 16/23

検証中 : php-opcache-8.0.30-3.el9_6.x86_64 17/23

検証中 : php-pdo-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 18/23

検証中 : php-pdo-8.0.30-3.el9_6.x86_64 19/23

検証中 : php-pecl-zip-1.22.3-1.module_el9.6.0+151+5f31e576.x86_64 20/23

検証中 : php-pecl-zip-1.19.2-6.el9.x86_64 21/23

検証中 : php-xml-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 22/23

検証中 : php-xml-8.0.30-3.el9_6.x86_64 23/23

アップグレード済み:

php-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64

php-cli-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64

php-common-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64

php-fpm-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64

php-intl-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64

php-mbstring-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64

php-mysqlnd-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64

php-opcache-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64

php-pdo-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64

php-pecl-zip-1.22.3-1.module_el9.6.0+151+5f31e576.x86_64

php-xml-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64

インストール済み:

capstone-4.0.2-10.el9.x86_64

完了しました!

$
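Removing packages in order to get the switch through can take out more than intended, so it may help to record the installed package names before starting and compare them afterwards. The following is only a minimal sketch: the function name `missing_after_switch` and the file names `before.txt` / `after.txt` are hypothetical and not part of the steps above.

```shell
#!/bin/sh
# Print package names listed in file $1 (before) that are absent from file $2 (after).
# Both files are expected to hold one package name per line.
missing_after_switch() {
    # -F: fixed strings, -x: whole-line match, -v: keep lines of $1 NOT found in $2
    # "|| true" keeps the exit status at 0 when nothing is missing.
    grep -Fxv -f "$2" "$1" || true
}

# Hypothetical usage on the server (not run here):
#   rpm -qa --qf '%{NAME}\n' | sort > before.txt   # before removing/switching
#   sudo dnf module switch-to php:8.3
#   rpm -qa --qf '%{NAME}\n' | sort > after.txt
#   missing_after_switch before.txt after.txt
```

Anything the function prints is a package that was installed before and is gone now, which makes it a candidate for reinstalling.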

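So, can the php-pecl-imagick package that was removed be installed again? I tried, but as expected it failed: the EPEL build requires the PHP 8.0 API (php(api) = 20200930-64), which is no longer available once the php:8.3 stream is enabled.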

$ sudo dnf install php-pecl-imagick

メタデータの期限切れの最終確認: 2:43:35 前の 2025年07月16日 07時18分53秒 に実施しました。

エラー:

問題: package php-pecl-imagick-3.7.0-1.el9.x86_64 from epel requires php(api) = 20200930-64, but none of the providers can be installed

- package php-pecl-imagick-3.7.0-1.el9.x86_64 from epel requires php(zend-abi) = 20200930-64, but none of the providers can be installed

- 競合するリクエスト

- package php-common-8.0.30-3.el9_6.x86_64 from appstream is filtered out by modular filtering

(インストール不可のパッケージをスキップするには、'--skip-broken' を追加してみてください または、'--nobest' を追加して、最適候補のパッケージのみを使用しないでください)

$
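The error above comes down to a mismatch between the php(api) version the EPEL package requires and the one the installed php-common provides. A rough sketch for checking this by hand is shown below. The function names are hypothetical, and the assumption that `rpm -q --provides php-common` prints a line of the form `php(api) = ...` should be verified on the actual system.

```shell
#!/bin/sh
# Read dnf error text on stdin and print the php(api) version it says is required.
required_php_api() {
    sed -n 's/.*requires php(api) = \([0-9]*-[0-9]*\).*/\1/p' | head -n 1
}

# Print the php(api) version provided by the installed php-common package
# (prints nothing if the package or the provide line is not found).
installed_php_api() {
    rpm -q --provides php-common 2>/dev/null \
        | sed -n 's/^php(api) = \([^ ]*\).*/\1/p' | head -n 1
}

# Compare the required value ($1) with the installed value ($2).
check_php_api() {
    if [ "$1" = "$2" ]; then
        echo "match"
    else
        echo "mismatch: need $1, have ${2:-none}"
    fi
}

# Hypothetical usage on the server (not run here):
#   need=$(sudo dnf install --assumeno php-pecl-imagick 2>&1 | required_php_api)
#   check_php_api "$need" "$(installed_php_api)"
```

If the result is a mismatch, the package has to be rebuilt for the new PHP version or obtained from a repository that carries a matching build.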

Addendum, 2025/07/18

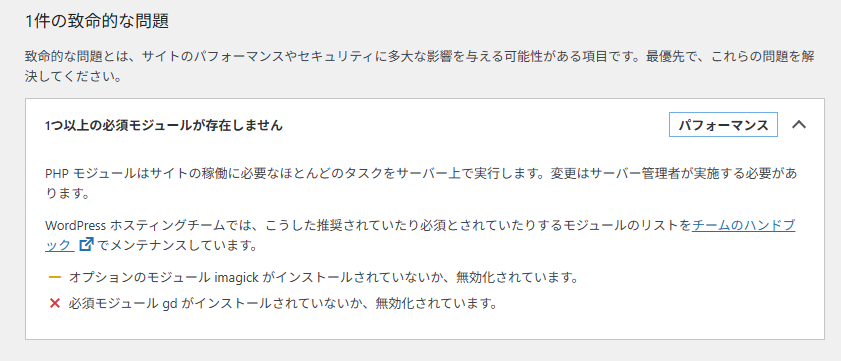

Huh? When I try to paste an image directly into the editor, I now get the message "The web server cannot generate responsive image sizes for this image. Convert it to JPEG or PNG before uploading."

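The cause was that the gd-related packages had been removed during this work.

What was actually needed was php-gd.

At first I thought gd alone would be enough and installed it with dnf install gd, but nothing changed. "dnf install gd php-gd" turned out to be the right answer.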

# dnf install gd

メタデータの期限切れの最終確認: 0:27:57 前の 2025年07月19日 18時14分35秒 に実施しました。

依存関係が解決しました。

==========================================================================================================================================

パッケージ アーキテクチャー バージョン リポジトリー サイズ

==========================================================================================================================================

インストール:

gd x86_64 2.3.2-3.el9 appstream 131 k

依存関係のインストール:

dejavu-sans-fonts noarch 2.37-18.el9 baseos 1.3 M

fontconfig x86_64 2.14.0-2.el9_1 appstream 274 k

fonts-filesystem noarch 1:2.0.5-7.el9.1 baseos 9.0 k

jbigkit-libs x86_64 2.1-23.el9 appstream 52 k

langpacks-core-font-en noarch 3.0-16.el9 appstream 9.4 k

libX11 x86_64 1.7.0-11.el9 appstream 646 k

libX11-common noarch 1.7.0-11.el9 appstream 151 k

libXau x86_64 1.0.9-8.el9 appstream 30 k

libXpm x86_64 3.5.13-10.el9 appstream 57 k

libjpeg-turbo x86_64 2.0.90-7.el9 appstream 174 k

libtiff x86_64 4.4.0-13.el9 appstream 197 k

libwebp x86_64 1.2.0-8.el9_3 appstream 276 k

libxcb x86_64 1.13.1-9.el9 appstream 225 k

xml-common noarch 0.6.3-58.el9 appstream 31 k

トランザクションの概要

==========================================================================================================================================

インストール 15 パッケージ

ダウンロードサイズの合計: 3.5 M

インストール後のサイズ: 13 M

これでよろしいですか? [y/N]: y

パッケージのダウンロード:

(1/15): jbigkit-libs-2.1-23.el9.x86_64.rpm 123 kB/s | 52 kB 00:00

(2/15): gd-2.3.2-3.el9.x86_64.rpm 309 kB/s | 131 kB 00:00

(3/15): langpacks-core-font-en-3.0-16.el9.noarch.rpm 257 kB/s | 9.4 kB 00:00

(4/15): fontconfig-2.14.0-2.el9_1.x86_64.rpm 555 kB/s | 274 kB 00:00

(5/15): libXau-1.0.9-8.el9.x86_64.rpm 389 kB/s | 30 kB 00:00

(6/15): libX11-common-1.7.0-11.el9.noarch.rpm 1.2 MB/s | 151 kB 00:00

(7/15): libX11-1.7.0-11.el9.x86_64.rpm 3.7 MB/s | 646 kB 00:00

(8/15): libXpm-3.5.13-10.el9.x86_64.rpm 1.3 MB/s | 57 kB 00:00

(9/15): libjpeg-turbo-2.0.90-7.el9.x86_64.rpm 1.9 MB/s | 174 kB 00:00

(10/15): libtiff-4.4.0-13.el9.x86_64.rpm 2.0 MB/s | 197 kB 00:00

(11/15): libwebp-1.2.0-8.el9_3.x86_64.rpm 2.8 MB/s | 276 kB 00:00

(12/15): xml-common-0.6.3-58.el9.noarch.rpm 715 kB/s | 31 kB 00:00

(13/15): libxcb-1.13.1-9.el9.x86_64.rpm 2.4 MB/s | 225 kB 00:00

(14/15): fonts-filesystem-2.0.5-7.el9.1.noarch.rpm 136 kB/s | 9.0 kB 00:00

(15/15): dejavu-sans-fonts-2.37-18.el9.noarch.rpm 4.4 MB/s | 1.3 MB 00:00

------------------------------------------------------------------------------------------------------------------------------------------

合計 1.5 MB/s | 3.5 MB 00:02

トランザクションを確認しています

トランザクションの確認に成功しました。

トランザクションをテストしています

トランザクションのテストに成功しました。

トランザクションを実行しています

準備中 : 1/1

インストール中 : fonts-filesystem-1:2.0.5-7.el9.1.noarch 1/15

インストール中 : dejavu-sans-fonts-2.37-18.el9.noarch 2/15

インストール中 : libwebp-1.2.0-8.el9_3.x86_64 3/15

インストール中 : libjpeg-turbo-2.0.90-7.el9.x86_64 4/15

インストール中 : langpacks-core-font-en-3.0-16.el9.noarch 5/15

scriptletの実行中: xml-common-0.6.3-58.el9.noarch 6/15

インストール中 : xml-common-0.6.3-58.el9.noarch 6/15

インストール中 : fontconfig-2.14.0-2.el9_1.x86_64 7/15

scriptletの実行中: fontconfig-2.14.0-2.el9_1.x86_64 7/15

インストール中 : libXau-1.0.9-8.el9.x86_64 8/15

インストール中 : libxcb-1.13.1-9.el9.x86_64 9/15

インストール中 : libX11-common-1.7.0-11.el9.noarch 10/15

インストール中 : libX11-1.7.0-11.el9.x86_64 11/15

インストール中 : libXpm-3.5.13-10.el9.x86_64 12/15

インストール中 : jbigkit-libs-2.1-23.el9.x86_64 13/15

インストール中 : libtiff-4.4.0-13.el9.x86_64 14/15

インストール中 : gd-2.3.2-3.el9.x86_64 15/15

scriptletの実行中: fontconfig-2.14.0-2.el9_1.x86_64 15/15

scriptletの実行中: gd-2.3.2-3.el9.x86_64 15/15

検証中 : fontconfig-2.14.0-2.el9_1.x86_64 1/15

検証中 : gd-2.3.2-3.el9.x86_64 2/15

検証中 : jbigkit-libs-2.1-23.el9.x86_64 3/15

検証中 : langpacks-core-font-en-3.0-16.el9.noarch 4/15

検証中 : libX11-1.7.0-11.el9.x86_64 5/15

検証中 : libX11-common-1.7.0-11.el9.noarch 6/15

検証中 : libXau-1.0.9-8.el9.x86_64 7/15

検証中 : libXpm-3.5.13-10.el9.x86_64 8/15

検証中 : libjpeg-turbo-2.0.90-7.el9.x86_64 9/15

検証中 : libtiff-4.4.0-13.el9.x86_64 10/15

検証中 : libwebp-1.2.0-8.el9_3.x86_64 11/15

検証中 : libxcb-1.13.1-9.el9.x86_64 12/15

検証中 : xml-common-0.6.3-58.el9.noarch 13/15

検証中 : dejavu-sans-fonts-2.37-18.el9.noarch 14/15

検証中 : fonts-filesystem-1:2.0.5-7.el9.1.noarch 15/15

インストール済み:

dejavu-sans-fonts-2.37-18.el9.noarch fontconfig-2.14.0-2.el9_1.x86_64 fonts-filesystem-1:2.0.5-7.el9.1.noarch

gd-2.3.2-3.el9.x86_64 jbigkit-libs-2.1-23.el9.x86_64 langpacks-core-font-en-3.0-16.el9.noarch

libX11-1.7.0-11.el9.x86_64 libX11-common-1.7.0-11.el9.noarch libXau-1.0.9-8.el9.x86_64

libXpm-3.5.13-10.el9.x86_64 libjpeg-turbo-2.0.90-7.el9.x86_64 libtiff-4.4.0-13.el9.x86_64

libwebp-1.2.0-8.el9_3.x86_64 libxcb-1.13.1-9.el9.x86_64 xml-common-0.6.3-58.el9.noarch

完了しました!

# dnf install php-gd

メタデータの期限切れの最終確認: 0:29:07 前の 2025年07月19日 18時14分35秒 に実施しました。

依存関係が解決しました。

==========================================================================================================================================

パッケージ アーキテクチャー バージョン リポジトリー サイズ

==========================================================================================================================================

インストール:

php-gd x86_64 8.3.19-1.module_el9.6.0+166+f262c21c appstream 40 k

トランザクションの概要

==========================================================================================================================================

インストール 1 パッケージ

ダウンロードサイズの合計: 40 k

インストール後のサイズ: 113 k

これでよろしいですか? [y/N]: y

パッケージのダウンロード:

php-gd-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64.rpm 361 kB/s | 40 kB 00:00

------------------------------------------------------------------------------------------------------------------------------------------

合計 55 kB/s | 40 kB 00:00

トランザクションを確認しています

トランザクションの確認に成功しました。

トランザクションをテストしています

トランザクションのテストに成功しました。

トランザクションを実行しています

準備中 : 1/1

インストール中 : php-gd-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 1/1

scriptletの実行中: php-gd-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 1/1

検証中 : php-gd-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64 1/1

インストール済み:

php-gd-8.3.19-1.module_el9.6.0+166+f262c21c.x86_64

完了しました!

#
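To confirm that PHP really sees the extension after installing php-gd, the module list from `php -m` can be checked. This is a minimal sketch; the helper name `has_php_module` is hypothetical. Restarting php-fpm afterwards is also an assumption on my part (php-fpm appears in the upgrade transaction above, but the restart itself is not part of the original steps).

```shell
#!/bin/sh
# Return success if module name $1 appears as a whole line
# (case-insensitive) in a `php -m` style list read from stdin.
has_php_module() {
    grep -qix -- "$1"
}

# Only run the check when a php CLI is actually available.
if command -v php >/dev/null 2>&1; then
    if php -m | has_php_module gd; then
        echo "gd: loaded"
    else
        echo "gd: not loaded (try: sudo dnf install php-gd)"
    fi
fi

# Assumption: when WordPress is served through php-fpm, the service needs a
# restart before the web side picks up the new extension:
#   sudo systemctl restart php-fpm
```

The CLI and php-fpm read the same extension configuration on a default install, but checking the WordPress Site Health screen as well is the safer confirmation.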