There's plenty of talk about running Stable Diffusion on NVIDIA or AMD GPUs, but I couldn't tell whether it works on the integrated GPU part of an AMD Ryzen with on-chip graphics, so I tried it.

1) Preparation

Since this is a Windows 11 environment, I installed Python and Git with the winget command.

> winget install Python.Python.3.10

> winget install Git.Git

However, what got installed was Python 3.10.11, while the "Automatic Installation on Windows" section of the original Stable Diffusion web UI says "Install Python 3.10.6 (Newer version of Python does not support torch), checking 'Add Python to PATH'." Would that really be a problem? → It turned out to be fine.
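Why 3.10.11 is fine: the PyTorch wheels that pip later downloads (for example `torch-2.0.0-cp310-cp310-win_amd64.whl` in the DirectML log below) carry a `cp310` tag, and CPython wheel tags encode only the major and minor version. A minimal sketch that compares a wheel's Python tag with the running interpreter; it only looks at the filename tag and ignores ABI and platform checks that pip also performs.

```python
import sys


def wheel_python_tag(filename: str) -> str:
    # Wheel names follow {name}-{version}(-{build})?-{python}-{abi}-{platform}.whl,
    # so the Python tag is always the third dash-separated field from the end.
    stem = filename.removesuffix(".whl")
    return stem.split("-")[-3]


def tag_for(version_info) -> str:
    # CPython tags use only major and minor: 3.10.6 and 3.10.11 both map to "cp310".
    return f"cp{version_info[0]}{version_info[1]}"


if __name__ == "__main__":
    wheel = "torch-2.0.0-cp310-cp310-win_amd64.whl"
    print(wheel_python_tag(wheel), "vs running interpreter", tag_for(sys.version_info))
```

Run it with the Python that winget installed; if both tags read `cp310`, the patch level does not matter for these wheels.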

2) SD.Next: failed

There's Stable Diffusion web UI, which sets up a lot of things automatically, and SD.Next, which adds various features on top of it, among others.

I figured I'd just try it, but it ended up running on the CPU (as the documentation says, AMD on Windows is not supported).

Update 2023/09/14: SD.Next now supports DirectML too, so it can be used.

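Update 2023/11/24: When I run SD.Next with DirectML on a Ryzen 5600G, it stalls partway through processing fairly often, so it's not great. Probably it just doesn't cope well with so little GPU memory.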

PS D:\> mkdir sdnext

Directory: D:\

Mode LastWriteTime Length Name

---- ------------- ------ ----

d----- 2023/07/11 15:23 sdnext

PS D:\> cd sdnext

PS D:\sdnext> git clone https://github.com/vladmandic/automatic

Cloning into 'automatic'...

remote: Enumerating objects: 27653, done.

remote: Counting objects: 100% (446/446), done.

remote: Compressing objects: 100% (206/206), done.

remote: Total 27653 (delta 300), reused 343 (delta 238), pack-reused 27207

Receiving objects: 100% (27653/27653), 34.77 MiB | 9.98 MiB/s, done.

Resolving deltas: 100% (19681/19681), done.

PS D:\sdnext> dir

Directory: D:\sdnext

Mode LastWriteTime Length Name

---- ------------- ------ ----

d----- 2023/07/11 15:23 automatic

PS D:\sdnext> cd .\automatic\

PS D:\sdnext\automatic> dir

Directory: D:\sdnext\automatic

Mode LastWriteTime Length Name

---- ------------- ------ ----

d----- 2023/07/11 15:23 .github

d----- 2023/07/11 15:23 .vscode

d----- 2023/07/11 15:23 cli

d----- 2023/07/11 15:23 configs

d----- 2023/07/11 15:23 extensions

d----- 2023/07/11 15:23 extensions-builtin

d----- 2023/07/11 15:23 html

d----- 2023/07/11 15:23 javascript

d----- 2023/07/11 15:23 models

d----- 2023/07/11 15:23 modules

d----- 2023/07/11 15:23 repositories

d----- 2023/07/11 15:23 scripts

d----- 2023/07/11 15:23 train

d----- 2023/07/11 15:23 wiki

-a---- 2023/07/11 15:23 53 .eslintignore

-a---- 2023/07/11 15:23 3184 .eslintrc.json

-a---- 2023/07/11 15:23 800 .gitignore

-a---- 2023/07/11 15:23 2135 .gitmodules

-a---- 2023/07/11 15:23 98 .markdownlint.json

-a---- 2023/07/11 15:23 5949 .pylintrc

-a---- 2023/07/11 15:23 26192 CHANGELOG.md

-a---- 2023/07/11 15:23 37405 installer.py

-a---- 2023/07/11 15:23 7610 launch.py

-a---- 2023/07/11 15:23 35240 LICENSE.txt

-a---- 2023/07/11 15:23 1255 pyproject.toml

-a---- 2023/07/11 15:23 7897 README.md

-a---- 2023/07/11 15:23 832 requirements.txt

-a---- 2023/07/11 15:23 1254 SECURITY.md

-a---- 2023/07/11 15:23 2153 TODO.md

-a---- 2023/07/11 15:23 2135 webui.bat

-a---- 2023/07/11 15:23 13616 webui.py

-a---- 2023/07/11 15:23 2515 webui.sh

PS D:\sdnext\automatic>

PS D:\sdnext\automatic> .\webui.bat

Creating venv in directory D:\sdnext\automatic\venv using python "C:\Users\OSAKANATARO\AppData\Local\Programs\Python\Python310\python.exe"

Using VENV: D:\sdnext\automatic\venv

15:25:01-666542 INFO Starting SD.Next

15:25:01-669541 INFO Python 3.10.11 on Windows

15:25:01-721480 INFO Version: 6466d3cb Mon Jul 10 17:20:29 2023 -0400

15:25:01-789179 INFO Using CPU-only Torch

15:25:01-791196 INFO Installing package: torch torchvision

15:28:27-814772 INFO Torch 2.0.1+cpu

15:28:27-816772 INFO Installing package: tensorflow==2.12.0

15:29:30-011443 INFO Verifying requirements

15:29:30-018087 INFO Installing package: addict

15:29:31-123764 INFO Installing package: aenum

15:29:32-305603 INFO Installing package: aiohttp

15:29:34-971224 INFO Installing package: anyio

15:29:36-493994 INFO Installing package: appdirs

15:29:37-534966 INFO Installing package: astunparse

15:29:38-564191 INFO Installing package: bitsandbytes

15:29:50-921879 INFO Installing package: blendmodes

15:29:53-458099 INFO Installing package: clean-fid

15:30:03-300722 INFO Installing package: easydev

15:30:06-960355 INFO Installing package: extcolors

15:30:08-507545 INFO Installing package: facexlib

15:30:33-800356 INFO Installing package: filetype

15:30:35-194993 INFO Installing package: future

15:30:42-170599 INFO Installing package: gdown

15:30:43-999361 INFO Installing package: gfpgan

15:31:07-467514 INFO Installing package: GitPython

15:31:09-671195 INFO Installing package: httpcore

15:31:11-496157 INFO Installing package: inflection

15:31:12-879955 INFO Installing package: jsonmerge

15:31:16-636081 INFO Installing package: kornia

15:31:20-478210 INFO Installing package: lark

15:31:22-125443 INFO Installing package: lmdb

15:31:23-437953 INFO Installing package: lpips

15:31:24-867851 INFO Installing package: omegaconf

15:31:29-258237 INFO Installing package: open-clip-torch

15:31:36-741714 INFO Installing package: opencv-contrib-python

15:31:43-728945 INFO Installing package: piexif

15:31:45-357791 INFO Installing package: psutil

15:31:47-282924 INFO Installing package: pyyaml

15:31:48-716454 INFO Installing package: realesrgan

15:31:50-511931 INFO Installing package: resize-right

15:31:52-093682 INFO Installing package: rich

15:31:53-644532 INFO Installing package: safetensors

15:31:55-125015 INFO Installing package: scipy

15:31:56-653853 INFO Installing package: tb_nightly

15:31:58-439541 INFO Installing package: toml

15:32:00-133340 INFO Installing package: torchdiffeq

15:32:01-912273 INFO Installing package: torchsde

15:32:04-240460 INFO Installing package: voluptuous

15:32:05-884949 INFO Installing package: yapf

15:32:07-385998 INFO Installing package: scikit-image

15:32:08-929379 INFO Installing package: basicsr

15:32:10-544987 INFO Installing package: compel

15:32:41-171247 INFO Installing package: typing-extensions==4.7.1

15:32:43-013058 INFO Installing package: antlr4-python3-runtime==4.9.3

15:32:45-010443 INFO Installing package: pydantic==1.10.11

15:32:47-661255 INFO Installing package: requests==2.31.0

15:32:49-665092 INFO Installing package: tqdm==4.65.0

15:32:51-622194 INFO Installing package: accelerate==0.20.3

15:32:54-560549 INFO Installing package: opencv-python==4.7.0.72

15:33:01-124008 INFO Installing package: diffusers==0.18.1

15:33:03-084405 INFO Installing package: einops==0.4.1

15:33:05-232281 INFO Installing package: gradio==3.32.0

15:33:31-795569 INFO Installing package: numexpr==2.8.4

15:33:34-212078 INFO Installing package: numpy==1.23.5

15:33:36-321166 INFO Installing package: numba==0.57.0

15:33:45-795266 INFO Installing package: pandas==1.5.3

15:34:02-667504 INFO Installing package: protobuf==3.20.3

15:34:04-879519 INFO Installing package: pytorch_lightning==1.9.4

15:34:11-965173 INFO Installing package: transformers==4.30.2

15:34:14-260230 INFO Installing package: tomesd==0.1.3

15:34:16-574323 INFO Installing package: urllib3==1.26.15

15:34:19-258844 INFO Installing package: Pillow==9.5.0

15:34:21-521566 INFO Installing package: timm==0.6.13

15:34:25-728405 INFO Verifying packages

15:34:25-729402 INFO Installing package: git+https://github.com/openai/CLIP.git

15:34:32-108450 INFO Installing package: git+https://github.com/patrickvonplaten/invisible-watermark.git@remove_onnxruntime_depedency

15:34:40-136600 INFO Installing package: onnxruntime==1.15.1

15:34:45-579550 INFO Verifying repositories

15:34:45-581057 INFO Cloning repository: https://github.com/Stability-AI/stablediffusion.git

15:34:54-267186 INFO Cloning repository: https://github.com/CompVis/taming-transformers.git

15:35:39-098788 INFO Cloning repository: https://github.com/crowsonkb/k-diffusion.git

15:35:40-207126 INFO Cloning repository: https://github.com/sczhou/CodeFormer.git

15:35:43-303813 INFO Cloning repository: https://github.com/salesforce/BLIP.git

15:35:45-355666 INFO Verifying submodules

15:36:50-587204 INFO Extension installed packages: clip-interrogator-ext ['clip-interrogator==0.6.0']

15:36:57-547973 INFO Extension installed packages: sd-webui-agent-scheduler ['SQLAlchemy==2.0.18',

'greenlet==2.0.2']

15:37:26-237541 INFO Extension installed packages: sd-webui-controlnet ['pywin32==306', 'lxml==4.9.3',

'reportlab==4.0.4', 'pycparser==2.21', 'portalocker==2.7.0', 'cffi==1.15.1', 'svglib==1.5.1',

'tinycss2==1.2.1', 'mediapipe==0.10.2', 'tabulate==0.9.0', 'cssselect2==0.7.0',

'webencodings==0.5.1', 'sounddevice==0.4.6', 'iopath==0.1.9', 'yacs==0.1.8',

'fvcore==0.1.5.post20221221']

15:37:41-631094 INFO Extension installed packages: stable-diffusion-webui-images-browser ['Send2Trash==1.8.2',

'image-reward==1.5', 'fairscale==0.4.13']

15:37:48-683136 INFO Extension installed packages: stable-diffusion-webui-rembg ['rembg==2.0.38', 'pooch==1.7.0',

'PyMatting==1.1.8']

15:37:48-781391 INFO Extensions enabled: ['a1111-sd-webui-lycoris', 'clip-interrogator-ext', 'LDSR', 'Lora',

'multidiffusion-upscaler-for-automatic1111', 'ScuNET', 'sd-dynamic-thresholding',

'sd-extension-system-info', 'sd-webui-agent-scheduler', 'sd-webui-controlnet',

'stable-diffusion-webui-images-browser', 'stable-diffusion-webui-rembg', 'SwinIR']

15:37:48-783895 INFO Verifying packages

15:37:48-845754 INFO Extension preload: 0.0s D:\sdnext\automatic\extensions-builtin

15:37:48-846767 INFO Extension preload: 0.0s D:\sdnext\automatic\extensions

15:37:48-882113 INFO Server arguments: []

15:37:56-683469 INFO Pipeline: Backend.ORIGINAL

No module 'xformers'. Proceeding without it.

15:38:01-166704 INFO Libraries loaded

15:38:01-168718 INFO Using data path: D:\sdnext\automatic

15:38:01-171245 INFO Available VAEs: D:\sdnext\automatic\models\VAE 0

15:38:01-174758 INFO Available models: D:\sdnext\automatic\models\Stable-diffusion 0

Download the default model? (y/N) y

Downloading: "https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned-emaonly.safetensors" to D:\sdnext\automatic\models\Stable-diffusion\v1-5-pruned-emaonly.safetensors

100.0%

15:45:08-083310 INFO ControlNet v1.1.232

ControlNet v1.1.232

ControlNet preprocessor location: D:\sdnext\automatic\extensions-builtin\sd-webui-controlnet\annotator\downloads

15:45:08-271984 INFO ControlNet v1.1.232

ControlNet v1.1.232

Image Browser: ImageReward is not installed, cannot be used.

Image Browser: Creating database

Image Browser: Database created

15:45:08-497758 ERROR Module load: D:\sdnext\automatic\extensions-builtin\stable-diffusion-webui-rembg\scripts\api.py: ImportError

Module load: D:\sdnext\automatic\extensions-builtin\stable-diffusion-webui-rembg\scripts\api.py: ImportError

╭───────────────────────────────────────── Traceback (most recent call last) ──────────────────────────────────────────╮

│ D:\sdnext\automatic\modules\script_loading.py:13 in load_module │

│ │

│ 12 │ try: │

│ ❱ 13 │ │ module_spec.loader.exec_module(module) │

│ 14 │ except Exception as e: │

│ in exec_module:883 │

│ │

│ ... 7 frames hidden ... │

│ │

│ D:\sdnext\automatic\venv\lib\site-packages\numba\__init__.py:55 in <module> │

│ │

│ 54 │

│ ❱ 55 _ensure_critical_deps() │

│ 56 # END DO NOT MOVE │

│ │

│ D:\sdnext\automatic\venv\lib\site-packages\numba\__init__.py:42 in _ensure_critical_deps │

│ │

│ 41 │ elif numpy_version > (1, 24): │

│ ❱ 42 │ │ raise ImportError("Numba needs NumPy 1.24 or less") │

│ 43 │ try: │

╰──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

ImportError: Numba needs NumPy 1.24 or less

15:45:08-546905 ERROR Module load: D:\sdnext\automatic\extensions-builtin\stable-diffusion-webui-rembg\scripts\postprocessing_rembg.py: ImportError

Module load: D:\sdnext\automatic\extensions-builtin\stable-diffusion-webui-rembg\scripts\postprocessing_rembg.py: ImportError

╭───────────────────────────────────────── Traceback (most recent call last) ──────────────────────────────────────────╮

│ D:\sdnext\automatic\modules\script_loading.py:13 in load_module │

│ │

│ 12 │ try: │

│ ❱ 13 │ │ module_spec.loader.exec_module(module) │

│ 14 │ except Exception as e: │

│ in exec_module:883 │

│ │

│ ... 7 frames hidden ... │

│ │

│ D:\sdnext\automatic\venv\lib\site-packages\numba\__init__.py:55 in <module> │

│ │

│ 54 │

│ ❱ 55 _ensure_critical_deps() │

│ 56 # END DO NOT MOVE │

│ │

│ D:\sdnext\automatic\venv\lib\site-packages\numba\__init__.py:42 in _ensure_critical_deps │

│ │

│ 41 │ elif numpy_version > (1, 24): │

│ ❱ 42 │ │ raise ImportError("Numba needs NumPy 1.24 or less") │

│ 43 │ try: │

╰──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

ImportError: Numba needs NumPy 1.24 or less

15:45:08-867572 INFO Loading UI theme: name=black-orange style=Auto

Running on local URL: http://127.0.0.1:7860

15:45:11-480274 INFO Local URL: http://127.0.0.1:7860/

15:45:11-482798 INFO Initializing middleware

15:45:11-602837 INFO [AgentScheduler] Task queue is empty

15:45:11-606823 INFO [AgentScheduler] Registering APIs

15:45:11-709704 INFO Model metadata saved: D:\sdnext\automatic\metadata.json 1

Loading weights: D:\sdnext\automatic\models\Stable-diffusion\v1-5-pruned-emaonly.safetensors ━━━━━━━━━ 0.0/4.3 -:--:--

GB

15:45:12-501405 WARNING Torch FP16 test failed: Forcing FP32 operations: "LayerNormKernelImpl" not implemented for 'Half'

15:45:12-503413 INFO Torch override dtype: no-half set

15:45:12-504408 INFO Torch override VAE dtype: no-half set

15:45:12-505409 INFO Setting Torch parameters: dtype=torch.float32 vae=torch.float32 unet=torch.float32

LatentDiffusion: Running in eps-prediction mode

DiffusionWrapper has 859.52 M params.

Downloading (…)olve/main/vocab.json: 100%|██████████████████████████████████████████| 961k/961k [00:00<00:00, 1.61MB/s]

Downloading (…)olve/main/merges.txt: 100%|██████████████████████████████████████████| 525k/525k [00:00<00:00, 1.16MB/s]

Downloading (…)cial_tokens_map.json: 100%|████████████████████████████████████████████████████| 389/389 [00:00<?, ?B/s]

Downloading (…)okenizer_config.json: 100%|████████████████████████████████████████████████████| 905/905 [00:00<?, ?B/s]

Downloading (…)lve/main/config.json: 100%|████████████████████████████████████████████████| 4.52k/4.52k [00:00<?, ?B/s]

Calculating model hash: D:\sdnext\automatic\models\Stable-diffusion\v1-5-pruned-emaonly.safetensors ━━━━━━ 4.3/4… 0:00:…

GB

15:45:20-045323 INFO Applying Doggettx cross attention optimization

15:45:20-051844 INFO Embeddings: loaded=0 skipped=0

15:45:20-057917 INFO Model loaded in 8.1s (load=0.2s config=0.4s create=3.5s hash=3.2s apply=0.8s)

15:45:20-301777 INFO Model load finished: {'ram': {'used': 8.55, 'total': 31.3}} cached=0

15:45:20-859838 INFO Startup time: 452.0s (torch=4.3s gradio=2.4s libraries=5.5s models=424.0s codeformer=0.2s

scripts=3.3s onchange=0.2s ui-txt2img=0.1s ui-img2img=0.1s ui-settings=0.4s ui-extensions=1.7s

ui-defaults=0.1s launch=0.2s app-started=0.2s checkpoint=9.2s)

Since errors had appeared, I stopped it and launched it once more. The errors from before were gone this time, but it stalled.

PS D:\sdnext\automatic> .\webui.bat

Using VENV: D:\sdnext\automatic\venv

20:46:25-099403 INFO Starting SD.Next

20:46:25-107728 INFO Python 3.10.11 on Windows

20:46:25-168108 INFO Version: 6466d3cb Mon Jul 10 17:20:29 2023 -0400

20:46:25-610382 INFO Latest published version: a844a83d9daa9987295932c0db391ec7be5f2d32 2023-07-11T08:00:45Z

20:46:25-634606 INFO Using CPU-only Torch

20:46:28-219427 INFO Torch 2.0.1+cpu

20:46:28-220614 INFO Installing package: tensorflow==2.12.0

20:47:05-861641 INFO Enabled extensions-builtin: ['a1111-sd-webui-lycoris', 'clip-interrogator-ext', 'LDSR', 'Lora',

'multidiffusion-upscaler-for-automatic1111', 'ScuNET', 'sd-dynamic-thresholding',

'sd-extension-system-info', 'sd-webui-agent-scheduler', 'sd-webui-controlnet',

'stable-diffusion-webui-images-browser', 'stable-diffusion-webui-rembg', 'SwinIR']

20:47:05-870117 INFO Enabled extensions: []

20:47:05-872302 INFO Verifying requirements

20:47:05-889503 INFO Verifying packages

20:47:05-891503 INFO Verifying repositories

20:47:11-387347 INFO Verifying submodules

20:47:32-176175 INFO Extensions enabled: ['a1111-sd-webui-lycoris', 'clip-interrogator-ext', 'LDSR', 'Lora',

'multidiffusion-upscaler-for-automatic1111', 'ScuNET', 'sd-dynamic-thresholding',

'sd-extension-system-info', 'sd-webui-agent-scheduler', 'sd-webui-controlnet',

'stable-diffusion-webui-images-browser', 'stable-diffusion-webui-rembg', 'SwinIR']

20:47:32-178176 INFO Verifying packages

20:47:32-186325 INFO Extension preload: 0.0s D:\sdnext\automatic\extensions-builtin

20:47:32-188648 INFO Extension preload: 0.0s D:\sdnext\automatic\extensions

20:47:32-221762 INFO Server arguments: []

20:47:40-417209 INFO Pipeline: Backend.ORIGINAL

No module 'xformers'. Proceeding without it.

20:47:43-468816 INFO Libraries loaded

20:47:43-469815 INFO Using data path: D:\sdnext\automatic

20:47:43-473321 INFO Available VAEs: D:\sdnext\automatic\models\VAE 0

20:47:43-488860 INFO Available models: D:\sdnext\automatic\models\Stable-diffusion 1

20:47:46-821663 INFO ControlNet v1.1.232

ControlNet v1.1.232

ControlNet preprocessor location: D:\sdnext\automatic\extensions-builtin\sd-webui-controlnet\annotator\downloads

20:47:47-027110 INFO ControlNet v1.1.232

ControlNet v1.1.232

Image Browser: ImageReward is not installed, cannot be used.

20:48:25-145779 INFO Loading UI theme: name=black-orange style=Auto

Running on local URL: http://127.0.0.1:7860

20:48:27-450550 INFO Local URL: http://127.0.0.1:7860/

20:48:27-451639 INFO Initializing middleware

20:48:28-016312 INFO [AgentScheduler] Task queue is empty

20:48:28-017325 INFO [AgentScheduler] Registering APIs

20:48:28-133032 WARNING Selected checkpoint not found: model.ckpt

Loading weights: D:\sdnext\automatic\models\Stable-diffusion\v1-5-pruned-emaonly.safetensors ━━━━━━━━━ 0.0/4.3 -:--:--

GB

20:48:29-090045 WARNING Torch FP16 test failed: Forcing FP32 operations: "LayerNormKernelImpl" not implemented for 'Half'

20:48:29-091161 INFO Torch override dtype: no-half set

20:48:29-092186 INFO Torch override VAE dtype: no-half set

20:48:29-093785 INFO Setting Torch parameters: dtype=torch.float32 vae=torch.float32 unet=torch.float32

LatentDiffusion: Running in eps-prediction mode

DiffusionWrapper has 859.52 M params.

20:48:30-662359 INFO Applying Doggettx cross attention optimization

20:48:30-666359 INFO Embeddings: loaded=0 skipped=0

20:48:30-679671 INFO Model loaded in 2.2s (load=0.2s config=0.4s create=0.5s apply=1.0s)

20:48:31-105108 INFO Model load finished: {'ram': {'used': 8.9, 'total': 31.3}} cached=0

20:48:31-879698 INFO Startup time: 59.7s (torch=6.1s gradio=1.5s libraries=3.7s codeformer=0.1s scripts=41.4s

onchange=0.2s ui-txt2img=0.1s ui-img2img=0.1s ui-settings=0.1s ui-extensions=1.6s

ui-defaults=0.1s launch=0.2s app-started=0.7s checkpoint=3.7s)

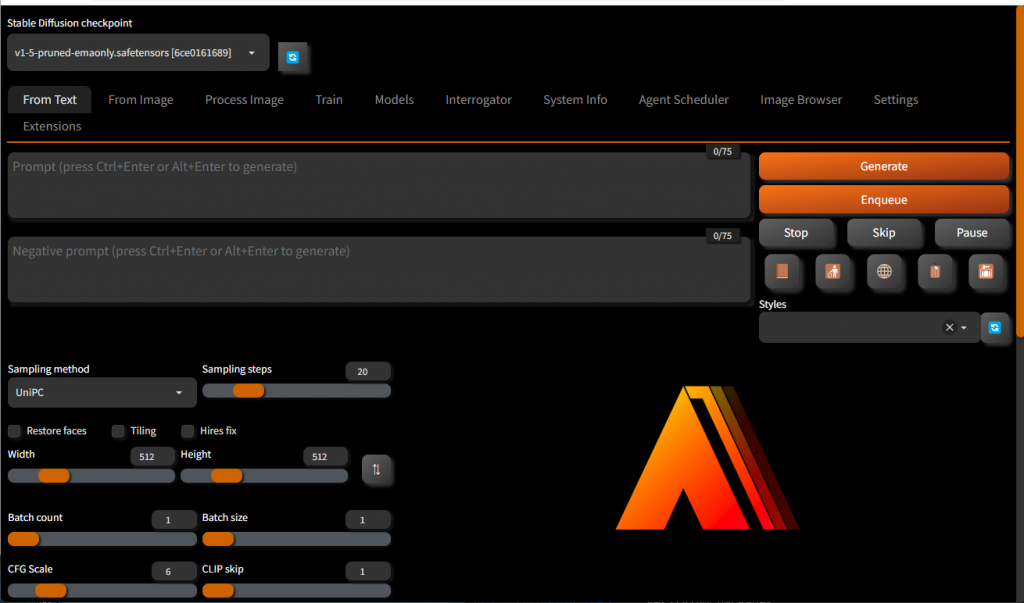

Looking at the startup messages, it says "Using CPU-only Torch".
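"Using CPU-only Torch" means the installer picked a CPU build of PyTorch (the log also shows `Torch 2.0.1+cpu`). A minimal sketch for checking, from inside a venv, which backend its PyTorch can actually use; run it with that venv's `python.exe`. It assumes that `torch_directml.device_count()` returns the number of DirectML adapters, which I believe matches the torch-directml package but have not checked against this exact version.

```python
import importlib.util


def detect_backend() -> str:
    """Report which compute backend this environment's PyTorch could use."""
    if importlib.util.find_spec("torch") is not None:
        import torch

        # A "+cpu" build such as "Torch 2.0.1+cpu" always reports False here.
        if torch.cuda.is_available():
            return "cuda"
    # torch-directml is what the DirectML web UI installs (torch_directml-0.2.0.dev230426).
    if importlib.util.find_spec("torch_directml") is not None:
        import torch_directml

        # Assumption: device_count() lists the DirectML adapters (e.g. the Ryzen iGPU).
        if torch_directml.device_count() > 0:
            return "directml"
    return "cpu"


if __name__ == "__main__":
    print("backend:", detect_backend())
```

In the SD.Next venv from this attempt it should report `cpu`, since only the CPU build of torch was installed there.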

3) DirectML version

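So I went the straightforward route: the original Stable Diffusion web UI, whose documentation describes how to run on AMD. I followed the steps under "Windows" in "Install and Run on AMD GPUs", which clone lshqqytiger's DirectML fork.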

PS D:\sdnext> git clone https://github.com/lshqqytiger/stable-diffusion-webui-directml

Cloning into 'stable-diffusion-webui-directml'...

remote: Enumerating objects: 23452, done.

remote: Counting objects: 100% (12/12), done.

remote: Compressing objects: 100% (11/11), done.

remote: Total 23452 (delta 3), reused 6 (delta 1), pack-reused 23440

Receiving objects: 100% (23452/23452), 31.11 MiB | 8.63 MiB/s, done.

Resolving deltas: 100% (16326/16326), done.

PS D:\sdnext> cd .\stable-diffusion-webui-directml\

PS D:\sdnext\stable-diffusion-webui-directml> git submodule init

PS D:\sdnext\stable-diffusion-webui-directml> git submodule update

PS D:\sdnext\stable-diffusion-webui-directml>

Then run it.

PS D:\sdnext\stable-diffusion-webui-directml> .\webui-user.bat

Creating venv in directory D:\sdnext\stable-diffusion-webui-directml\venv using python "C:\Users\OSAKANATARO\AppData\Local\Programs\Python\Python310\python.exe"

venv "D:\sdnext\stable-diffusion-webui-directml\venv\Scripts\Python.exe"

fatal: No names found, cannot describe anything.

Python 3.10.11 (tags/v3.10.11:7d4cc5a, Apr 5 2023, 00:38:17) [MSC v.1929 64 bit (AMD64)]

Version: ## 1.4.0

Commit hash: 265d626471eacd617321bdb51e50e4b87a7ca82e

Installing torch and torchvision

Collecting torch==2.0.0

Downloading torch-2.0.0-cp310-cp310-win_amd64.whl (172.3 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 172.3/172.3 MB 7.9 MB/s eta 0:00:00

Collecting torchvision==0.15.1

Downloading torchvision-0.15.1-cp310-cp310-win_amd64.whl (1.2 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.2/1.2 MB 10.8 MB/s eta 0:00:00

Collecting torch-directml

Downloading torch_directml-0.2.0.dev230426-cp310-cp310-win_amd64.whl (8.2 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 8.2/8.2 MB 8.6 MB/s eta 0:00:00

Collecting filelock

Using cached filelock-3.12.2-py3-none-any.whl (10 kB)

Collecting sympy

Using cached sympy-1.12-py3-none-any.whl (5.7 MB)

Collecting typing-extensions

Using cached typing_extensions-4.7.1-py3-none-any.whl (33 kB)

Collecting jinja2

Using cached Jinja2-3.1.2-py3-none-any.whl (133 kB)

Collecting networkx

Using cached networkx-3.1-py3-none-any.whl (2.1 MB)

Collecting numpy

Using cached numpy-1.25.1-cp310-cp310-win_amd64.whl (15.0 MB)

Collecting requests

Using cached requests-2.31.0-py3-none-any.whl (62 kB)

Collecting pillow!=8.3.*,>=5.3.0

Using cached Pillow-10.0.0-cp310-cp310-win_amd64.whl (2.5 MB)

Collecting MarkupSafe>=2.0

Using cached MarkupSafe-2.1.3-cp310-cp310-win_amd64.whl (17 kB)

Collecting certifi>=2017.4.17

Using cached certifi-2023.5.7-py3-none-any.whl (156 kB)

Collecting charset-normalizer<4,>=2

Using cached charset_normalizer-3.2.0-cp310-cp310-win_amd64.whl (96 kB)

Collecting idna<4,>=2.5

Using cached idna-3.4-py3-none-any.whl (61 kB)

Collecting urllib3<3,>=1.21.1

Using cached urllib3-2.0.3-py3-none-any.whl (123 kB)

Collecting mpmath>=0.19

Using cached mpmath-1.3.0-py3-none-any.whl (536 kB)

Installing collected packages: mpmath, urllib3, typing-extensions, sympy, pillow, numpy, networkx, MarkupSafe, idna, filelock, charset-normalizer, certifi, requests, jinja2, torch, torchvision, torch-directml

Successfully installed MarkupSafe-2.1.3 certifi-2023.5.7 charset-normalizer-3.2.0 filelock-3.12.2 idna-3.4 jinja2-3.1.2 mpmath-1.3.0 networkx-3.1 numpy-1.25.1 pillow-10.0.0 requests-2.31.0 sympy-1.12 torch-2.0.0 torch-directml-0.2.0.dev230426 torchvision-0.15.1 typing-extensions-4.7.1 urllib3-2.0.3

[notice] A new release of pip is available: 23.0.1 -> 23.1.2

[notice] To update, run: D:\sdnext\stable-diffusion-webui-directml\venv\Scripts\python.exe -m pip install --upgrade pip

Installing gfpgan

Installing clip

Installing open_clip

Cloning Stable Diffusion into D:\sdnext\stable-diffusion-webui-directml\repositories\stable-diffusion-stability-ai...

Cloning K-diffusion into D:\sdnext\stable-diffusion-webui-directml\repositories\k-diffusion...

Cloning CodeFormer into D:\sdnext\stable-diffusion-webui-directml\repositories\CodeFormer...

Cloning BLIP into D:\sdnext\stable-diffusion-webui-directml\repositories\BLIP...

Installing requirements for CodeFormer

Installing requirements

Launching Web UI with arguments:

No module 'xformers'. Proceeding without it.

Warning: caught exception 'Torch not compiled with CUDA enabled', memory monitor disabled

Downloading: "https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned-emaonly.safetensors" to D:\sdnext\stable-diffusion-webui-directml\models\Stable-diffusion\v1-5-pruned-emaonly.safetensors

100%|█████████████████████████████████████████████████████████████████████████████| 3.97G/3.97G [07:45<00:00, 9.15MB/s]

Calculating sha256 for D:\sdnext\stable-diffusion-webui-directml\models\Stable-diffusion\v1-5-pruned-emaonly.safetensors: preload_extensions_git_metadata for 7 extensions took 0.00s

Running on local URL: http://127.0.0.1:7860

To create a public link, set `share=True` in `launch()`.

Startup time: 479.1s (import torch: 3.3s, import gradio: 2.0s, import ldm: 0.8s, other imports: 4.2s, setup codeformer: 0.3s, list SD models: 466.4s, load scripts: 1.4s, create ui: 0.5s, gradio launch: 0.1s).

6ce0161689b3853acaa03779ec93eafe75a02f4ced659bee03f50797806fa2fa

Loading weights [6ce0161689] from D:\sdnext\stable-diffusion-webui-directml\models\Stable-diffusion\v1-5-pruned-emaonly.safetensors

Creating model from config: D:\sdnext\stable-diffusion-webui-directml\configs\v1-inference.yaml

LatentDiffusion: Running in eps-prediction mode

DiffusionWrapper has 859.52 M params.

Applying attention optimization: InvokeAI... done.

Textual inversion embeddings loaded(0):

Model loaded in 8.4s (calculate hash: 4.1s, load weights from disk: 0.2s, create model: 0.5s, apply weights to model: 0.8s, apply half(): 0.8s, move model to device: 1.4s, calculate empty prompt: 0.6s).

Launched with the default settings, it crashed partway through generating an image.

When I tried generating once more, the machine stopped with a blue screen.

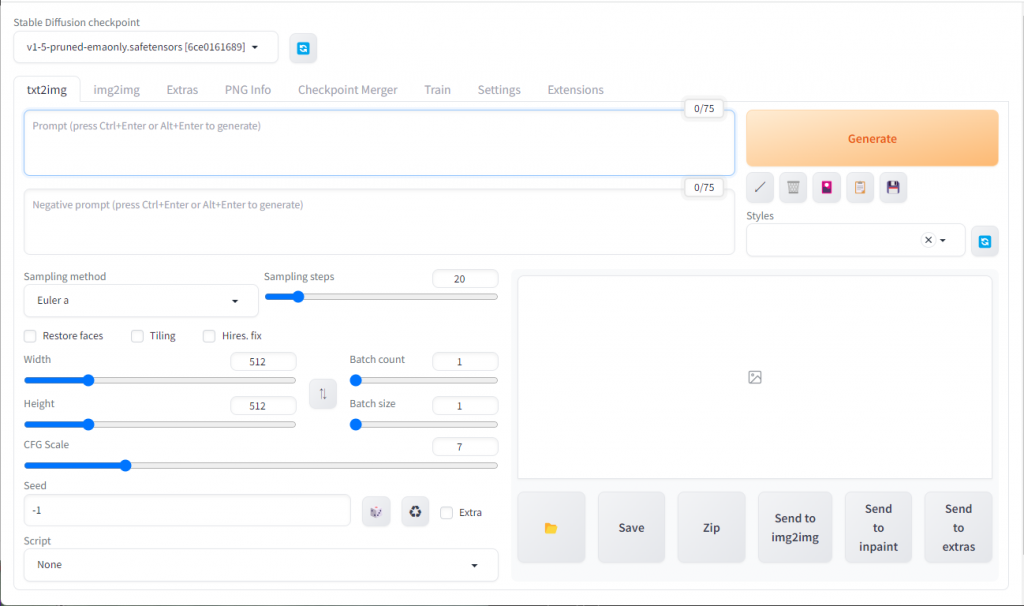

I copied webui-user.bat (as webui-user2.bat) and changed its COMMANDLINE_ARGS line to "set COMMANDLINE_ARGS=--opt-sub-quad-attention --lowvram --disable-nan-check". With that, generation succeeded.
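The copy-and-edit step can also be scripted. A minimal sketch, assuming webui-user.bat contains a line beginning `set COMMANDLINE_ARGS=` (the line edited above) and that the script runs in the web UI folder; the flags must be written with two ASCII hyphens, as the "Launching Web UI with arguments:" line confirms.

```python
import re
from pathlib import Path

ARGS = "--opt-sub-quad-attention --lowvram --disable-nan-check"
# Match the whole "set COMMANDLINE_ARGS=..." line; [^\r\n]* stops before any CRLF ending.
ARGS_LINE = re.compile(r"^set COMMANDLINE_ARGS=[^\r\n]*", re.IGNORECASE | re.MULTILINE)


def set_commandline_args(bat_text: str, args: str) -> str:
    """Return bat_text with its 'set COMMANDLINE_ARGS=' line replaced by the given args."""
    # A function replacement avoids re's backslash processing of the args string.
    new_text, count = ARGS_LINE.subn(lambda _m: f"set COMMANDLINE_ARGS={args}", bat_text)
    if count == 0:
        raise ValueError("no 'set COMMANDLINE_ARGS=' line found")
    return new_text


if __name__ == "__main__":
    src = Path("webui-user.bat")
    if src.exists():
        Path("webui-user2.bat").write_text(set_commandline_args(src.read_text(), ARGS))
```

Keeping the original webui-user.bat untouched makes it easy to go back to the default settings for comparison.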

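This time it really did compute on the GPU and generated images successfully, at roughly 7.5 s/it, or about 2.5 minutes for 20 steps.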

PS D:\sdnext\stable-diffusion-webui-directml> .\webui-user2.bat

venv "D:\sdnext\stable-diffusion-webui-directml\venv\Scripts\Python.exe"

fatal: No names found, cannot describe anything.

Python 3.10.11 (tags/v3.10.11:7d4cc5a, Apr 5 2023, 00:38:17) [MSC v.1929 64 bit (AMD64)]

Version: ## 1.4.0

Commit hash: 265d626471eacd617321bdb51e50e4b87a7ca82e

Installing requirements

Launching Web UI with arguments: --opt-sub-quad-attention --lowvram --disable-nan-check

No module 'xformers'. Proceeding without it.

Warning: caught exception 'Torch not compiled with CUDA enabled', memory monitor disabled

Loading weights [6ce0161689] from D:\sdnext\stable-diffusion-webui-directml\models\Stable-diffusion\v1-5-pruned-emaonly.safetensors

preload_extensions_git_metadata for 7 extensions took 0.00s

Creating model from config: D:\sdnext\stable-diffusion-webui-directml\configs\v1-inference.yaml

LatentDiffusion: Running in eps-prediction mode

Running on local URL: http://127.0.0.1:7860

To create a public link, set `share=True` in `launch()`.

Startup time: 11.1s (import torch: 2.8s, import gradio: 1.3s, import ldm: 0.6s, other imports: 3.9s, setup codeformer: 0.1s, load scripts: 1.3s, create ui: 0.7s, gradio launch: 0.4s).

DiffusionWrapper has 859.52 M params.

Applying attention optimization: sub-quadratic... done.

Textual inversion embeddings loaded(0):

Model loaded in 14.4s (load weights from disk: 0.9s, create model: 0.6s, apply weights to model: 11.6s, apply half(): 0.8s, calculate empty prompt: 0.5s).

100%|██████████████████████████████████████████████████████████████████████████████████| 20/20 [02:32<00:00, 7.64s/it]

Total progress: 100%|██████████████████████████████████████████████████████████████████| 20/20 [02:29<00:00, 7.48s/it]

100%|██████████████████████████████████████████████████████████████████████████████████| 20/20 [02:30<00:00, 7.54s/it]

Total progress: 100%|██████████████████████████████████████████████████████████████████| 20/20 [02:28<00:00, 7.44s/it]

Total progress: 100%|██████████████████████████████████████████████████████████████████| 20/20 [02:28<00:00, 7.63s/it]

4) Reviewing the output messages

"No module 'xformers'. Proceeding without it." is expected: xformers only works on NVIDIA GPUs, so this is normal behavior.
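The first SD.Next launch also printed "ImportError: Numba needs NumPy 1.24 or less" while loading the rembg extension. The traceback shows the check numba runs on import (`numpy_version > (1, 24)`). A minimal sketch that mirrors only that upper-bound check, for testing the NumPy installed in a venv; numba performs other checks this does not replicate.

```python
from importlib.metadata import PackageNotFoundError, version


def numpy_major_minor(ver: str) -> tuple:
    # Only (major, minor) matters for the check, e.g. "1.25.1" -> (1, 25).
    major, minor = ver.split(".")[:2]
    return int(major), int(minor)


def numba_would_accept(ver: str) -> bool:
    # Mirrors the upper bound in the traceback: ImportError when (major, minor) > (1, 24).
    return numpy_major_minor(ver) <= (1, 24)


if __name__ == "__main__":
    try:
        installed = version("numpy")
    except PackageNotFoundError:
        print("numpy is not installed in this environment")
    else:
        verdict = "OK for numba" if numba_would_accept(installed) else "too new for numba"
        print(f"numpy {installed}: {verdict}")
```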

5) Getting trained models

Reference: Stable Diffusion v2 models_H2-2023

I put the files with the .safetensors extension in models\Stable-diffusion.
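A broken or incomplete download placed there only shows up as an error when the web UI reads it. A .safetensors file starts with an 8-byte little-endian unsigned integer giving the length of a JSON header, followed by that header. The bracketed hash the web UI prints (e.g. `[6ce0161689]`) is the first 10 hex digits of the file's SHA-256, as the full hash logged next to it shows. Notably, `e3b0c44298` (seen in the Ryzen 7 5800H log below) is the SHA-256 prefix of zero bytes of input, which suggests that file was empty. A minimal sketch of a pre-check; the function names are my own, and it is not the web UI's or the safetensors library's code.

```python
import hashlib
import json
import struct
import sys
from pathlib import Path
from typing import Optional


def header_problem(head: bytes, file_size: int) -> Optional[str]:
    """Return None if the header looks valid, else a short description of the problem.

    `head` must hold the 8-byte length prefix plus the header bytes that follow it.
    """
    if file_size < 8 or len(head) < 8:
        return f"only {file_size} bytes: too small for the 8-byte length prefix"
    (header_len,) = struct.unpack("<Q", head[:8])  # little-endian unsigned 64-bit
    if header_len > file_size - 8:
        return f"header claims {header_len} bytes but only {file_size - 8} follow"
    try:
        header = json.loads(head[8 : 8 + header_len])
    except ValueError:  # covers JSONDecodeError and UnicodeDecodeError
        return "header is not valid JSON"
    if not isinstance(header, dict):
        return "header is not a JSON object"
    return None


def short_sha256(path: Path) -> str:
    """First 10 hex digits of the file's SHA-256, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()[:10]


def check_file(path: Path) -> str:
    size = path.stat().st_size
    with path.open("rb") as f:
        head = f.read(8)
        if len(head) == 8:
            (header_len,) = struct.unpack("<Q", head)
            if header_len <= size - 8:
                head += f.read(header_len)
    problem = header_problem(head, size)
    return f"{path.name} [{short_sha256(path)}]: {problem or 'ok'}"


if __name__ == "__main__":
    for arg in sys.argv[1:]:
        print(check_file(Path(arg)))
```

Usage: pass one or more model paths on the command line; hashing a 4 GB checkpoint takes a few seconds.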

6) Adding ControlNet

ControlNet for Stable Diffusion WebUI packages ControlNet in a form that can be added to the web UI.

(SD.Next has it built in, but for the original web UI you have to add it.)

In the web GUI, open "Extensions" → "Install from URL", enter "https://github.com/Mikubill/sd-webui-controlnet.git", and follow the steps.

The instructions said to download the models from https://huggingface.co/lllyasviel/ControlNet-v1-1/tree/main, but they seemed to be in place already, so maybe that step is unnecessary?

I've set it up for now, but haven't used it yet.
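To answer the "were the models already there?" question, one option is to list what sits in the extension's model folder. A minimal sketch, assuming that folder is `extensions\sd-webui-controlnet\models` under the web UI root (my assumption about the extension's default, not something stated above) and using this install's path as a hypothetical `WEBUI_ROOT`.

```python
from pathlib import Path

# Hypothetical paths: adjust WEBUI_ROOT to your install; the models subfolder is an assumption.
WEBUI_ROOT = Path(r"D:\sdnext\stable-diffusion-webui-directml")
CONTROLNET_MODELS = WEBUI_ROOT / "extensions" / "sd-webui-controlnet" / "models"
MODEL_SUFFIXES = {".pth", ".safetensors", ".ckpt"}


def list_models(models_dir: Path) -> list:
    """Return sorted file names of model weights in models_dir (empty if it doesn't exist)."""
    if not models_dir.is_dir():
        return []
    return sorted(p.name for p in models_dir.iterdir() if p.suffix.lower() in MODEL_SUFFIXES)


if __name__ == "__main__":
    found = list_models(CONTROLNET_MODELS)
    print(f"{len(found)} model file(s) in {CONTROLNET_MODELS}")
    for name in found:
        print(" ", name)
```

An empty result would mean the weights from the Hugging Face page still need to be downloaded into that folder.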

Addendum: Ryzen 7 5800H

I set things up the same way on a Ryzen 7 5800H machine, but there I added ControlNet before generating anything, and it threw an error.

PS C:\stablediff\stable-diffusion-webui-directml> .\webui-user-amd.bat

venv "C:\stablediff\stable-diffusion-webui-directml\venv\Scripts\Python.exe"

fatal: No names found, cannot describe anything.

Python 3.10.11 (tags/v3.10.11:7d4cc5a, Apr 5 2023, 00:38:17) [MSC v.1929 64 bit (AMD64)]

Version: ## 1.4.0

Commit hash: 265d626471eacd617321bdb51e50e4b87a7ca82e

Installing requirements

Launching Web UI with arguments: --opt-sub-quad-attention --lowvram --disable-nan-check --autolaunch

No module 'xformers'. Proceeding without it.

Warning: caught exception 'Torch not compiled with CUDA enabled', memory monitor disabled

reading checkpoint metadata: C:\stablediff\stable-diffusion-webui-directml\models\Stable-diffusion\unlimitedReplicant_v10.safetensors: AssertionError

Traceback (most recent call last):

File "C:\stablediff\stable-diffusion-webui-directml\modules\sd_models.py", line 62, in __init__

self.metadata = read_metadata_from_safetensors(filename)

File "C:\stablediff\stable-diffusion-webui-directml\modules\sd_models.py", line 236, in read_metadata_from_safetensors

assert metadata_len > 2 and json_start in (b'{"', b"{'"), f"{filename} is not a safetensors file"

AssertionError: C:\stablediff\stable-diffusion-webui-directml\models\Stable-diffusion\unlimitedReplicant_v10.safetensors is not a safetensors file

2023-07-12 13:46:53,471 - ControlNet - INFO - ControlNet v1.1.232

ControlNet preprocessor location: C:\stablediff\stable-diffusion-webui-directml\extensions\sd-webui-controlnet\annotator\downloads

2023-07-12 13:46:53,548 - ControlNet - INFO - ControlNet v1.1.232

Loading weights [c348e5681e] from C:\stablediff\stable-diffusion-webui-directml\models\Stable-diffusion\muaccamix_v15.safetensors

preload_extensions_git_metadata for 8 extensions took 0.13s

Running on local URL: http://127.0.0.1:7860

To create a public link, set `share=True` in `launch()`.

Startup time: 7.2s (import torch: 2.2s, import gradio: 1.0s, import ldm: 0.5s, other imports: 1.2s, load scripts: 1.3s, create ui: 0.4s, gradio launch: 0.5s).

Creating model from config: C:\stablediff\stable-diffusion-webui-directml\repositories\stable-diffusion-stability-ai\configs\stable-diffusion\v2-inference-v.yaml

LatentDiffusion: Running in v-prediction mode

DiffusionWrapper has 865.91 M params.

Applying attention optimization: sub-quadratic... done.

Textual inversion embeddings loaded(0):

Model loaded in 7.1s (load weights from disk: 0.7s, find config: 2.4s, create model: 0.2s, apply weights to model: 1.9s, apply half(): 1.0s, move model to device: 0.3s, calculate empty prompt: 0.4s).

Loading weights [e3b0c44298] from C:\stablediff\stable-diffusion-webui-directml\models\Stable-diffusion\unlimitedReplicant_v10.safetensors

changing setting sd_model_checkpoint to unlimitedReplicant_v10.safetensors [e3b0c44298]: SafetensorError

Traceback (most recent call last):

File "C:\stablediff\stable-diffusion-webui-directml\modules\shared.py", line 610, in set

self.data_labels[key].onchange()

File "C:\stablediff\stable-diffusion-webui-directml\modules\call_queue.py", line 13, in f

res = func(*args, **kwargs)

File "C:\stablediff\stable-diffusion-webui-directml\webui.py", line 226, in <lambda>

shared.opts.onchange("sd_model_checkpoint", wrap_queued_call(lambda: modules.sd_models.reload_model_weights()), call=False)

File "C:\stablediff\stable-diffusion-webui-directml\modules\sd_models.py", line 568, in reload_model_weights

state_dict = get_checkpoint_state_dict(checkpoint_info, timer)

File "C:\stablediff\stable-diffusion-webui-directml\modules\sd_models.py", line 277, in get_checkpoint_state_dict

res = read_state_dict(checkpoint_info.filename)

File "C:\stablediff\stable-diffusion-webui-directml\modules\sd_models.py", line 256, in read_state_dict

pl_sd = safetensors.torch.load_file(checkpoint_file, device=device)

File "C:\stablediff\stable-diffusion-webui-directml\venv\lib\site-packages\safetensors\torch.py", line 259, in load_file

with safe_open(filename, framework="pt", device=device) as f:

safetensors_rust.SafetensorError: Error while deserializing header: HeaderTooSmall

*** Error completing request

*** Arguments: ('task(d0d406cu3531u31)', 'miku\n', '', [], 20, 0, False, False, 1, 1, 7, -1.0, -1.0, 0, 0, 0, False, 512, 512, False, 0.7, 2, 'Latent', 0, 0, 0, 0, '', '', [], 0, <scripts.controlnet_ui.controlnet_ui_group.UiControlNetUnit object at 0x000001FAA7416110>, False, False, 'positive', 'comma', 0, False, False, '', 1, '', [], 0, '', [], 0, '', [], True, False, False, False, 0, None, None, False, 50) {}

Traceback (most recent call last):

File "C:\stablediff\stable-diffusion-webui-directml\modules\call_queue.py", line 55, in f

res = list(func(*args, **kwargs))

File "C:\stablediff\stable-diffusion-webui-directml\modules\call_queue.py", line 35, in f

res = func(*args, **kwargs)

File "C:\stablediff\stable-diffusion-webui-directml\modules\txt2img.py", line 94, in txt2img

processed = processing.process_images(p)

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 623, in process_images

res = process_images_inner(p)

File "C:\stablediff\stable-diffusion-webui-directml\extensions\sd-webui-controlnet\scripts\batch_hijack.py", line 42, in processing_process_images_hijack

return getattr(processing, '__controlnet_original_process_images_inner')(p, *args, **kwargs)

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 732, in process_images_inner

p.setup_conds()

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 1129, in setup_conds

super().setup_conds()

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 346, in setup_conds

self.uc = self.get_conds_with_caching(prompt_parser.get_learned_conditioning, self.negative_prompts, self.steps * self.step_multiplier, [self.cached_uc], self.extra_network_data)

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 338, in get_conds_with_caching

cache[1] = function(shared.sd_model, required_prompts, steps)

File "C:\stablediff\stable-diffusion-webui-directml\modules\prompt_parser.py", line 143, in get_learned_conditioning

conds = model.get_learned_conditioning(texts)

File "C:\stablediff\stable-diffusion-webui-directml\repositories\stable-diffusion-stability-ai\ldm\models\diffusion\ddpm.py", line 665, in get_learned_conditioning

c = self.cond_stage_model.encode(c)

File "C:\stablediff\stable-diffusion-webui-directml\repositories\stable-diffusion-stability-ai\ldm\modules\encoders\modules.py", line 236, in encode

return self(text)

File "C:\stablediff\stable-diffusion-webui-directml\venv\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\stablediff\stable-diffusion-webui-directml\repositories\stable-diffusion-stability-ai\ldm\modules\encoders\modules.py", line 213, in forward

z = self.encode_with_transformer(tokens.to(self.device))

File "C:\stablediff\stable-diffusion-webui-directml\venv\lib\site-packages\torch\cuda\__init__.py", line 239, in _lazy_init

raise AssertionError("Torch not compiled with CUDA enabled")

AssertionError: Torch not compiled with CUDA enabled

---

*** Error completing request

*** Arguments: ('task(rw9uda96ly6wovo)', 'miku\n', '', [], 20, 0, False, False, 1, 1, 7, -1.0, -1.0, 0, 0, 0, False, 512, 512, False, 0.7, 2, 'Latent', 0, 0, 0, 0, '', '', [], 0, <scripts.controlnet_ui.controlnet_ui_group.UiControlNetUnit object at 0x000001FA000A6620>, False, False, 'positive', 'comma', 0, False, False, '', 1, '', [], 0, '', [], 0, '', [], True, False, False, False, 0, None, None, False, 50) {}

Traceback (most recent call last):

File "C:\stablediff\stable-diffusion-webui-directml\modules\call_queue.py", line 55, in f

res = list(func(*args, **kwargs))

File "C:\stablediff\stable-diffusion-webui-directml\modules\call_queue.py", line 35, in f

res = func(*args, **kwargs)

File "C:\stablediff\stable-diffusion-webui-directml\modules\txt2img.py", line 94, in txt2img

processed = processing.process_images(p)

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 623, in process_images

res = process_images_inner(p)

File "C:\stablediff\stable-diffusion-webui-directml\extensions\sd-webui-controlnet\scripts\batch_hijack.py", line 42, in processing_process_images_hijack

return getattr(processing, '__controlnet_original_process_images_inner')(p, *args, **kwargs)

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 732, in process_images_inner

p.setup_conds()

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 1129, in setup_conds

super().setup_conds()

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 346, in setup_conds

self.uc = self.get_conds_with_caching(prompt_parser.get_learned_conditioning, self.negative_prompts, self.steps * self.step_multiplier, [self.cached_uc], self.extra_network_data)

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 338, in get_conds_with_caching

cache[1] = function(shared.sd_model, required_prompts, steps)

File "C:\stablediff\stable-diffusion-webui-directml\modules\prompt_parser.py", line 143, in get_learned_conditioning

conds = model.get_learned_conditioning(texts)

File "C:\stablediff\stable-diffusion-webui-directml\repositories\stable-diffusion-stability-ai\ldm\models\diffusion\ddpm.py", line 665, in get_learned_conditioning

c = self.cond_stage_model.encode(c)

File "C:\stablediff\stable-diffusion-webui-directml\repositories\stable-diffusion-stability-ai\ldm\modules\encoders\modules.py", line 236, in encode

return self(text)

File "C:\stablediff\stable-diffusion-webui-directml\venv\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\stablediff\stable-diffusion-webui-directml\repositories\stable-diffusion-stability-ai\ldm\modules\encoders\modules.py", line 213, in forward

z = self.encode_with_transformer(tokens.to(self.device))

File "C:\stablediff\stable-diffusion-webui-directml\venv\lib\site-packages\torch\cuda\__init__.py", line 239, in _lazy_init

raise AssertionError("Torch not compiled with CUDA enabled")

AssertionError: Torch not compiled with CUDA enabled

---

*** Error completing request

*** Arguments: ('task(qgndomumiw4zfai)', 'miku\n', '', [], 20, 0, False, False, 1, 1, 7, -1.0, -1.0, 0, 0, 0, False, 512, 512, False, 0.7, 2, 'Latent', 0, 0, 0, 0, '', '', [], 0, <scripts.controlnet_ui.controlnet_ui_group.UiControlNetUnit object at 0x000001FAA6F229E0>, False, False, 'positive', 'comma', 0, False, False, '', 1, '', [], 0, '', [], 0, '', [], True, False, False, False, 0, None, None, False, 50) {}

Traceback (most recent call last):

File "C:\stablediff\stable-diffusion-webui-directml\modules\call_queue.py", line 55, in f

res = list(func(*args, **kwargs))

File "C:\stablediff\stable-diffusion-webui-directml\modules\call_queue.py", line 35, in f

res = func(*args, **kwargs)

File "C:\stablediff\stable-diffusion-webui-directml\modules\txt2img.py", line 94, in txt2img

processed = processing.process_images(p)

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 623, in process_images

res = process_images_inner(p)

File "C:\stablediff\stable-diffusion-webui-directml\extensions\sd-webui-controlnet\scripts\batch_hijack.py", line 42, in processing_process_images_hijack

return getattr(processing, '__controlnet_original_process_images_inner')(p, *args, **kwargs)

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 732, in process_images_inner

p.setup_conds()

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 1129, in setup_conds

super().setup_conds()

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 346, in setup_conds

self.uc = self.get_conds_with_caching(prompt_parser.get_learned_conditioning, self.negative_prompts, self.steps * self.step_multiplier, [self.cached_uc], self.extra_network_data)

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 338, in get_conds_with_caching

cache[1] = function(shared.sd_model, required_prompts, steps)

File "C:\stablediff\stable-diffusion-webui-directml\modules\prompt_parser.py", line 143, in get_learned_conditioning

conds = model.get_learned_conditioning(texts)

File "C:\stablediff\stable-diffusion-webui-directml\repositories\stable-diffusion-stability-ai\ldm\models\diffusion\ddpm.py", line 665, in get_learned_conditioning

c = self.cond_stage_model.encode(c)

File "C:\stablediff\stable-diffusion-webui-directml\repositories\stable-diffusion-stability-ai\ldm\modules\encoders\modules.py", line 236, in encode

return self(text)

File "C:\stablediff\stable-diffusion-webui-directml\venv\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\stablediff\stable-diffusion-webui-directml\repositories\stable-diffusion-stability-ai\ldm\modules\encoders\modules.py", line 213, in forward

z = self.encode_with_transformer(tokens.to(self.device))

File "C:\stablediff\stable-diffusion-webui-directml\venv\lib\site-packages\torch\cuda\__init__.py", line 239, in _lazy_init

raise AssertionError("Torch not compiled with CUDA enabled")

AssertionError: Torch not compiled with CUDA enabled

---

Restarting UI...

Closing server running on port: 7860

2023-07-12 13:54:32,359 - ControlNet - INFO - ControlNet v1.1.232

Running on local URL: http://127.0.0.1:7860

To create a public link, set `share=True` in `launch()`.

Startup time: 0.6s (load scripts: 0.3s, create ui: 0.2s).

preload_extensions_git_metadata for 8 extensions took 0.15s

*** Error completing request

*** Arguments: ('task(jwkb7fcvkg7wpb4)', 'miku', '', [], 20, 0, False, False, 1, 1, 7, -1.0, -1.0, 0, 0, 0, False, 512, 512, False, 0.7, 2, 'Latent', 0, 0, 0, 0, '', '', [], 0, <scripts.controlnet_ui.controlnet_ui_group.UiControlNetUnit object at 0x000001FB1BC5F010>, False, False, 'positive', 'comma', 0, False, False, '', 1, '', [], 0, '', [], 0, '', [], True, False, False, False, 0, None, None, False, 50) {}

Traceback (most recent call last):

File "C:\stablediff\stable-diffusion-webui-directml\modules\call_queue.py", line 55, in f

res = list(func(*args, **kwargs))

File "C:\stablediff\stable-diffusion-webui-directml\modules\call_queue.py", line 35, in f

res = func(*args, **kwargs)

File "C:\stablediff\stable-diffusion-webui-directml\modules\txt2img.py", line 94, in txt2img

processed = processing.process_images(p)

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 623, in process_images

res = process_images_inner(p)

File "C:\stablediff\stable-diffusion-webui-directml\extensions\sd-webui-controlnet\scripts\batch_hijack.py", line 42, in processing_process_images_hijack

return getattr(processing, '__controlnet_original_process_images_inner')(p, *args, **kwargs)

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 732, in process_images_inner

p.setup_conds()

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 1129, in setup_conds

super().setup_conds()

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 346, in setup_conds

self.uc = self.get_conds_with_caching(prompt_parser.get_learned_conditioning, self.negative_prompts, self.steps * self.step_multiplier, [self.cached_uc], self.extra_network_data)

File "C:\stablediff\stable-diffusion-webui-directml\modules\processing.py", line 338, in get_conds_with_caching

cache[1] = function(shared.sd_model, required_prompts, steps)

File "C:\stablediff\stable-diffusion-webui-directml\modules\prompt_parser.py", line 143, in get_learned_conditioning

conds = model.get_learned_conditioning(texts)

File "C:\stablediff\stable-diffusion-webui-directml\repositories\stable-diffusion-stability-ai\ldm\models\diffusion\ddpm.py", line 665, in get_learned_conditioning

c = self.cond_stage_model.encode(c)

File "C:\stablediff\stable-diffusion-webui-directml\repositories\stable-diffusion-stability-ai\ldm\modules\encoders\modules.py", line 236, in encode

return self(text)

File "C:\stablediff\stable-diffusion-webui-directml\venv\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\stablediff\stable-diffusion-webui-directml\repositories\stable-diffusion-stability-ai\ldm\modules\encoders\modules.py", line 213, in forward

z = self.encode_with_transformer(tokens.to(self.device))

File "C:\stablediff\stable-diffusion-webui-directml\venv\lib\site-packages\torch\cuda\__init__.py", line 239, in _lazy_init

raise AssertionError("Torch not compiled with CUDA enabled")

AssertionError: Torch not compiled with CUDA enabled

---

fatal: No names found, cannot describe anything.

Python 3.10.11 (tags/v3.10.11:7d4cc5a, Apr 5 2023, 00:38:17) [MSC v.1929 64 bit (AMD64)]

Version: ## 1.4.0

Commit hash: 265d626471eacd617321bdb51e50e4b87a7ca82e

Installing requirements

Launching Web UI with arguments: --opt-sub-quad-attention --lowvram --disable-nan-check --autolaunch

No module 'xformers'. Proceeding without it.

Warning: caught exception 'Torch not compiled with CUDA enabled', memory monitor disabled

Loading weights [c348e5681e] from C:\stablediff\stable-diffusion-webui-directml\models\Stable-diffusion\muaccamix_v15.safetensors

preload_extensions_git_metadata for 8 extensions took 0.13s

Running on local URL: http://127.0.0.1:7860

To create a public link, set `share=True` in `launch()`.

Startup time: 6.6s (import torch: 2.2s, import gradio: 1.0s, import ldm: 0.5s, other imports: 1.2s, load scripts: 1.0s, create ui: 0.5s, gradio launch: 0.2s).

Creating model from config: C:\stablediff\stable-diffusion-webui-directml\repositories\stable-diffusion-stability-ai\configs\stable-diffusion\v2-inference-v.yaml

LatentDiffusion: Running in v-prediction mode

DiffusionWrapper has 865.91 M params.

Applying attention optimization: sub-quadratic... done.

Textual inversion embeddings loaded(0):

Model loaded in 6.3s (load weights from disk: 0.7s, find config: 1.7s, create model: 0.6s, apply weights to model: 1.6s, apply half(): 1.0s, move model to device: 0.3s, calculate empty prompt: 0.4s).

100%|██████████████████████████████████████████████████████████████████████████████████| 20/20 [01:44<00:00, 5.23s/it]

Total progress: 100%|██████████████████████████████████████████████████████████████████| 20/20 [01:41<00:00, 5.06s/it]

Total progress: 100%|██████████████████████████████████████████████████████████████████| 20/20 [01:41<00:00, 5.08s/it]

I suspected the cause might be that I had enabled ControlNet right from the start, so I disabled it once, and generation then worked normally.

After confirming one successful run, I enabled ControlNet again, and this time it worked without any problems... why?
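The log above also contains `SafetensorError: Error while deserializing header: HeaderTooSmall` for unlimitedReplicant_v10.safetensors, which means the loader could not read even the fixed-size start of the file. The short hash printed for that file, `[e3b0c44298]`, is the same as the first 10 hex digits of the SHA-256 of zero bytes of data. Assuming the web UI's short hash is the leading part of the file's SHA-256, this suggests the file was empty at that moment (for example, a download that had not finished yet). The sketch below is a hypothetical, stdlib-only helper (`inspect_checkpoint` is not part of the web UI) that checks the layout described by the safetensors format, an 8-byte little-endian header length followed by a JSON header, and reports the same kind of short hash.

```python
import hashlib
import json
import os
import struct


def inspect_checkpoint(path: str) -> dict:
    """Rough sanity check of a .safetensors file (hypothetical helper, stdlib only).

    Assumes the safetensors layout: an 8-byte little-endian unsigned integer N,
    then an N-byte JSON header, then the raw tensor data.
    """
    size = os.path.getsize(path)

    # SHA-256 of the whole file; the first 10 hex digits serve as a "short hash".
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    info = {"size": size, "short_hash": digest.hexdigest()[:10], "ok": False, "problem": None}

    with open(path, "rb") as f:
        prefix = f.read(8)
        if len(prefix) < 8:
            # Too small to even hold the header length (e.g. an empty or partial download).
            info["problem"] = "file is shorter than the 8-byte header length"
            return info
        (header_len,) = struct.unpack("<Q", prefix)
        if header_len > size - 8:
            info["problem"] = f"header length {header_len} exceeds remaining {size - 8} bytes"
            return info
        try:
            json.loads(f.read(header_len))
        except ValueError as exc:  # JSONDecodeError and UnicodeDecodeError both subclass ValueError
            info["problem"] = f"header is not valid JSON: {exc}"
            return info

    info["ok"] = True
    return info
```

For example, calling `inspect_checkpoint(r"C:\stablediff\stable-diffusion-webui-directml\models\Stable-diffusion\unlimitedReplicant_v10.safetensors")` on an empty file would return `size` 0, `short_hash` `e3b0c44298` and a non-empty `problem`. This only checks the header; it does not verify that the tensor data itself is intact.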

Generation time comparison

To compare the Ryzen 5 5600G with the Ryzen 7 5800H, I generated an image with the Unlimited Replicant model, using only "miku" as the prompt. The results:

Generation time on the Ryzen 7 5800H

Total progress: 100%|██████████████████████████████████████████████████████████████████| 20/20 [01:39<00:00, 5.03s/it]

Generation time on the Ryzen 5 5600G

Total progress: 100%|██████████████████████████████████████████████████████████████████| 20/20 [02:22<00:00, 7.23s/it]
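The two "Total progress" lines above can be turned into a speed ratio. The sketch below is a hypothetical stdlib-only helper (`parse_tqdm` and its regex are illustrative, not part of the web UI) that reads the step count, the elapsed time and the s/it value from such a line; it assumes the elapsed time is in mm:ss form, as in these logs. The s/it value printed by tqdm does not have to equal elapsed time divided by steps exactly (20 × 7.23 s is about 145 s, while the 5600G line shows 02:22, i.e. 142 s), so both ratios are computed.

```python
import re

# Matches the tail of a tqdm line such as "20/20 [01:39<00:00, 5.03s/it]".
# Assumption: elapsed time is mm:ss (tqdm switches to h:mm:ss past one hour).
TQDM_TAIL = re.compile(r"(\d+)/(\d+) \[(\d+):(\d+)<[^,]*, ([\d.]+)s/it\]")


def parse_tqdm(line: str) -> tuple[int, int, float]:
    """Return (total_steps, elapsed_seconds, seconds_per_iteration) from a tqdm line."""
    m = TQDM_TAIL.search(line)
    if m is None:
        raise ValueError(f"not a tqdm s/it line: {line!r}")
    total_steps = int(m.group(2))
    elapsed = int(m.group(3)) * 60 + int(m.group(4))
    return total_steps, elapsed, float(m.group(5))


# The two "Total progress" lines from the comparison (progress bar shortened).
ryzen7_5800h = "Total progress: 100%|████| 20/20 [01:39<00:00, 5.03s/it]"
ryzen5_5600g = "Total progress: 100%|████| 20/20 [02:22<00:00, 7.23s/it]"

_, t_5800h, spi_5800h = parse_tqdm(ryzen7_5800h)
_, t_5600g, spi_5600g = parse_tqdm(ryzen5_5600g)

# How many times longer the 5600G took than the 5800H.
print(f"elapsed: {t_5800h}s vs {t_5600g}s -> {t_5600g / t_5800h:.2f}x")
print(f"s/it:    {spi_5800h} vs {spi_5600g} -> {spi_5600g / spi_5800h:.2f}x")
```

With these two lines it prints ratios of 1.43x (elapsed time) and 1.44x (s/it), i.e. in this single run the 5600G took a little over 40% longer than the 5800H.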