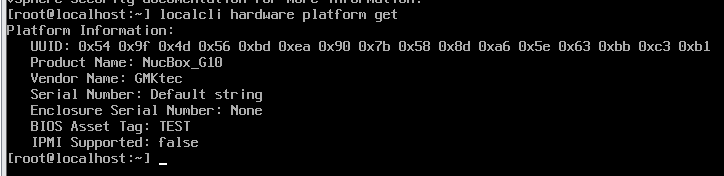

HPE VME ver 8.0.13環境を作ってみたところ、以前と比べてGFS2データストア作成プロセスが改善されていたのでメモ

(1) HPE VME側でiSCSIターゲットIP登録

[インフラストラクチャ]-[クラスター]で該当クラスタを選択し、[ストレージ]-[iSCSI]にてiSCSIストレージのターゲットIPアドレスを登録

これを行うと、しばらくすると各サーバの /etc/iscsi/initiatorname.iscsi の InitiatorName に設定されている名前で、iSCSIストレージに対してアクセスが実施される

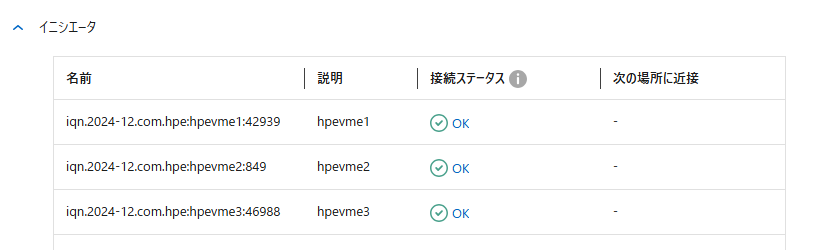

(2) iSCSIストレージ側でInitiatorNameを登録

iSCSIストレージ側で、各サーバの /etc/iscsi/initiatorname.iscsi の InitiatorName の名前を登録し、アクセスを許可する

NetAppの例

しばらく待っても接続ステータスが変更されない場合は、該当のVMEサーバにログインして「sudo iscsiadm -m session –rescan」を実行してスキャンを行う

pcuser@hpevme1:~$ sudo iscsiadm -m session --rescan

Rescanning session [sid: 1, target: iqn.1992-08.com.netapp:sn.588844e7ec3411f0a4bd000c292a75e7:vs.8, portal: 192.168.3.35,3260]

Rescanning session [sid: 2, target: iqn.1992-08.com.netapp:sn.588844e7ec3411f0a4bd000c292a75e7:vs.8, portal: 192.168.2.35,3260]

pcuser@hpevme1:~$

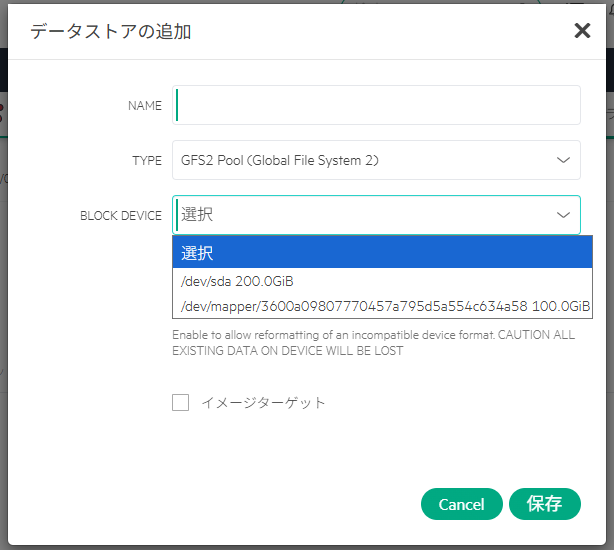

(3) HPE VME側でディスクが認識されているか確認

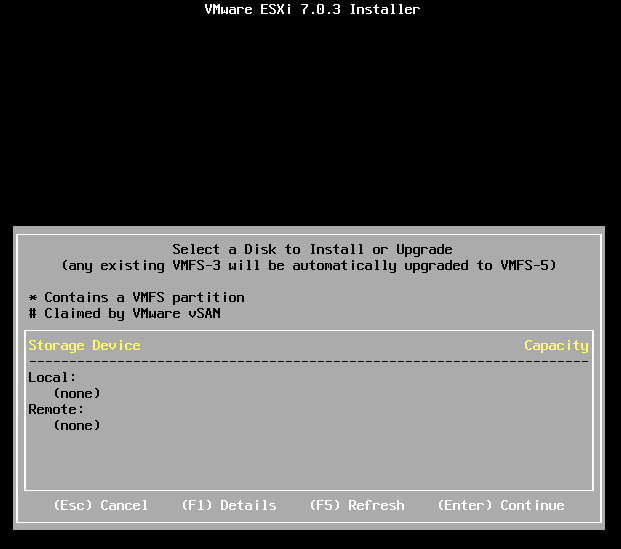

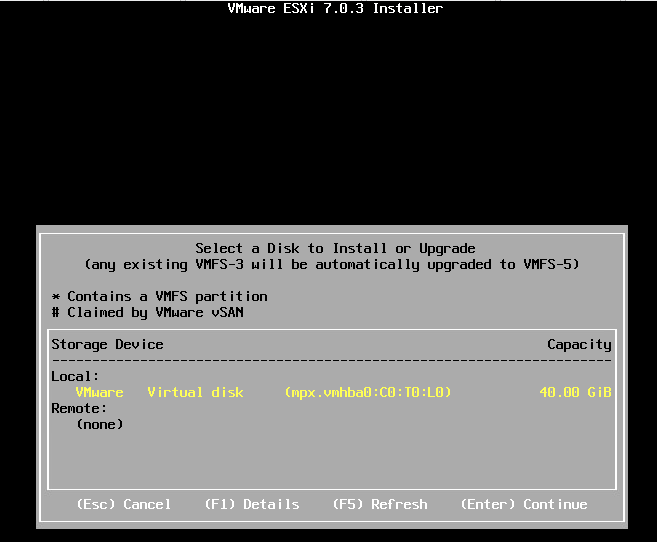

[インフラストラクチャ]-[クラスター]で該当クラスタを選択し、[ストレージ]-[データストア]にて「追加」をクリックして表示される「データストアの追加」にて

「Type:GFS2 Pool (Global File System 2)」を選択し、「BLOCK DEVICE」の選択を確認する

上記の様に「/dev/mapper/~」というディスクが認識されていれば、マルチパスで認識されているiSCSIディスクとなる。

mapperというデバイスが認識されていない場合、iSCSIマルチパスが動作しているかを確認する

まずはディスクデバイスが認識されているかを「sudo iscsiadm -m session -P 3」を実行して、「Attached SCSI devices:」の後に「scsi ??? Channel 00 ID 0 Lun :1」といった形でディスクが認識されていることを確認

pcuser@hpevme1:~$ sudo iscsiadm -m session -P 3

iSCSI Transport Class version 2.0-870

version 2.1.9

Target: iqn.1992-08.com.netapp:sn.588844e7ec3411f0a4bd000c292a75e7:vs.8 (non-flash)

Current Portal: 192.168.3.35:3260,1028

Persistent Portal: 192.168.3.35:3260,1028

**********

Interface:

**********

Iface Name: default

Iface Transport: tcp

Iface Initiatorname: iqn.2024-12.com.hpe:hpevme1:42939

Iface IPaddress: 192.168.3.51

Iface HWaddress: default

Iface Netdev: default

SID: 1

iSCSI Connection State: LOGGED IN

iSCSI Session State: LOGGED_IN

Internal iscsid Session State: NO CHANGE

*********

Timeouts:

*********

Recovery Timeout: 5

Target Reset Timeout: 30

LUN Reset Timeout: 30

Abort Timeout: 15

*****

CHAP:

*****

username: <empty>

password: ********

username_in: <empty>

password_in: ********

************************

Negotiated iSCSI params:

************************

HeaderDigest: None

DataDigest: None

MaxRecvDataSegmentLength: 262144

MaxXmitDataSegmentLength: 65536

FirstBurstLength: 65536

MaxBurstLength: 1048576

ImmediateData: Yes

InitialR2T: Yes

MaxOutstandingR2T: 1

************************

Attached SCSI devices:

************************

Host Number: 33 State: running

scsi33 Channel 00 Id 0 Lun: 1

Attached scsi disk sdc State: running

Current Portal: 192.168.2.35:3260,1027

Persistent Portal: 192.168.2.35:3260,1027

**********

Interface:

**********

Iface Name: default

Iface Transport: tcp

Iface Initiatorname: iqn.2024-12.com.hpe:hpevme1:42939

Iface IPaddress: 192.168.2.51

Iface HWaddress: default

Iface Netdev: default

SID: 2

iSCSI Connection State: LOGGED IN

iSCSI Session State: LOGGED_IN

Internal iscsid Session State: NO CHANGE

*********

Timeouts:

*********

Recovery Timeout: 5

Target Reset Timeout: 30

LUN Reset Timeout: 30

Abort Timeout: 15

*****

CHAP:

*****

username: <empty>

password: ********

username_in: <empty>

password_in: ********

************************

Negotiated iSCSI params:

************************

HeaderDigest: None

DataDigest: None

MaxRecvDataSegmentLength: 262144

MaxXmitDataSegmentLength: 65536

FirstBurstLength: 65536

MaxBurstLength: 1048576

ImmediateData: Yes

InitialR2T: Yes

MaxOutstandingR2T: 1

************************

Attached SCSI devices:

************************

Host Number: 34 State: running

scsi34 Channel 00 Id 0 Lun: 1

Attached scsi disk sdb State: running

pcuser@hpevme1:~$

これが認識されていないようであればiSCSIストレージ側のLUNマッピング設定やイニシエータのマッピング設定を見直す

マルチパスが動作しているかを確認する場合は「sudo multipath -ll」を実行して確認

pcuser@hpevme1:~$ sudo multipath -ll

3600a09807770457a795d5a554c634a58 dm-1 NETAPP,LUN C-Mode

size=100G features='3 queue_if_no_path pg_init_retries 50' hwhandler='1 alua' wp=rw

`-+- policy='service-time 0' prio=50 status=active

|- 34:0:0:1 sdb 8:16 active ready running

`- 33:0:0:1 sdc 8:32 active ready running

pcuser@hpevme1:~$

上記の様に、ツリーが表示されていればマルチパスが動作している

以前のバージョンではmultipathの設定を手動で行っていたが、ver 8.0.13では不要だった。

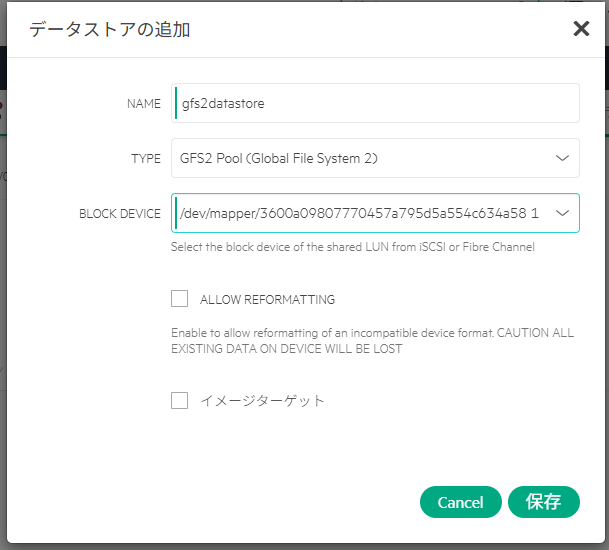

(4) HPE VME側でGFS2ファイルシステムの作成

[インフラストラクチャ]-[クラスター]で該当クラスタを選択し、[ストレージ]-[データストア]にて「追加」をクリックして表示される「データストアの追加」にて

「Type:GFS2 Pool (Global File System 2)」を選択し、「BLOCK DEVICE」の選択/dev/mapper/で始まるマルチパスデバイスを指定

しばらくファイルシステム作成が実施される

ver8.0.13時点ではファイルシステムが完成しても通知はなかったので、リロードなどして表示を更新する

これでGFS2データストアは作成できた。