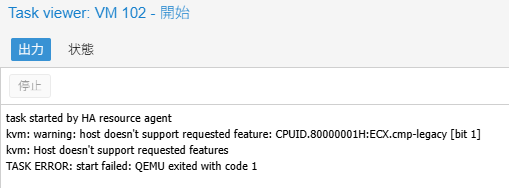

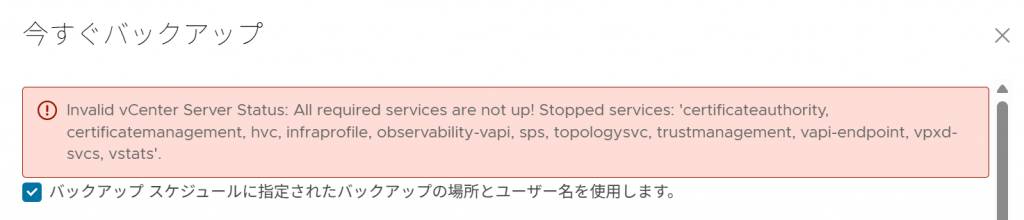

I tried to back up the VCSA virtual machine in a vSphere 8 environment, and the backup failed with these errors:

Invalid vCenter Server Status: All required services are not up! Stopped services: 'certificateauthority, certificatemanagement, hvc, infraprofile, observability-vapi, sps, topologysvc, trustmanagement, vapi-endpoint, vpxd-svcs, vstats'.

Invalid vCenter Server Status: All required services are not up! Stopped services: ‘statsmonitor’ (409983)
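A list this long is easy to misread. The Python sketch below pulls the stopped service names out of the message with a regular expression. It is a hypothetical helper and not part of any VMware tool. It assumes the message keeps the `Stopped services: '...'` form shown above, and the function name `stopped_services_from_error` is made up for illustration.

```python
from __future__ import annotations

import re

# Captures the quoted list after "Stopped services:". Both straight (')
# and curly (‘ ’) quotes appear in the messages above, so accept either.
_STOPPED_RE = re.compile(r"Stopped services:\s*['‘]([^'’]*)['’]")


def stopped_services_from_error(message: str) -> list[str]:
    """Return the service names listed in the backup error, in order."""
    match = _STOPPED_RE.search(message)
    if match is None:
        return []  # message does not have the expected form
    return [name.strip() for name in match.group(1).split(",") if name.strip()]


msg = ("Invalid vCenter Server Status: All required services are not up! "
       "Stopped services: 'certificateauthority, certificatemanagement, hvc'.")
print(stopped_services_from_error(msg))
# ['certificateauthority', 'certificatemanagement', 'hvc']
```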

I logged in to the VCSA VM over SSH. First I ran `service-control --status` to check the state of the services.

root@vcsa [ ~ ]# service-control --status

Running:

applmgmt lookupsvc lwsmd observability pschealth vc-ws1a-broker vlcm vmafdd vmcad vmdird vmware-analytics vmware-cis-license vmware-content-library vmware-eam vmware-envoy vmware-envoy-hgw vmware-envoy-sidecar vmware-perfcharts vmware-pod vmware-postgres-archiver vmware-rhttpproxy vmware-sca vmware-stsd vmware-updatemgr vmware-vdtc vmware-vmon vmware-vpostgres vmware-vpxd vmware-vsan-health vmware-vsm vsphere-ui vtsdb wcp

Stopped:

observability-vapi vmcam vmonapi vmware-certificateauthority vmware-certificatemanagement vmware-hvc vmware-imagebuilder vmware-infraprofile vmware-netdumper vmware-rbd-watchdog vmware-sps vmware-topologysvc vmware-trustmanagement vmware-vapi-endpoint vmware-vcha vmware-vpxd-svcs vstats

root@vcsa [ ~ ]#
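You may want to compare this output in a script rather than by eye. The minimal Python sketch below splits it into sections. It assumes the layout shown above: a `Running:` or `Stopped:` header followed by space-separated names. It also assumes that `service-control` prints this listing to stdout and that a Python 3 interpreter is available where you run it. The names `parse_status` and `read_status` are hypothetical.

```python
from __future__ import annotations

import subprocess


def parse_status(output: str) -> dict[str, list[str]]:
    """Split `service-control --status` output into {section: [service, ...]}."""
    sections: dict[str, list[str]] = {}
    current: str | None = None
    for raw in output.splitlines():
        line = raw.strip()
        if not line or line.startswith("root@"):
            continue  # skip blank lines and the shell prompt
        if line.endswith(":") and " " not in line:
            current = line[:-1]  # header such as "Running" or "Stopped"
            sections.setdefault(current, [])
        elif current is not None:
            sections[current].extend(line.split())
    return sections


def read_status() -> str:
    """Run `service-control --status` on the appliance and return its stdout.

    Assumption: the status listing is written to stdout. No exit-code check
    is done, because it is not known here whether stopped services change it.
    """
    result = subprocess.run(["service-control", "--status"],
                            capture_output=True, text=True)
    return result.stdout
```

On the appliance, `parse_status(read_status()).get("Stopped", [])` would return the stopped list. Elsewhere, paste the output into a string and pass that to `parse_status`.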

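Sure enough, the services named in the error message seem to be in the Stopped list, but the names don't quite match. For example, the error says `hvc`, while service-control shows `vmware-hvc`.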

So I checked the full list of services with `service-control --list`.

root@vcsa [ ~ ]# service-control --list

vmware-analytics (VMware Analytics Service)

applmgmt (VMware Appliance Management Service)

vmware-certificateauthority (VMware Certificate Authority Service)

vmware-certificatemanagement (VMware Certificate Management Service)

vmware-cis-license (VMware License Service)

vmware-content-library (VMware Content Library Service)

vmware-eam (VMware ESX Agent Manager)

vmware-envoy (VMware Envoy Proxy)

vmware-envoy-hgw (VMware Envoy Host Gateway)

vmware-envoy-sidecar (VMware Envoy Sidecar)

vmware-hvc (VMware Hybrid VC Service)

vmware-imagebuilder (VMware Image Builder Manager)

vmware-infraprofile (VMware Infraprofile Service)

lookupsvc (VMware Lookup Service)

vmware-netdumper (VMware vSphere ESXi Dump Collector)

observability (VMware VCSA Observability Service)

observability-vapi (VMware VCSA Observability VAPI Service)

vmware-perfcharts (VMware Performance Charts)

vmware-pod (VMware Patching and Host Management Service)

pschealth (VMware Platform Services Controller Health Monitor)

vmware-rbd-watchdog (VMware vSphere Auto Deploy Waiter)

vmware-rhttpproxy (VMware HTTP Reverse Proxy)

vmware-sca (VMware Service Control Agent)

vmware-sps (VMware vSphere Profile-Driven Storage Service)

vmware-stsd (VMware Security Token Service)

vmware-topologysvc (VMware Topology Service)

vmware-trustmanagement (VMware Trust Management Service)

vmware-updatemgr (VMware Update Manager)

vmware-vapi-endpoint (VMware vAPI Endpoint)

vc-ws1a-broker (VMware Identity Single Container Service)

vmware-vcha (VMware vCenter High Availability)

vmware-vdtc (VMware vSphere Distrubuted Tracing Collector)

vlcm (VMware vCenter Server Lifecycle Manager)

vmafdd (VMware Authentication Framework)

vmcad (VMware Certificate Service)

vmcam (VMware vSphere Authentication Proxy)

vmdird (VMware Directory Service)

vmonapi (VMware Service Lifecycle Manager API)

vmware-postgres-archiver (VMware Postgres Archiver)

vmware-vmon (VMware Service Lifecycle Manager)

vmware-vpostgres (VMware Postgres)

vmware-vpxd (VMware vCenter Server)

vmware-vpxd-svcs (VMware vCenter-Services)

vmware-vsan-health (VMware VSAN Health Service)

vmware-vsm (VMware vService Manager)

vsphere-ui (VMware vSphere Client)

vstats (VMware vStats Service)

vtsdb (VMware vTsdb Service)

wcp (Workload Control Plane)

lwsmd (Likewise Service Manager)

root@vcsa [ ~ ]#
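The list explains the mismatch. Each short name in the first error seems to match either a service with the same name (`observability-vapi`, `vstats`) or one with a `vmware-` prefix (`vmware-hvc`, `vmware-vpxd-svcs`, and so on). This prefix rule is only an inference from these two outputs, not documented behavior. The Python sketch below encodes it: it parses the `--list` output and tries each name as-is, then with the prefix. The names `parse_list` and `resolve` are hypothetical.

```python
from __future__ import annotations

import re

# One service per line: "<name> (<display name>)"
_LIST_LINE = re.compile(r"^(\S+) \((.*)\)$")


def parse_list(output: str) -> dict[str, str]:
    """Map service name -> display name from `service-control --list` output."""
    services: dict[str, str] = {}
    for raw in output.splitlines():
        match = _LIST_LINE.match(raw.strip())
        if match:  # the prompt line and blank lines do not match
            services[match.group(1)] = match.group(2)
    return services


def resolve(short_name: str, services: dict[str, str]) -> str | None:
    """Translate a name from the backup error into a service-control name.

    Assumption (inferred from the outputs in this post, not documented):
    the error sometimes drops a leading "vmware-", so try the name as-is
    first and then with that prefix.
    """
    for candidate in (short_name, "vmware-" + short_name):
        if candidate in services:
            return candidate
    return None  # no matching service in the list
```

`statsmonitor` from the second message has no entry in this list, so `resolve` returns `None` for it.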

The help shows an `--all` option, so for a start I simply ran `service-control --start --all`.

root@vcsa [ ~ ]# service-control --start --all

Operation not cancellable. Please wait for it to finish...

Performing start operation on service lwsmd...

Successfully started service lwsmd

Performing start operation on service vmafdd...

Successfully started service vmafdd

Performing start operation on service vmdird...

Successfully started service vmdird

Performing start operation on service vmcad...

Successfully started service vmcad

Performing start operation on profile: ALL...

Successfully started profile: ALL.

Performing start operation on service observability...

Successfully started service observability

Performing start operation on service vmware-vdtc...

Successfully started service vmware-vdtc

Performing start operation on service vmware-pod...

Successfully started service vmware-pod

root@vcsa [ ~ ]#
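`--all` starts everything. As the output shows, it handles the core services (lwsmd, vmafdd, vmdird, vmcad) first and then the ALL profile. You might prefer to start only the services the backup complained about. In that case `service-control --start <service-name>` can be run once per service. The Python sketch below builds those commands and, by default, only prints them (dry run). The helper names `start_commands` and `start_services` are hypothetical. Issuing the commands in plain list order, without any dependency handling, is an assumption of this sketch.

```python
from __future__ import annotations

import subprocess


def start_commands(services: list[str]) -> list[list[str]]:
    """Build one `service-control --start <name>` argv per service."""
    return [["service-control", "--start", name] for name in services]


def start_services(services: list[str], dry_run: bool = True) -> list[list[str]]:
    """Print the commands (dry run) or execute them one by one.

    The commands run in list order; the ordering `--all` applies (core
    services first, then the ALL profile) is not reproduced here.
    """
    commands = start_commands(services)
    for argv in commands:
        if dry_run:
            print(" ".join(argv))
        else:
            # check=True raises CalledProcessError on a non-zero exit
            subprocess.run(argv, check=True)
    return commands


start_services(["vmware-hvc", "vmware-vpxd-svcs", "vstats"])
# service-control --start vmware-hvc
# service-control --start vmware-vpxd-svcs
# service-control --start vstats
```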

Checking the status again shows that the services that had been stopped, including every one named in the error, are now running.

root@vcsa [ ~ ]# service-control --status

Running:

applmgmt lookupsvc lwsmd observability observability-vapi pschealth vc-ws1a-broker vlcm vmafdd vmcad vmdird vmware-analytics vmware-certificateauthority vmware-certificatemanagement vmware-cis-license vmware-content-library vmware-eam vmware-envoy vmware-envoy-hgw vmware-envoy-sidecar vmware-hvc vmware-infraprofile vmware-perfcharts vmware-pod vmware-postgres-archiver vmware-rhttpproxy vmware-sca vmware-sps vmware-stsd vmware-topologysvc vmware-trustmanagement vmware-updatemgr vmware-vapi-endpoint vmware-vdtc vmware-vmon vmware-vpostgres vmware-vpxd vmware-vpxd-svcs vmware-vsan-health vmware-vsm vsphere-ui vstats vtsdb wcp

Stopped:

vmcam vmonapi vmware-imagebuilder vmware-netdumper vmware-rbd-watchdog vmware-vcha

root@vcsa [ ~ ]#
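You can double-check that everything named in the error is now running. Compare the required names, already translated to their service-control names, against the new Running list. The sketch below does this with a hypothetical `missing_from_running` helper. The two lists are copied from the outputs in this post.

```python
from __future__ import annotations


def missing_from_running(required: list[str], running: list[str]) -> list[str]:
    """Return the required services that are not in the Running list."""
    running_set = set(running)
    return [name for name in required if name not in running_set]


# Names from the first error, translated to service-control names
required = [
    "vmware-certificateauthority", "vmware-certificatemanagement", "vmware-hvc",
    "vmware-infraprofile", "observability-vapi", "vmware-sps",
    "vmware-topologysvc", "vmware-trustmanagement", "vmware-vapi-endpoint",
    "vmware-vpxd-svcs", "vstats",
]
# "Running:" line from the second `service-control --status` above
running = """
applmgmt lookupsvc lwsmd observability observability-vapi pschealth
vc-ws1a-broker vlcm vmafdd vmcad vmdird vmware-analytics
vmware-certificateauthority vmware-certificatemanagement vmware-cis-license
vmware-content-library vmware-eam vmware-envoy vmware-envoy-hgw
vmware-envoy-sidecar vmware-hvc vmware-infraprofile vmware-perfcharts
vmware-pod vmware-postgres-archiver vmware-rhttpproxy vmware-sca vmware-sps
vmware-stsd vmware-topologysvc vmware-trustmanagement vmware-updatemgr
vmware-vapi-endpoint vmware-vdtc vmware-vmon vmware-vpostgres vmware-vpxd
vmware-vpxd-svcs vmware-vsan-health vmware-vsm vsphere-ui vstats vtsdb wcp
""".split()

print(missing_from_running(required, running))  # []
```

The result is empty. The six services still stopped (vmcam, vmonapi, vmware-imagebuilder, vmware-netdumper, vmware-rbd-watchdog, vmware-vcha) were not among those named in the error.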

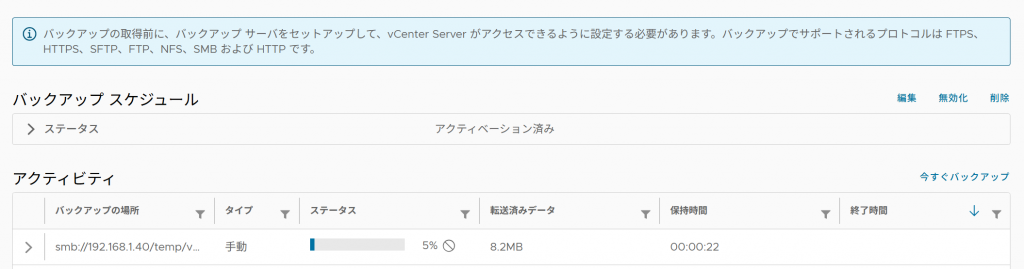

Wondering whether that would be enough, I ran the backup again. This time it started without any problems.

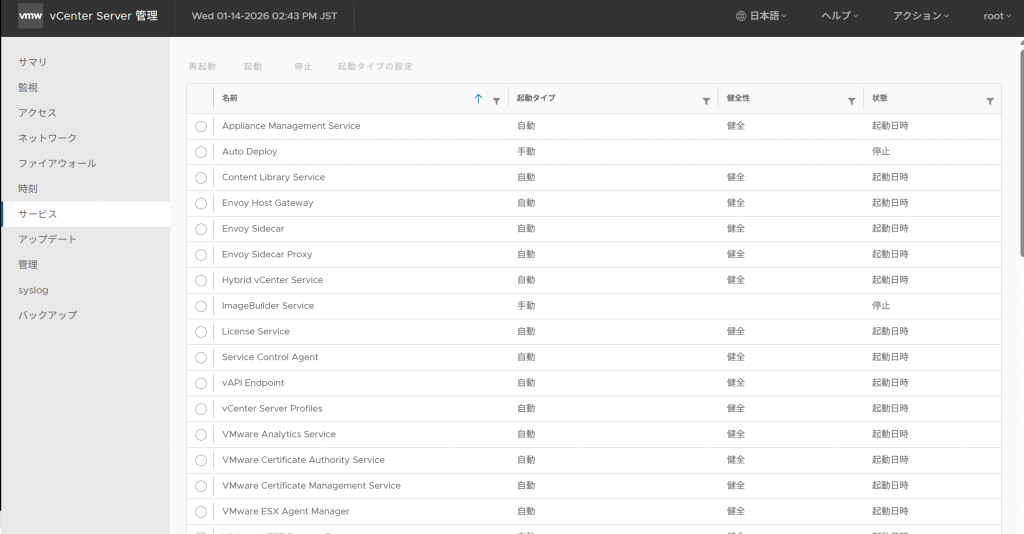

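Starting the relevant services manually should probably also work. Open the management interface at https://VCSA:5480/, select the services under "Services", and click "Start".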